My files are now uploaded. Good luck to anyone learning from this project; feel free to email me if you have questions.

The socket code I had created was having problems, and I was considering whether I should use it in my presentation. I wondered what the problem could be, but I knew the code was really close to being functional. Data was being transported across the network, but it was coming out garbled: it played slowly. I was working on this at the end of last week and over the weekend. When I returned, I met with Dr. Pankratz to go over places where a problem might occur. Since I don't have much experience with the data structures used in the socket, maybe my problem was there. Does it matter that the socket is sending information as (char *)? I take data from buffers of type short and put it into buffers of the same type. It took a long time to go over all the places to check. Dr. Pankratz recommended printing the audio data to the screen on the server and the client to make sure the data was making it across correctly. I also asked myself a question during our talk: "Am I absolutely sure that the size of my socket structures is equal to each chunk of my larger buffer?" If the answer is no, then that's probably the bug. If the sizes are not the same, the last part of each large buffer chunk will have bogus data in it. This would explain why I heard some of the sound at a slower rate: correct audio data was only in the first part of each large buffer chunk.
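A minimal sketch of the size check in plain C, assuming POSIX `read()` on a raw descriptor rather than any wrapper class. The names `CHUNK_SAMPLES`, `CHUNK_BYTES`, and `read_full` are made up for illustration, and the chunk size is arbitrary. The point is that the byte count is the sample count times `sizeof(short)`, and that the receiver keeps reading until one whole chunk has arrived, so no chunk ends in leftover data.

```c
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

/* Hypothetical chunk size, in samples, for one slot of the large buffer. */
enum { CHUNK_SAMPLES = 512 };

/* Byte length of one chunk: element count times element size. */
#define CHUNK_BYTES (CHUNK_SAMPLES * sizeof(short))

/* Read exactly `len` bytes from `fd` into `dst`.
   Returns 0 on success, -1 on error or if the peer closes early. */
int read_full(int fd, void *dst, size_t len)
{
    char *p = dst;  /* the (char *) view is only used for byte arithmetic */

    while (len > 0) {
        ssize_t n = read(fd, p, len);
        if (n == 0)
            return -1;          /* closed before a full chunk arrived */
        if (n < 0) {
            if (errno == EINTR)
                continue;       /* interrupted by a signal: try again */
            return -1;
        }
        p += n;
        len -= (size_t)n;
    }
    return 0;
}
```

A receiver would call `read_full(fd, chunk, CHUNK_BYTES)` with `short chunk[CHUNK_SAMPLES]`. Sending the bytes through a `(char *)` view does not change the samples, as long as both machines use the same byte order.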

After I went over the sizes a few times to make sure they matched, the sockets work correctly. The application pre-buffers audio data from the socket, then begins playing sound (emptying the buffer) while reading more from the socket (filling the buffer).

I modified the file-playing application to use the larger buffer. It will be nice to show how different buffer sizes and slow file reads affect sound playback. The application also pre-buffers a large sample array of data. This lets it build up audio data before playing. It's nice to hear it play fine and then begin stuttering as its large audio buffer runs dry.

Dr. Pankratz and I went over strategies for dealing with the large buffer my program will use. This buffer will be larger than the socket and audio device buffers so the program can keep playing sound for a little while if the socket sends too slowly. Following his suggestion, I came up with ideas on what to do if data is coming in too fast or too slowly. The program will have two placeholders in the buffer: one marking where data is added and one marking where it is removed. Each process will have to check before acting to ensure that the placeholders do not cross each other. The filling process (my main function) will make sure that it does not pass the removing process each time it wants to fill. The removing process will do the same. Each process makes sure it can act.
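The two-placeholder idea can be sketched as a small ring buffer in C. This is an illustration under assumptions, not the project's code: the names `Ring`, `ring_fill`, and `ring_drain` and the tiny capacity are hypothetical, and the sketch is single-threaded. If the filling and removing sides run on different threads, the shared fields would need a lock or atomic updates.

```c
#include <stddef.h>

enum { RING_CAPACITY = 8 };  /* hypothetical size in samples; tiny for illustration */

typedef struct {
    short  data[RING_CAPACITY];
    size_t fill_pos;   /* placeholder: where the filling side writes next */
    size_t drain_pos;  /* placeholder: where the removing side reads next */
    size_t count;      /* samples currently stored */
} Ring;

/* Filling side: store up to n samples without passing the removing side.
   Returns how many samples were actually stored. */
size_t ring_fill(Ring *r, const short *src, size_t n)
{
    size_t room = RING_CAPACITY - r->count;
    if (n > room)
        n = room;                      /* data arriving too fast: take what fits */
    for (size_t i = 0; i < n; i++) {
        r->data[r->fill_pos] = src[i];
        r->fill_pos = (r->fill_pos + 1) % RING_CAPACITY;
    }
    r->count += n;
    return n;
}

/* Removing side: copy up to n samples into dst without passing the filling
   side; any shortfall is padded with zeros (silence).
   Returns how many real samples were delivered. */
size_t ring_drain(Ring *r, short *dst, size_t n)
{
    size_t got = n < r->count ? n : r->count;
    for (size_t i = 0; i < got; i++) {
        dst[i] = r->data[r->drain_pos];
        r->drain_pos = (r->drain_pos + 1) % RING_CAPACITY;
    }
    r->count -= got;
    for (size_t i = got; i < n; i++)
        dst[i] = 0;                    /* data arriving too slowly: play silence */
    return got;
}
```

Keeping a separate `count` is one way to tell a full buffer from an empty one when both placeholders point at the same slot.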

Dr. Pankratz suggested I read in a text file to ensure that my data was being transported correctly. After I changed my buffers to char buffers, my text file displayed. I also began to experiment with sleeping the server and client. If the client sleeps longer than the server, the server continues transmitting at its own pace until the socket is full. When it is, the write command blocks, and the server sends again once the client has read from the socket. If the server is falling behind, the client waits until there is data before receiving from the socket. This seems to ensure that I won't lose any audio data in transit. There may be pauses, though, while the client or server is waiting. The audio device will be asking for data, and if I don't have it, I'll have to output silence. When Dr. Pankratz and I noticed this, we discussed making the socket non-blocking. My tests show that if the socket is non-blocking and the client tries to read when no data has arrived, it won't get anything. I could play silence or repeat the most recent sound data. There are lots of variables to play with. Another example that would show the loss of data would be inputting from the microphone. Somewhere down the line, the data could be discarded if a process is waiting. I'll work on that after I get the sound file data transported across the socket and sent to the audio device.
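A rough sketch of the non-blocking option in plain C, assuming a POSIX descriptor and the standard `fcntl()` and `read()` calls. The helper names `set_nonblocking` and `read_or_silence` are hypothetical. When nothing has arrived, the read fails with `EAGAIN` instead of waiting, and the sketch fills the rest of the buffer with zeros so the audio device still gets something to play.

```c
#include <errno.h>
#include <fcntl.h>
#include <string.h>
#include <unistd.h>

/* Put a descriptor into non-blocking mode. Returns 0 on success, -1 on error. */
int set_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags == -1)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) == -1 ? -1 : 0;
}

/* Try to read up to `len` bytes of audio into `dst`. Whatever did not
   arrive is filled with zeros, which is silence for signed PCM samples.
   Returns the number of real bytes read, or -1 on a genuine error.
   Note: a partial read may end in the middle of a sample; a fuller
   version would carry the odd byte over to the next call. */
ssize_t read_or_silence(int fd, void *dst, size_t len)
{
    ssize_t n = read(fd, dst, len);
    if (n < 0) {
        if (errno != EAGAIN && errno != EWOULDBLOCK && errno != EINTR)
            return -1;
        n = 0;                  /* nothing available right now */
    }
    memset((char *)dst + n, 0, len - (size_t)n);
    return n;
}
```

Repeating the most recent sound data instead of silence would mean keeping a copy of the last good buffer and copying from it in place of the `memset`.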

Dr. Pankratz and I discussed the current socket code I had. I need to change it to have a buffer that the sockets fill. My first attempts were prepared to send data to the audio device buffer right away. I began by making sure I knew how to initialize and use the NSData and NSMutableData data types because my data is transported down the pipe in these structures. After I knew how to use them, I created a client and server application that sends a known buffer of data down the socket. This was beneficial because I needed to make sure that the size of the data structure holding the data took the length and data type size into account. I next created a server that opened a sound file and transmitted the data to a client. My receiving data structure was initialized to zero, but soon it became populated with positive and negative integers. I wasn't sure if it was my sound data yet because I did not output it to the audio device, only the screen.

I worked some more on the socket code. I made a quick change to the client code so it can connect to a host given on the command line. I use "localhost" to test it out on my iBook and "10.0.2.1" to run it between the iBook and iMac. It's nice using the two computers because the iMac is the host for the iBook's internet connection; this lets it assign simple addresses to itself and the iBook. Much better than the changing IP address for the dial-up connection.

The current sockets are exchanging string data back and forth. I used this to get the sockets working and make sure they get connected. Now that they do, I have integrated my audio file reading code into the server socket application. I am also examining the data structures that I need to put the data in. In my audio file application, I filled a simple buffer with the sound data. Now I need to fit the buffer into an NSData structure before sending it down the socket. From looking at the API, the method for putting the data into the array seems apparent. Unfortunately, I have limited experience with these structures. I'm going to put the audio device code into the client application and then listen to the results.

Right now, I'm putting the sound writer part of my code on the back burner. I think the socket code is more important. I tried using an AudioFile API provided by Apple because when I asked the mailing list members where to start, they suggested it. The API does look straightforward, like they said, but to use it you also need to use data types from other, older APIs. There's an example for setting up audio output with this API, but it's kind of hard to follow. I tried following its example to play a file and only got a repeated, harsh noise. It's difficult to know where the problem is because the AudioFile API does not have a verbose example and the API uses structures from other frameworks that I'm unfamiliar with. The header file has helpful descriptions, but that isn't as nice as a walkthrough. I decided to put down that work and stick with the AIFC file reading example because I understand how it functions and it is like the sine generator programs in terms of referring to the HAL. I also examined AudioUnits because a programmer said I should use them instead of the HAL. I guess they're supposed to take care of converting sound to different sample rates and provide other helpful features. But without an example that explains how everything works, I chose the AIFC code.

I've finished polishing the AIFF player option. Now the program will catch when the user types ^C, clean up, and exit. I put in a software play-through option, too, with ^C capturing. The software play-through is really easy: the microphone's input buffer is simply copied to the output buffer. I'm now working on getting sockets set up. I have set up a socket connection between a client and server application. I'm using an Objective-C wrapper, which makes initialization and calls much easier. I need to familiarize myself with the data type used for the buffer (NSMutableData) because when I send a text string into the buffer, it seems that the server appends the string data. This causes my test string to have blank data in front of it. I'm going to focus on opening a file and sending it to the socket. I think I'll have the file serving option wait for a client to connect before serving the file. For a little variety, I will implement the microphone server to not wait for a client.

I have begun constructing the command line-based interface for my program. One example of the fun things I've dealt with is reading a whole line of input instead of just a single string, because file paths could have spaces. Now that the shell is being made, I'm starting to put my code into library-like form. My AIFF playing code used to be in the main function, but now it is in its own files and can be called with one function. I've put the code into separate, relevant functions.
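The line-versus-string difference comes down to `scanf("%s")` stopping at the first space, while `fgets()` reads through to the end of the line. A minimal sketch, with the hypothetical helper name `read_path_line`:

```c
#include <stdio.h>
#include <string.h>

/* Read one whole line (spaces included) from `in` into `buf` and strip
   the trailing newline. Returns `buf`, or NULL at end of input or on error. */
char *read_path_line(FILE *in, char *buf, size_t size)
{
    if (size == 0 || fgets(buf, (int)size, in) == NULL)
        return NULL;
    buf[strcspn(buf, "\r\n")] = '\0';   /* cut at the first CR or LF */
    return buf;
}
```

Calling `read_path_line(stdin, path, sizeof path)` keeps a path with embedded spaces as one piece.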

I've changed my error reporting to be more helpful. Besides describing what the error is about, each message carries a unique error code that makes it easier to find where the program crashed. Thankfully there haven't been many crashes.

When my program was playing, it would "spin its wheels" while it waited for the end of the sound file to be reached. I examined how much of my processor this was eating up; the program was using whatever time it could get (around 70% to 80%). I added a sleep command to only check for the end of file every second and I was happy to see the utilization drop to 3% or 4%.
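The change amounts to replacing a busy loop with a loop that sleeps between checks. A small sketch, assuming POSIX `sleep()`. The function `wait_until_done` and the stand-in predicate `countdown_done` are hypothetical; in the real program the check would be whatever reports that the end of the sound file has been reached.

```c
#include <unistd.h>

/* Call done(ctx) until it returns nonzero, sleeping `interval_seconds`
   between checks so the loop does not spin. Returns the number of checks. */
unsigned wait_until_done(int (*done)(void *ctx), void *ctx,
                         unsigned interval_seconds)
{
    unsigned checks = 1;
    while (!done(ctx)) {
        sleep(interval_seconds);   /* give the processor back while waiting */
        checks++;
    }
    return checks;
}

/* Stand-in predicate for illustration only: reports "not done" until the
   counter it is given reaches zero. */
int countdown_done(void *ctx)
{
    int *remaining = ctx;
    if (*remaining > 0) {
        (*remaining)--;
        return 0;
    }
    return 1;
}
```

With a one-second interval the program notices the end of the file up to a second late, which is the price of the lower processor use.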

I'm also looking at getting socket connections working. There is an Objective-C wrapper that encapsulates BSD sockets that I'm investigating right now.

I have begun collecting information for recording from a microphone and working on setting up a client and server section. Starting from the UNIX book from my operating systems course, I had to find the socket data structures that Mac OS X uses. The book describes sockets from a SunOS standpoint, while Mac OS X is built on FreeBSD. For future reference, the necessary files are in this directory: /System/Library/Frameworks/Kernel.framework/Versions/A/Headers/sys.

I've been working on modifying my sine application to use an outside buffer instead of one inside my IOProc. Right now I have a float pointer for which I allocate 8092 elements; it will hold my sine data and later the AIFF sound data. I can allocate space for it and initialize it to zero to check that it was allocated. My current difficulties come from writing my data to the array (which is a global, static variable), and because of that I can't get sound to be output. The error is a bad access error. I can reference the array fine from the function that initializes it, and I'll try using the array from functions that are passed the array pointer to see if those functions can see the array contents.

The walkthrough was a nice wake-up call for me. After walking through the current status of my project, Dr. Pankratz asked me to begin drawing what I was doing. This was helpful because it made me aware that my explanations need to be very explicit: topics that are common knowledge to me are unknown or sketchy to my audience. I'll be sure to put this realization to use during my presentation.

I thought my file reader was working but now that I have returned to it, I find that it is not functioning. I'm not sure what the problem is but I'll start checking a few possibilities. It might be that I was handing off file pointers between functions and losing my current position in the file. Otherwise, my buffer may not have the data it needs; I'll check its contents to be sure there's valid data there.

I'm getting ready for my walkthrough presentation by polishing my current applications. I've changed the sine wave program from a C-based command-line program to an Objective-C-based application. The earlier program generated a sine wave based on a command line argument and had a constant amplitude. The new application has two sliders for adjusting the frequency and amplitude of the sound. I'll attempt creating a GUI for the file reader too.

I've been able to play a sound from a file, but my support is rudimentary. I parse through the file to the SSND data chunk and play the sound. I'm going to start working on reading the COMM chunk to get the details of the sound file. The current program takes advantage of the fact that I already know all about the sound file.
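Finding a chunk follows from the IFF layout: a 12-byte "FORM" header, then chunks that each start with a four-character ID and a big-endian 32-bit size, with odd-sized chunks padded to an even length. The sketch below walks a file image already loaded into memory. The function names `read_be32` and `find_chunk` are hypothetical, and this is an illustration rather than the parser described above.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Read a 32-bit big-endian integer (IFF files store sizes big-endian). */
static uint32_t read_be32(const unsigned char *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8)  |  (uint32_t)p[3];
}

/* Search an in-memory AIFF/AIFC image for the chunk named `id`
   (for example "SSND" or "COMM"). On success, returns a pointer to the
   chunk's data and stores its size in *size_out. Returns NULL if the
   chunk is missing or the image is malformed. */
const unsigned char *find_chunk(const unsigned char *file, size_t len,
                                const char id[4], uint32_t *size_out)
{
    /* Container header: "FORM", total size, then the form type. */
    if (len < 12 || memcmp(file, "FORM", 4) != 0)
        return NULL;
    if (memcmp(file + 8, "AIFF", 4) != 0 && memcmp(file + 8, "AIFC", 4) != 0)
        return NULL;

    size_t pos = 12;
    while (pos <= len && len - pos >= 8) {
        uint32_t size = read_be32(file + pos + 4);
        if (size > len - pos - 8)
            return NULL;                     /* chunk claims more than remains */
        if (memcmp(file + pos, id, 4) == 0) {
            *size_out = size;
            return file + pos + 8;
        }
        pos += 8 + (size_t)size + (size & 1u);   /* skip data plus pad byte */
    }
    return NULL;
}
```

In an AIFF file the SSND data begins with two 32-bit fields (offset and block size) before the samples, so playback would start 8 bytes into the returned region.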

I've been reading through the UNIX book from my Operating Systems class to get ready for the networking phase of my project.

While I haven't made a sound file play yet, I believe that I'm ready to do it shortly. I encountered a little trouble going through the chunks of the file header. When I read the SSND chunk ID (the chunk that contains the sound), I would not get 'SSND' but 'SSND\223\324'. Since I was dealing with these chunk IDs as strings, the null character wasn't found, and that caused a problem. I asked the mailing list, and they said that a terminating null character is not required in the chunk, so I now compare the data as a four-character array. I believe I'm ready to play a sound because I have looked through an example and can follow what is going on. The IOProc takes care of reading the file and placing the data in the buffer. The data also needs to be trimmed to the range of -1 to 1.
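Two small pieces from this entry can be sketched in C: comparing a chunk ID as four raw bytes with `memcmp()` so no terminating null is needed, and bringing samples into the range of -1 to 1. The function names are hypothetical, and the conversion assumes 16-bit signed samples, which the entry does not state.

```c
#include <string.h>

/* Compare a 4-byte chunk ID against a name such as "SSND". memcmp looks
   at exactly four bytes, so the ID does not need a terminating null.
   `expected` must be at least four characters long. */
int chunk_id_is(const char id[4], const char *expected)
{
    return memcmp(id, expected, 4) == 0;
}

/* Scale a signed 16-bit sample (assumed format) to a float in [-1, 1). */
float sample_to_float(short s)
{
    return (float)s / 32768.0f;
}

/* Trim any float sample that strays outside -1..1. */
float clamp_unit(float x)
{
    if (x > 1.0f)
        return 1.0f;
    if (x < -1.0f)
        return -1.0f;
    return x;
}
```

With `strcmp()`, the ID bytes followed by `\223\324` would never match "SSND", because the comparison runs on past the fourth byte looking for a null.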

I also downloaded the socket code from my Operating Systems course and tried compiling it. I was able to make it compile and create a socket. The bind didn't work because I did not start up the server and the client.

My recent work has been examining the headers of IFF files. I read in and go through the file to see what format it is in and how big it is. I've been working with AIFF and AIFC files so far. I needed to review some C terminology because I was in a C++ mindset.

I usually approached getting sound to play with despair. The example code I had to look at confused me, and after many attempts, all I had was an ugly sound coming from the device. When I found Raphael Sebbe's CoreAudio Example, I was revived because it was exactly what I was looking for: a simple IOProc that creates sine waves. All the work is in the IOProc, so it's easy to follow. (That's just what I need.) The method, creating an array for the sine wave data, is also one that Dr. Pankratz mentioned to me. This is a great starting point. I will need to generalize the IOProc for input or output.

More relevant emails that deal with questions Dr. Pankratz and I had: "I assume a 'frame' here must be what I've been calling a 'sound sample'. Is that correct? But then what's a 'packet'? I'm guessing a packet contains one frame for each channel of sound?"

Yes - the packets are in the description structure to deal with encoded data (like AAC) that has one packet of data for multiple frames (1024).

I needed to start getting sound to play using a function I built myself. My function makes beeps that depend on the frequency I specify. I'll try to get a tone now.

The earlier data structures that mystified me were revealed in the CoreAudioTypes header file. Audio Streams and Audio Devices are detailed.

It seems that AudioUnits aren't suitable for my project. From what I have read about them, they seem to be used to add effects (e.g., reverb) and can be chained together to do lots of neat stuff.

I have used a more suitable example to get a sound file playing. The application is SoundExample from the Omni Group. The application plays AIFC files. I tried playing AIFF files to see what would happen, and only white noise came out. AIFC seems to be the format of the files on an audio CD, and I would like to discover the difference between AIFC and AIFF. While the two examples have been beneficial to understanding how to get things working, I need to implement an IOProc myself to feel confident that I know what's going on.

I think I will use aspects of the SoundExample implementation as a demonstration. The application calls fread inside the IOProc; that call can block and cause the audio to stutter. James McCartney pointed out the shortcoming and recommended using threads to make the IOProc more efficient.

This page has a diagram showing the relationship between the Application, Audio HAL, Driver (the sample buffers), the DMA engine, and the Audio Device.

I have found the Writing Audio Device Drivers walkthrough. This is the in-depth discussion I needed. I am learning about buffers and the design of the Mac OS X Audio System.

I've gone through more mailing list emails. I've found a real gem: an Objective-C wrapper for dealing with the C-based, low-level HAL.

Today I found a nice site that describes the header of AIFF files; this is the format my application will read and write.

I read through a lot of emails in the mailing list archive today. Besides putting many bookmarks in my email library, I found Sinewave from SuperCollider and the SoundExample from the Omni Group. Sinewave changes frequencies, volume, and panning from user-manipulated sliders. The SoundExample is a very simple application for playing CDs through CoreAudio. I look forward to investigating both.

Bill's email: "When you're code runs from a context that is provided to your application from Core Audio, it is a fully fledged user level thread."

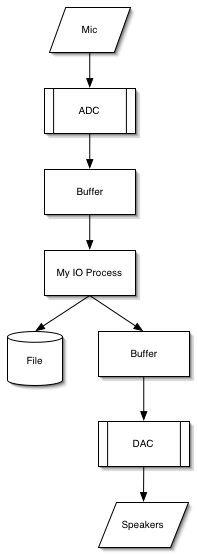

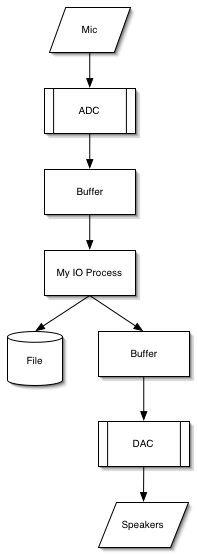

An email from Laurent Cerveau helped me picture the audio path.

I examined the CoreAudio API. The lowest level of the API is the Audio Hardware Abstraction Layer (HAL). There is another layer above the HAL called AudioUnits that I will investigate. From the mailing lists, it seems that I may be spared some of the lower-level complexity but still be able to manipulate sound. I'll set that as a goal for next week.

I have started the interface for my application. There are two buttons: one for making sound and one for stopping sound. I examined the code from Noise, an application from Blackhole Media, and used the code to output white noise when the start button is pressed. I now know how to find the size of the device list, use the size to determine the number of devices attached, find the default output device, tell the device to use my input/output process (a C function), and output sound.