- Aldo Gonzalez

5/5/21 Finishing, Presentation, and Defense Prep!

Done:

These have been the most eventful two weeks of the semester! I put a lot of work into finishing my project (or, as a friend says, getting it as good as possible). I also gave my presentation and prepared for my defense. I'll start with the finishing part.

After refining the cycle, I turned to the problem of the Tk window not responding during calculations. It turns out this is a common problem in Tk. I wanted to fix it so that I could update the screen every x cycles. After much digging into threads and processes in Python and Tk, and talking to Dr. McVey, I realized there wasn't a simple solution. Threads alone weren't working, according to our own extensive testing as well as Stack posts. And I was too far into the project to attempt a change that would heavily affect its conceptual integrity, such as setting up Tk as a separate process. Then I realized that a button can always call a function, so I changed my code so that the button's function runs some number of cycles and then updates the display. This lets the calculations finish and the UI unclog before the display updates.

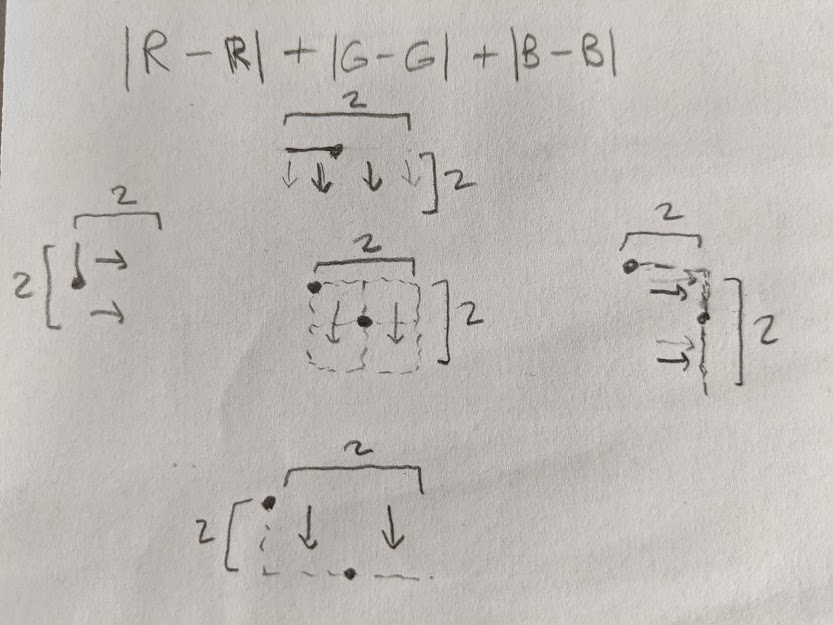
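
To make the button idea concrete, here is a minimal sketch of the pattern, not my actual project code. The names `run_cycle`, `run_batch`, and `CYCLES_PER_CLICK`, the batch size of 50, and the list-of-floats "population" are all placeholders I made up for illustration. `tkinter` is imported inside `main` only so that the batching logic can be used without a display.

```python
import random

CYCLES_PER_CLICK = 50  # hypothetical batch size, not the project's real value


def run_cycle(population):
    """Stand-in for one genetic-algorithm cycle (placeholder logic only)."""
    index = random.randrange(len(population))
    population[index] = random.random()  # pretend mutation
    return population


def run_batch(population, cycles=CYCLES_PER_CLICK):
    """Run a fixed number of cycles, then hand back something to display."""
    for _ in range(cycles):
        run_cycle(population)
    return max(population)


def main():
    import tkinter as tk  # imported here so run_batch works without a display

    root = tk.Tk()
    population = [random.random() for _ in range(25)]
    status = tk.StringVar(root, value="Press Run")
    cycles_done = 0

    def on_run_clicked():
        # The window is frozen while this callback runs. Once it returns,
        # Tk redraws, so the label shows the result of the whole batch.
        nonlocal cycles_done
        best = run_batch(population)
        cycles_done += CYCLES_PER_CLICK
        status.set(f"cycle {cycles_done}: best = {best:.3f}")

    tk.Button(root, text="Run", command=on_run_clicked).pack()
    tk.Label(root, textvariable=status).pack()
    root.mainloop()
```

Calling `main()` opens the window. Each click still blocks the UI for one batch, but because the callback returns after a bounded amount of work, the display gets a chance to refresh between batches.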

For the fitness function, I changed it to measure the difference between RGB values, which is a critical update for supporting multi-image runs. I also changed which pixels I sampled, from every pixel to 2x2 packets of pixels (as initially suggested by Dr. McVey). This sped up the single-image runs and would still pick up enough detail for multi-image runs.
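
Here is a rough sketch of what a packet-based RGB difference can look like. It is one possible reading, not my exact function: I'm assuming images are nested lists of `(r, g, b)` tuples, that each 2x2 packet is averaged before comparing, and that the score is a sum of absolute differences where lower is better. The names `packet_mean` and `rgb_difference` are made up for this example.

```python
def packet_mean(image, top, left):
    """Average RGB of the 2x2 packet whose top-left corner is (top, left)."""
    pixels = [image[top + dy][left + dx] for dy in (0, 1) for dx in (0, 1)]
    return tuple(sum(pixel[c] for pixel in pixels) / 4 for c in range(3))


def rgb_difference(tile, target):
    """Sum of absolute RGB differences over 2x2 packets (lower is better).

    Assumes both images have the same size; an odd trailing row or
    column is ignored.
    """
    height, width = len(target), len(target[0])
    total = 0.0
    for top in range(0, height - 1, 2):
        for left in range(0, width - 1, 2):
            tile_rgb = packet_mean(tile, top, left)
            target_rgb = packet_mean(target, top, left)
            total += sum(abs(a - b) for a, b in zip(tile_rgb, target_rgb))
    return total


black = [[(0, 0, 0)] * 4 for _ in range(4)]
white = [[(255, 255, 255)] * 4 for _ in range(4)]
print(rgb_difference(black, black))  # 0.0
print(rgb_difference(black, white))  # 3060.0 (4 packets x 3 channels x 255)
```

Comparing one value per packet instead of one per pixel cuts the number of comparisons to a quarter, which fits the speedup I saw on single-image runs.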

The multi-image cases took several days of thinking, testing, and image selection and editing. I found that the fewer colors an image had (or, at least, the packet area), the better for both the fitness function and the visual appearance. Initially, I had selected dozens of dog images, but this revelation led me to narrow it down to around 10. I also had to crop those, usually leaving only the faces. This significantly helped my algorithm. After plenty of testing, I found that it works! Of course, for the result to resemble the final image at all, you need lots of tiles, and I found that takes much more time to run (because there are more tiles to put in the best spots). Here is a single-image 100-tile run that took 1 hour. For reference, the 25-tile runs above took 2 minutes!

Here is a multi-image 200-tile run that took 50 minutes:

As you can see, the black and brown parts of the dog got the correct tiles.

And here is a multi-image 1000-tile run that took 10 hours:

You can see similar results there, especially with the black.

I was already amazed that genetic algorithms work at all, so it was thrilling to see that mine works even without perfect matches, with some distractions, and with lots of tiles.

That's when I began to prepare for my presentation, which was on 5/1. I thought that went well. I had successful results to show, and I combined that with accessible explanations of the theory and my implementation.

After that, I made some UI additions to prepare for my defense. The major one was input boxes so you can easily select parameters. I pre-filled them with what I've found to be the best parameters for single-image runs. Another benefit is that the pre-filled values show the user what format the numbers should be in. I also made some color, button, and layout changes for a nicer look.
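
For anyone curious, pre-filled input boxes in Tk can be sketched like this. The parameter names and default values below are placeholders I invented for the example, not my real best parameters, and `build_form` and `parse_parameters` are hypothetical helper names. As before, `tkinter` is imported inside the function so the parsing part works without a display.

```python
# Hypothetical parameter names and defaults, for illustration only.
DEFAULTS = {"tiles": "25", "cycles_per_click": "50", "mutation_rate": "0.05"}


def parse_parameters(raw):
    """Convert the text from the input boxes into numbers."""
    return {
        "tiles": int(raw["tiles"]),
        "cycles_per_click": int(raw["cycles_per_click"]),
        "mutation_rate": float(raw["mutation_rate"]),
    }


def build_form(root):
    """Create one labeled, pre-filled Entry per parameter."""
    import tkinter as tk  # imported here so parsing works without a display

    entries = {}
    for row, (name, default) in enumerate(DEFAULTS.items()):
        tk.Label(root, text=name).grid(row=row, column=0, sticky="w")
        entry = tk.Entry(root)
        entry.insert(0, default)  # the default doubles as a format example
        entry.grid(row=row, column=1)
        entries[name] = entry
    return entries
```

When the run button is pressed, something like `parse_parameters({name: entry.get() for name, entry in entries.items()})` turns the box contents into usable values. `int` and `float` raise `ValueError` on badly formatted input, so a real form would want to catch that.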

And today, I realized something incredible (and I thought I was done being impressed!). Take a look at how the two earlier multi-image test runs look when shrunk down:

They really look like the dog! Wow!