|

This week was PRESENTATION WEEK. I presented on Wednesday and felt that I did a good job. My (very wonderful) friend volunteered to help with my demonstration, which I appreciate (and so should everyone else, since I didn't need to pick an assistant from the audience The Price Is Right-style). Now, I am preparing for my defense and getting my website done.

I've really enjoyed how this project went throughout the semester for me. Even though I was very anxious and apprehensive at the beginning of the semester, I feel that, by hitting the ground running on this program, I was able to accomplish a lot and managed to avoid a lot of stress with this project. I enjoyed the problems I worked through and understanding the logic of the Kinect. I am happy with what I have accomplished this semester and am glad that it all came together.

This past week has been good for wrapping up the loose ends with this program and beginning to prepare my presentation for Wednesday. I had my friend play around with this program to see where I could make it more user-friendly as well as sort out a major error that I stupidly overlooked.

To make my program more user-friendly, I modified the directions for Bust A Move so that the skeleton changes from orange to purple when a user locks in to record a dance, letting them see when to start moving. This is a major improvement from the flash of green circles and prevents the user from having to read anything from afar. It's a very noticeable change. My friend was very useful in creating recorded dances for the program so that, for my presentation, actual dance moves are displayed (not just the simple arm waving motions I had been testing my program with). I had an issue where Routines and Partner Up would sometimes crash randomly after being run multiple times. Upon debugging, I noticed that an array counter I had established was not being set back to 0 every time these methods were called. So, that was a humbling point in the week.

This week, I will be presenting. My tasks for this week are finalizing my presentation, teaching my friend (who will be part of my demonstration) what she will be doing, recording a back-up demo video, and triple-checking everything to make sure I am ready for Wednesday.

I've spent the past week ironing out certain details within my program, since all of my requirements are now met. Now, I am refining the application. One of the biggest problems has been figuring out how to prevent a second skeleton from being drawn on screen while a user is being tracked. (Turns out, you can't do this when the user can be leaving and re-entering the frame, since the Kinect registers each person as their own Skeleton with their own TrackingID that gets trashed once that skeleton leaves the frame.) So, in my instruction manual (which I am currently working on), I will be making a note that in Bust A Move, only ONE user can be in front of the Kinect. This isn't exactly ideal since I know that will not stop users from trying. A Kinect can display at most 2 skeletons at a time, so I will not be giving this warning for Freestyle since it only truly becomes an issue once a user is recording a move.
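The crash in Routines and Partner Up described above is a stale-state bug: a counter that outlives the call it belongs to. Here is a minimal sketch of that failure mode in Python (the project itself is written in C#). The class name, method name, and `reset_counter` flag are hypothetical and exist only to show the buggy and fixed behavior side by side.

```python
import random

MOVES_PER_ROUTINE = 3  # the project's Routines use 3 moves


class RoutinePlayer:
    """Hypothetical stand-in for the code that builds Routines and Partner Up."""

    def __init__(self, saved_moves):
        self.saved_moves = list(saved_moves)
        self.selected = [None] * MOVES_PER_ROUTINE  # fixed-size array
        self.count = 0  # index of the next free slot in `selected`

    def build_routine(self, reset_counter=True):
        # Without this reset, `count` keeps its value from the previous call
        # and the first write below lands past the end of the array.
        if reset_counter:
            self.count = 0
        for move in random.sample(self.saved_moves, MOVES_PER_ROUTINE):
            self.selected[self.count] = move
            self.count += 1
        return list(self.selected)
```

With `reset_counter=False`, the first call works and the second one raises an `IndexError`, which matches the "fine once, crashes on repeat runs" symptom.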

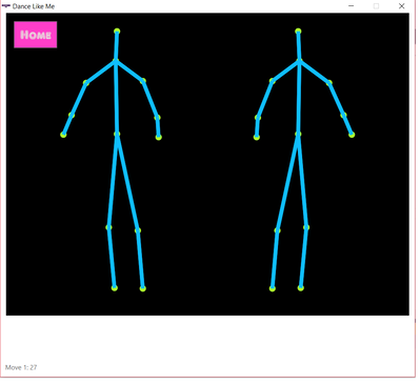

I've been making slight modifications to my program to refine it, including adding hover-based instructions for the users (via a ToolTip in WPF). I also tested my program on a lab machine and will do so again later this week once I have everything sorted out. My biggest goals for this week are getting the circles in Bust A Move to have a more accurate range and collecting actual dance moves for the demonstration (right now, most of them consist of me waving my arms around, nothing like the Macarena or YMCA). I don't doubt that I will be able to find people who are willing to record moves for this.

It's been a pretty solid week for this project, overall. I've decided that a Routine will consist of 3 randomly selected dance moves and those will play for the user (a kill-switch is in place if the user is bored by the randomizing). I also figured out how I will do the partner routines for my 4th requirement, where I have the skeleton on the right mirroring the one on the left (see below). Partner routines (under Partner Up) are constructed the same way as Routines, where 3 moves are randomly selected to dance to. So, I have working components for all of my requirements now.

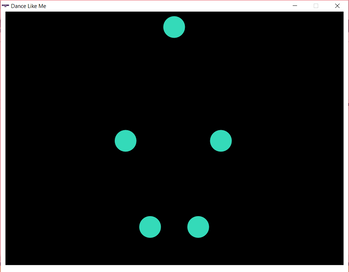
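Here is a rough Python sketch of the two ideas above: picking 3 saved moves at random and drawing a partner as a mirror image. It is not the project's C# code. The function names, the 2D `(x, y)` frame layout, and the `offset` that slides the original skeleton into the left half of the 640-pixel-wide image are all assumptions made for illustration.

```python
import random

FRAME_WIDTH = 640  # width of the WPF image mentioned in this blog


def pick_routine(saved_moves, count=3, rng=random):
    """Choose `count` distinct saved moves in random order."""
    return rng.sample(saved_moves, count)


def partner_frames(frame, offset, width=FRAME_WIDTH):
    """Return (left, right) skeletons for one frame of (x, y) joints.

    The left skeleton is the recorded one shifted left by `offset` pixels;
    the right skeleton is its reflection about the vertical centre line,
    so the two never share the same starting points.
    """
    left = [(x - offset, y) for (x, y) in frame]
    right = [(width - x, y) for (x, y) in left]
    return left, right
```

For example, a joint recorded at x = 320 with `offset=160` is drawn at x = 160 for the left dancer and x = 480 for the mirrored partner.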

The biggest goals for this week are improving Bust A Move's coordinate range for the 5 circles to lock in so that it's easier for users to initiate and end a move (currently Bust A Move works, but sometimes the positions are fidgety, so I'm trying to improve that) and eliminating certain smaller issues. These smaller issues include preventing a second skeleton from being drawn to the screen (NOTE: the most a Kinect will pick up on is 2) and handling when the Kinect is unplugged during execution (I handle when no Kinect is plugged in at initial execution, but not during). These are finicky details that will require a lot of situational testing, but I am hopeful about resolving these issues. I will also have some of my non-CS friends test this game to see what questions/concerns/issues/ideas they have.

So, this past week has been a bit more sluggish than I anticipated, but I still have made some significant progress with my capstone and have an idea of my goals for this week. I finished getting the remaining "lock in" points for Bust A Move so that a user can line up their head, hands, and feet with them and, once all 5 dots are highlighted, they turn green to indicate that the user is now being recorded. These dots are subject to being moved, but they work well right now (see attached picture for how they look sans skeleton). The main struggle that I have with these dots is figuring out where they are in the Kinect's coordinate space (which has 3 axes: X, Y, Z) when I had to place them on the WPF image (which is the standard 640 by 480). This is difficult to do in real-time with one person - fortunately, I can find volunteers who are willing to stand in front of the camera while I track coordinates.

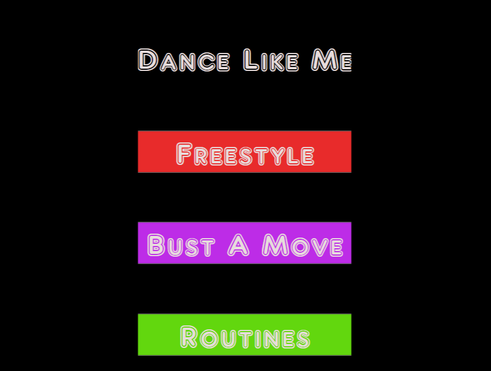
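The lock-in check described above comes down to asking whether each of the 5 joints is within some distance of its dot. Below is a small Python sketch of that test, not the project's C# code. It assumes the joints have already been converted from the Kinect's 3D skeleton space to 2D pixels on the 640 by 480 image. The target coordinates and the radius are made-up placeholders, since the real values are not given here.

```python
import math

# Hypothetical dot centres in image pixels; the real positions are not
# stated in this blog.
TARGETS = {
    "head": (320, 60),
    "hand_left": (140, 200),
    "hand_right": (500, 200),
    "foot_left": (270, 440),
    "foot_right": (370, 440),
}
RADIUS = 25  # assumed tolerance in pixels


def joints_in_targets(joints, targets=TARGETS, radius=RADIUS):
    """Return the names of the joints that sit inside their target circle."""
    hit = set()
    for name, (tx, ty) in targets.items():
        if name in joints:
            x, y = joints[name]
            if math.hypot(x - tx, y - ty) <= radius:
                hit.add(name)
    return hit


def is_locked_in(joints, targets=TARGETS, radius=RADIUS):
    """True only when all five joints are inside their circles."""
    return joints_in_targets(joints, targets, radius) == set(targets)
```

In a sketch like this, the "fidgety" feel is mostly governed by `radius`: a larger value makes locking in easier but also easier to trigger by accident.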

This week, I want to finalize the Routine option, where the program will randomly pull 3 dance moves that have been saved and perform those moves in a routine (potential idea: having a pre-programmed "Bow" dance that is attached to the end of the Routines to signal the end?). As a design choice and, in accordance with the 3rd Requirement for my project, the computer, NOT the user, will be constructing the routines. I believe that this will be fairly straightforward to implement overall and I have ideas for how I will set up the data structures to reduce code redundancy (I am trying to avoid 13 individual segments to read in the data and am instead considering an array of lists so that this could be accomplished in a for loop), so I'm not concerned about this step. The biggest issue with playing back a routine is the flashing, almost nausea-inducing, strobe-light effect that redrawing the skeleton produces. I will be looking into a potential way to reduce this, but I am skeptical. My only other main concern relates to the 4th Requirement for this project, which requests a dance partner for the computer; I'm uncertain of how realistic this step will be and how I can coordinate a partner without (maybe) having two skeletons each doing their own routines on the same screen together, but then they would most likely overlap and you would only see one (since the skeletons share the same starting points and the area isn't big). I just don't know how feasible this is with the Kinect environment and the coordinate plane. Hopefully, I will be able to ask either Dr. McVey or Dr. Pankratz this week about this requirement.

So, I did not work on my capstone during spring break. This was intentional as I set goals to have most of the conceptual work for this project done before I left campus the Friday before spring break started. I knew I would not be working on this project while in Myrtle Beach, so I planned accordingly. Now for the goals of this week:

I am in the first time slot for walk-throughs this Tuesday, so I intend on having an .exe that I can run to show my peers where I am with my project as well as receive feedback. Therefore, I need to slightly improve the usability and functionality of my work to the extent that a demo is feasible (so, including back buttons and adjusting the starting/stopping dots for Bust A Move). This week, I also want to get a better idea of how the computer will construct routines for the dance moves. Since I can now draw skeletons to the screen, it is now a matter of deciding whether routines will be randomized, limited to a set number of moves, allow a user to create routines themselves, etc. This is something I may want feedback from my peers on. I also want to figure out the placement of the starting/stopping dots for Bust A Move if possible this week, depending on what I decide for Routines.

This has been a BIG week in terms of accomplishing goals. As discussed in previous blogs, I wanted to be able to redraw collected points to a screen before I went on spring break so that I can tackle more specific problems in the second half of the semester. Just today, I managed to solve the problem that has been plaguing me for the past TWO WEEKS; I can successfully redraw points to the screen in the time that they were captured (each dot getting 100 milliseconds of screen time) as well as connect those points to look like bones. Needless to say, today has been huge. The biggest issue was fiddling around with the DrawingGroup and DrawingContext of the program and realizing that, once the DrawingGroup has been opened with Open(), it should NOT be reopened - instead, it should be added to with Append(). So that was fun. The main focus now with redrawing is smoothing out the image in a way that makes the redrawings seem less nauseating (think of how strobe lights flash; the appearance now is quite similar, which is not ideal) as well as determining how routines will be constructed (randomized, user decides, etc). But, for now, having this part completed is significant.

While I was attempting to solve this major issue, I was working through other tasks, such as making sure that the rest of the application was working well. This includes providing back buttons for users for Freestyle mode as well as a start button and an end screen for Bust A Move (see one of these screens below). I also managed to eliminate the scaling issue I had by adding an attribute to my Window in my XAML file: ResizeMode="NoResize" will prevent users from manually adjusting screen size. When I get back from my spring break, I intend on solving other issues and handling other tasks that make my program more user-friendly, as opposed to merely satisfying requirements. My To-Do list is ever-growing, but it's helping me think through the various tasks to make this project as good as it can be.

This past week has had significantly fewer accomplishments than the previous one. I tried throughout the week to get the dots from one joint to draw across the screen in a timed fashion (one appearing approximately every second so I could track its progress - that time will be shorter in the completed application). I have still not had success with this. I spoke with Dr. McVey this past week about it and her solution should work well, as nearly all of the components for a timer are working, except I cannot get anything drawn on the screen right now. Hopefully, I just have a minor error that I can resolve after meeting with her.

The biggest goal for this week is the same as last week: getting a skeleton to replicate a saved routine across the screen with the correct timing. I want to go into spring break having the basic concepts of this project done so that I can further expand after break (including: stopping Bust A Move and returning to the home screen after one dance is recorded, figuring out how I would like routines to be generated, etc). Based on Dr. McVey's feedback, I will be avoiding using DrawingContext objects, which is what the Kinect's code promotes, to draw ellipses on screen. The only major issue with this (that I can think of) is the fact that I then am not using the Kinect's methods for drawing skeletons, but the Graphics class in C# should work just as well.

This past week has been pretty exciting for getting a dance move to be recorded. I can currently have a player "lock in" to the game to start dance moves, perform that move, "lock out," and that move is saved. I am currently tracking 13 joints on a skeleton and saving every third data point. I am using a list of strings to hold this data and writing it out to text files. The goal for this week is to try to redraw these points on screen in the same time span that they were collected. (Currently, when redrawing these points on the screen, they only last for less than a second.) To be able to redraw these moves on screen would be the next major step of this project to have it assemble the routines.
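To make "saving every third data point" to a text file concrete, here is a small Python sketch (the project itself is in C#). The file layout, one comma-separated line per kept frame, is an assumption, since the actual format isn't described here, and the function names are hypothetical.

```python
def sample_every_third(frames, step=3):
    """Keep frames 0, 3, 6, ... and drop the rest."""
    return frames[::step]


def frame_to_line(frame):
    """Serialise one frame (a list of (x, y, z) joints) as one text line."""
    return ",".join(f"{value:.4f}" for joint in frame for value in joint)


def line_to_frame(line):
    """Inverse of frame_to_line: rebuild the list of (x, y, z) tuples."""
    values = [float(v) for v in line.split(",")]
    return [tuple(values[i:i + 3]) for i in range(0, len(values), 3)]


def save_move(path, frames, step=3):
    """Write the sampled frames to `path`; return how many were written."""
    lines = [frame_to_line(f) for f in sample_every_third(frames, step)]
    with open(path, "w") as handle:
        handle.write("\n".join(lines))
    return len(lines)


def load_move(path):
    """Read a saved move back as a list of frames, in capture order."""
    with open(path) as handle:
        return [line_to_frame(line) for line in handle.read().splitlines() if line]
```

Because each line is one frame, the order of the lines preserves the order of the movement, which is what playback needs.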

One issue that I am struggling with is trying to scale the screen better - I realize that, even though I get to test the UI using the monitors in the lab, a typical user only has their laptop screen and I want this to be as visually accessible as possible. I want the user to not have to read from the distance that they need to stand from the Kinect - instructions need to be provided before the user starts dancing. I want everything locked in to fixed dimensions, so I need to determine what size the screen will be. XAML files are definitely an interesting challenge in trying to create a UI.

This week has been focused on small details within the program that will make the whole thing run better. One major advancement from this week was getting the structure to store the points for the dance moves: List<SkeletonPoint>. I have experimented with this list structure to see how much data it can store and it will last through (at least) a 45 second dance move (I would think that a user wouldn't need more than 15 seconds, but it's good to be prepared). This will be huge for the project and I intend on being able to work with recording dance moves this week. The goal is to record a sequence for all 13 joints I am tracking (which is reduced from 20) and then work with the playback. I have a pseudo-code/loose design for how I want to achieve the playback (via creating my own frames and displaying them).
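One way the "list per joint, then build my own frames" idea could look is sketched below in Python, with plain `(x, y, z)` tuples standing in for the C# `SkeletonPoint`. This is only an illustration of the data layout, not the project's actual design, and the function names are hypothetical.

```python
JOINT_COUNT = 13  # joints tracked in the project (reduced from the Kinect's 20)


def new_recording(joint_count=JOINT_COUNT):
    """One list of (x, y, z) points per joint, held in an outer list."""
    return [[] for _ in range(joint_count)]


def record_frame(recording, joints):
    """Append the current position of every joint to its own list."""
    if len(joints) != len(recording):
        raise ValueError("expected one point per tracked joint")
    for track, point in zip(recording, joints):
        track.append(point)


def playback_frames(recording):
    """Yield frames in capture order, each a list with one point per joint."""
    for points in zip(*recording):
        yield list(points)
```

Keeping the per-joint lists inside one outer list means recording and playback are each a single loop rather than 13 near-identical blocks of code.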

Another huge success from this week was getting two circles to appear on the screen that change color when a user places their hands in these circles. I am still working out the exact coordinates needed, but I am using this idea to allow a user to tell the computer that they have started the move and line back up with these points later to end the move. This idea will work into this week's goal as I try to fix WHEN the data for each joint is stored. There are, of course, random challenges that I need to confront and will hopefully be solving within the next two weeks. The biggest challenge is fixing the size of the .exe window in Visual Studio so that I don't have to alter the center point for every element in my UI (which is proving to be difficult with the ellipses). The other challenge is the distance from the Kinect that a user needs to be in an ideal situation: I don't want them to be further than 6 feet from the Kinect, but that skeleton is rather large and close to the boundary lines of the application.

This past week has been about experimenting with the Kinect more and working towards coordinate tracking. I have a better grasp of how the code from the Developer Toolkit works, which is great since I am using its code for extracting data from the sensor and seeing how it uses that data to construct a skeletal figure. I will be meeting with Dr. McVey this week to address the biggest concern with recreating these dance moves: storing all of this data. What I have found is that the Kinect tracks each of the 20 joints of the human body at a rate of 30 frames per second, with each joint being processed in a 3-coordinate space. This results in thousands of data points that may need to be recorded for a dance move to be learned, with the order of each of these points needing to be maintained.
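To put numbers on "thousands of data points," here is a quick back-of-the-envelope calculation in Python using the figures from this blog: 20 joints, 3 coordinates each, 30 frames per second, plus the reduced setup of 13 joints with every third frame kept. The function name is just for illustration.

```python
def values_recorded(seconds, joints=20, fps=30, coords=3, keep_every=1):
    """Count the coordinate values stored for a move of the given length."""
    frames = (seconds * fps) // keep_every
    return frames * joints * coords


print(values_recorded(1))                              # 1800 values per second
print(values_recorded(15))                             # 27000 for a 15 s move
print(values_recorded(15, joints=13, keep_every=3))    # 5850 with the reduced setup
print(values_recorded(45, joints=13, keep_every=3))    # 17550 for a 45 s move
```

Dropping to 13 joints and every third frame cuts the stored data to roughly a fifth of the full stream.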

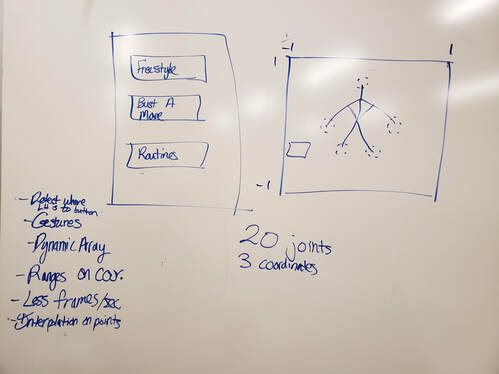

During the mini poster/white board presentations this week, I raised this concern and received substantial feedback on how I may go about handling this issue (including a queue, a dynamic array, and interpolation). I also raised concerns about the fact that I was trying to make the hands on the skeletal figure act as "cursors" within the game. It was brought to my attention (and it is bizarre to me how I completely ignored this option) that, instead of going through that extensive process (which involves a minimum of 6 classes that I would need to create in a separate library), I could just track when a joint's position is within the area of a button to select an option. Overall, this mini presentation was very helpful. Below I've attached a photo (left) of my whiteboard (plus notes) with the ideas that I received. I have also started constructing my UI (right) for this project. It's not done, but this is the basis for the home screen of the program.

This week has been focused on getting the Kinect up and running. After getting the Kinect for Windows (not XBox, which was a whole 'nother situation), I was able to set up the Kinect for my computer. One of the biggest issues was realizing that I had a Kinect Version 1, not Version 2, which resulted in me downloading the wrong drivers, which was fun. After it was all set up, I was able to use the Kinect Developer Toolkit Browser, which had the SkeletonBasics WPF app that I could download and run. This app can be run through Visual Studio and I had access to all of the code used to create this app. I am currently experimenting with this code in an attempt to understand it. There were two skeletal tracking apps with the Developer Toolkit, with the first one being shaky and inaccurate while the second one is far more stable. Unfortunately, the worse skeletal tracker is in C++ (which I am familiar with) while the better tracker is in C# (which I am NOT familiar with). So, in addition to understanding the Kinect's code, I need to understand C#.

I have also been reading Beginning Kinect Programming with the Microsoft Kinect SDK by Jarrett Webb and James Ashley so that I can better understand the range of what the Kinect's skeletal tracking can do. The more I read, the more confident I feel in choosing the Kinect as the basis for this project. There's a lot of detail regarding the Skeleton and Joint classes as well as sample code. I am also realizing that my biggest hurdle for this project is getting the Kinect to save dance moves and play them back. I should be able to work with the Position property of the joints to do this, but storing this data will be a challenge. Currently, my focus is understanding how the coordinates are recorded for skeletal tracking. Once I understand that, I believe I will have a strong basis for moving onto the biggest hurdle. Below is what the Kinect Skeletal tracking looks like from the Developer Toolkit. The fact that I can access this is pretty incredible to me and puts me at a decent starting place for this project.

Over the past couple of days, I have been looking into more ways that a laptop camera could detect motion and attempt to follow specific objects (such as a hand) across the screen. There are a few options outside of the Kinect (though some of them were modeled after the Microsoft product), including OpenPose as well as non-open source projects like Skeletron that utilized a webcam, TensorFlow (the Google library), and the Unity game engine. There have also been smaller attempts at having a webcam track objects, but never a full body. OpenPose looks particularly promising, but difficult to set up. I would still like to experiment with the Kinect. Currently, I am having a hard time trying to determine where to start in terms of programming and setting up a system. I feel like the best area to start with is figuring out what I am allowed to/can use to track a body moving across the screen and emulate that in a stick figure animation (or something equivalent). Hopefully, I will be able to meet with Dr. McVey or Dr. Pankratz this week to determine the best course of action.

One of the first things I began doing for my project is trying to outline the very basic tasks required for Dance Like Me. This evolved into a list of questions/personal tasks, such as "How will the computer save dance moves it has already learned?", "Decide how player initialization will work. Wristbands? Skeletal frame to match up with?", and "Decide how motion detection will work - coordinates, matching frames, etc?" One of the biggest successes of the past week was learning that there exists a Kinect for Windows that can be programmed in Visual Studio. This is particularly useful for me because my project will share similarities with how the XBOX Kinect works and how a player is mimicked on screen.

I will be asking my instructors if we have a Kinect specifically for Windows so that I can begin working with it (assuming I am allowed to use the Kinect camera). I have been researching how the Kinect operates in order to initialize and detect motion. I have also researched how a laptop camera could do this, but I'm unsure how I could specifically capture movements and initialize based on this camera. In case I am able to use the Kinect and code in Visual Studio, I have begun researching how I could create graphics for an executable file (the Graphics class, if you can believe it). I am less concerned with tasks such as generating routines and creating a dance partner at the current moment. I would rather focus on the basic foundations of this program for the time being. |