Lab Bot (Crab Bot)

The goal of this project is to provide a way for a user to control the lab bot, either directly or indirectly. A user may want to drive the robot themselves, or provide the bot with a destination. The robot should be able to prevent itself from crashing into unexpected obstacles, and still complete its tasks.

Project Requirements:

-The bot should understand its environment and know the location of its contents, probably using a modified geo-mesh to map the lab layout. ✔

-Users can instruct the robot much like an RC vehicle. The bot responds as long as it is safe to do so. ✔

-Users direct the mascot to move toward a target while avoiding obstacles. ✔

-The lab robot should avoid obstacles that appear unexpectedly.

-Of course, many students will want to be the bot’s guide. The controller should be able to queue requests using an appropriate scheduler. ❌

-Potentially a camera ❌ (Unsure if able to fully implement)

-Investigate sensors and components that will help the bot achieve your goals. ✔

-The bot needs a name and a personality/attitude. ✔

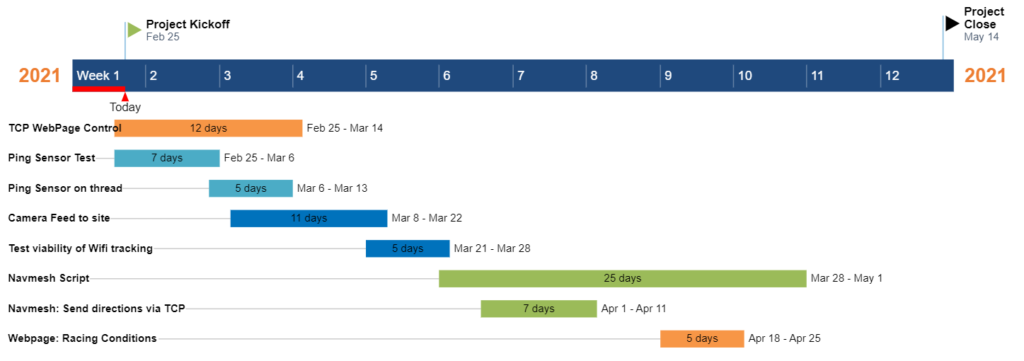

2/6/2021

After a few days of failing to send code or data to the bot over the USB cable, I consulted the Initial Setup Guide that Nathan Labott posted on his Lab Bot site. The guide details how to connect to the bot through the WX Wi-Fi module and send code and data back and forth that way. This worked, and I have set up initial hardcoded movement as well as direct control from the connected computer’s keyboard, thanks to a tutorial on the Parallax site. I have accomplished very little so far, but now that things are functional, I can move on to planning and developing ideas for the future of the project. For the rest of today, I just want to familiarize myself with the bot’s coding environment and included libraries, and with how data is sent.

Ideally, I would like control to be handled through some kind of web interface. In years past this was a basic website, but I have considered attaching it to a Discord server, a chatroom application similar to Skype. My final goal is to let users input movement commands for the bot and watch a live feed from it. Parallax sells a camera kit; I will need to speak with McVey to see whether SNC already has one, whether we could purchase one, or whether we should set up something of our own. Another requirement listed for the project is for the bot to be capable of ‘finding a target while avoiding fixed obstacles.’ The bot already has sonic sensors that I believe I can use for obstacle avoidance, and I saw a tutorial on Parallax’s website for having the bot path to an IR beacon. This may prove useful for that requirement.

2/21/2021

It has been two weeks since my last update, and I have been pretty busy. I have been chasing down some long-term design leads on outside-in tracking. My current plan is Wi-Fi-based tracking: I found a paper on tracking devices using signal strength from several access points. I am not sure this will work, so my backup is an external script combined with wheel-based tracking. That is not the best option, but it may have to do.
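Signal-strength tracking starts by turning an RSSI reading into a distance estimate. Below is a minimal sketch of that step, assuming the common log-distance path-loss model, which may not be the method the paper uses. The defaults `rssiAtOneMeter` and `pathLossExponent` are hypothetical calibration values that would have to be measured in the lab.

```javascript
// Hypothetical sketch: estimate distance (in meters) from a Wi-Fi RSSI reading
// with the log-distance path-loss model. Both defaults are assumed calibration
// values, not measurements from this project.
function rssiToDistance(rssiDbm, rssiAtOneMeter = -40, pathLossExponent = 2.0) {
  // A weaker (more negative) signal maps to a larger distance.
  return Math.pow(10, (rssiAtOneMeter - rssiDbm) / (10 * pathLossExponent));
}

console.log(rssiToDistance(-60)); // distance estimate for a -60 dBm reading
```

Distances from three or more access points could then be combined to estimate a position, though indoor reflections tend to make single readings noisy.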

On the manual control side, I have successfully gotten a webpage to host on the robot, with a slider that sets the bot’s speed from 0 to 64. This is the first step toward manual control from a website. In the future I would like to capture keyboard input while the page is open and handle race conditions.
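One way to keep slider input safe is to clamp every value to the 0 to 64 range and ignore updates that arrive out of order. This is only a sketch under assumptions: the sequence number `seq` is a hypothetical counter the page would attach to each update, and nothing here is the code that runs on the bot.

```javascript
const MAX_SPEED = 64; // top of the slider range

// Clamp any incoming value to an integer in 0..MAX_SPEED; junk input becomes 0.
function clampSpeed(value) {
  const n = Math.round(Number(value));
  if (Number.isNaN(n)) return 0;
  return Math.min(MAX_SPEED, Math.max(0, n));
}

// Keep only the newest speed command. `seq` is a hypothetical, increasing
// counter sent with each update so late arrivals can be discarded.
function createSpeedState() {
  let lastSeq = -1;
  let speed = 0;
  return {
    apply(seq, value) {
      if (seq <= lastSeq) return false; // stale or duplicate update: ignore it
      lastSeq = seq;
      speed = clampSpeed(value);
      return true;
    },
    get speed() {
      return speed;
    },
  };
}
```

With this shape, two browser tabs fighting over the slider cannot push the speed outside the allowed range, and an update delayed on the network cannot overwrite a newer one.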

Paper on Wifi Tracking:

https://dc.ewu.edu/cgi/viewcontent.cgi?article=1080&context=theses

2/25/2021

I looked into TCP connections over the bot’s Wi-Fi module. It is possible, but implementation will take more work. HTTP GET/POST doesn’t seem to work when connecting to a web server hosted on my laptop. I am going to look into writing the webpage and using TCP to send the movement instructions to the bot directly. This will take some time, since I am very unfamiliar with the languages this can be done in.

3/8/2021

I’ve been taking a break from working on TCP while Pankratz and McVey look into some solutions. In the meantime, I have been attempting to get the ping sensor to work and give a response. I have run into some issues: the drive functionality requires a special terminal to be opened, and I haven’t been able to get the ping sensor to give back good data on this terminal. PING works fine if the bot is stationary and running code unrelated to the drive functionality. I haven’t posted any updates since my last entry because I’ve had nothing to show, but hopefully that will change soon. I am tired of spinning my wheels on this. (Pun intended.)

3/28/2021

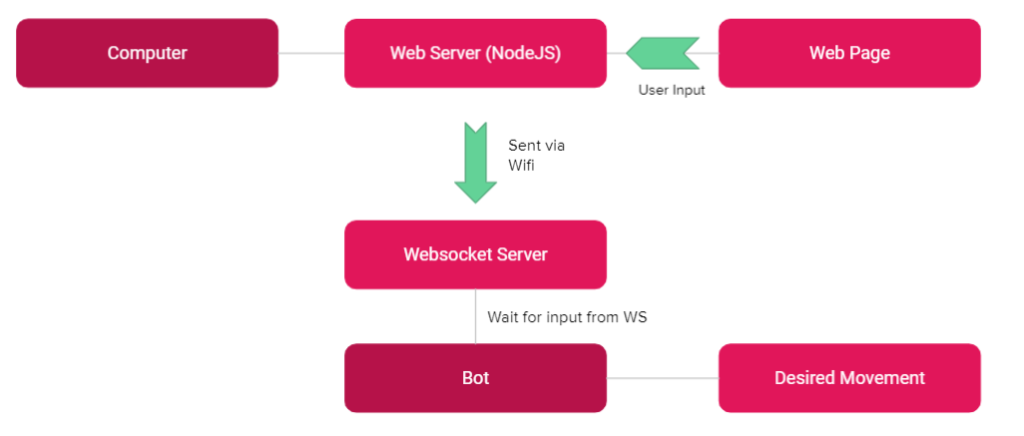

Progress, finally. I’ve settled on websockets as the communication mode between the bot and the external program that handles input for the bot. Unlike plain HTTP, websockets do not require a request/response exchange for every message: once the connection is open, the bot and the host can each send data independently, provided the other side is set up to receive it. Thanks to DCP for helping me get started with some setup.

I’ve gotten a very rudimentary websocket connection set up between the bot and the host code. Next up is to clean that up and make sure I fully understand it, and then move on to the autonomous mode. I am using JavaScript and Node.js for this, which has proved challenging to learn.

3/30/2021

Updated progress: I was finally able to get the program running on Node.js, hosted on my laptop, making a websocket connection to the bot and sending it movement instructions. I ran into lots of dead ends and lots of errors, but it works now. Next up is to clean up the HTML page that the user sees and to make the speed slider work. I also have an issue where, if the server and bot are not started at the same time, the websocket handshake fails and no further connection is possible. I need to add checks that attempt to reestablish the connection. Once that is complete, I will move on to getting the autonomous mode running.
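A common way to reestablish a connection is to retry with an increasing delay. The sketch below assumes a hypothetical async `connect` function that resolves with an open socket or rejects when the handshake fails. The delay values are illustrative, and this is not the project’s actual code.

```javascript
// Delay before retry number `attempt` (0-based): doubles each time, capped at maxMs.
function backoffDelayMs(attempt, baseMs = 500, maxMs = 8000) {
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

// Keep calling `connect` until it succeeds or the attempts run out.
// `connect` is a hypothetical async function supplied by the caller.
async function connectWithRetry(connect, maxAttempts = 10) {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    try {
      return await connect(); // success: hand back whatever connect resolved with
    } catch (err) {
      if (attempt === maxAttempts - 1) throw err; // out of attempts: surface the error
      await new Promise((resolve) => setTimeout(resolve, backoffDelayMs(attempt)));
    }
  }
}
```

With a loop like this on the host, the start order of server and bot matters less, because whichever side comes up late is picked up on a later attempt.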

4/19/2021

It’s been quite a while since my last update, but I now have progress to show for it. After many long nights, the robot can successfully do automated, canned movement. Given a relative x, y position, the robot rotates to point the right way and then moves to the destination. I can tell you about 500 ways not to record position and orientation accurately, and 1 way to do it correctly. I am currently working on the algorithm that lets the robot redirect around obstacles; right now the bot attempts to move around the object and then freaks out. I suspect a recursion issue. With 10 days until presentations, I must solve this issue, attach an extra sensor, and try to get a speaker working.
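The rotate-then-drive step comes down to a little trigonometry. This is a minimal sketch, assuming the heading is measured in radians counterclockwise from the +x axis and that the target offset is given in that same frame. The function names are hypothetical and this is not the code running on the bot.

```javascript
// Wrap an angle into the range (-PI, PI] so the bot takes the shorter turn.
function normalizeAngle(a) {
  const twoPi = 2 * Math.PI;
  let r = a % twoPi;
  if (r > Math.PI) r -= twoPi;
  if (r <= -Math.PI) r += twoPi;
  return r;
}

// Given a target offset (dx, dy) and the current heading, return how far to
// turn (radians, positive = counterclockwise) and how far to drive.
function planMove(dx, dy, headingRad = 0) {
  const distance = Math.hypot(dx, dy);
  // With no distance to cover there is nothing to aim at, so do not turn.
  const turn = distance === 0 ? 0 : normalizeAngle(Math.atan2(dy, dx) - headingRad);
  return { turn, distance };
}

console.log(planMove(3, 4)); // turn toward the point (3, 4), then drive 5 units
```

Using `Math.atan2` rather than a plain arctangent keeps the sign of both coordinates, so targets behind the bot or at negative y resolve to the correct quadrant.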

4/22/2021

Good progress since the last update. I discovered and fixed a lot of bugs in the automated system. The redirection algorithm and an accuracy algorithm are both in place, so the bot stops adjusting once it gets close enough, since the wheels allow only limited angle accuracy. While implementing this, I found a bug somewhere in the automation function: the bot freaks out when it goes into the negative y range. Currently, this stops the redirection from working fully. The bot can avoid obstacles it comes across; it just cannot continue to where it was trying to go.

I would like to avoid all of this, but for the bot to resume its travel to a previously declared position, it needs to know where it is relative to things. Hopefully I will have this sorted out in the next day or two.
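One way to let the bot resume after a detour is dead reckoning: update an estimated pose after every turn and every drive, then recompute the vector to the original target. The sketch below assumes ideal turns and straight-line moves with no wheel slip. The names and the default `tolerance` are hypothetical, and this is not the project’s implementation.

```javascript
// Pose estimated by dead reckoning: x and y in the target's units, heading in radians.
function createPose() {
  return { x: 0, y: 0, heading: 0 };
}

// Each update returns a new pose rather than mutating the old one.
function turned(pose, angleRad) {
  return { x: pose.x, y: pose.y, heading: pose.heading + angleRad };
}

function advanced(pose, distance) {
  return {
    x: pose.x + distance * Math.cos(pose.heading),
    y: pose.y + distance * Math.sin(pose.heading),
    heading: pose.heading,
  };
}

// Offset still to travel, and whether the bot is already close enough to stop.
function remaining(pose, target, tolerance = 5) {
  const dx = target.x - pose.x;
  const dy = target.y - pose.y;
  const distance = Math.hypot(dx, dy);
  return { dx, dy, distance, arrived: distance <= tolerance };
}

// Example: drive 40, sidestep an obstacle by 30, then drive 60 more.
let pose = createPose();
pose = advanced(pose, 40);
pose = advanced(turned(pose, Math.PI / 2), 30);
pose = advanced(turned(pose, -Math.PI / 2), 60);
console.log(remaining(pose, { x: 100, y: 0 })); // the sidestep still has to be undone
```

The `tolerance` check plays the role of a close-enough rule: once the remaining distance drops below it, the bot stops correcting instead of chasing an angle the wheels cannot resolve.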

4/23/2021

The redirection algorithm is fully functional and in place. Some bad runs still occur (I assume because input data is lost over the web connection), but it does work. I will include a video of it working in my presentation, in case the system decides not to work on presentation day.

I also got the Emic 2 Text-to-Speech module working, which was no small task since there was no usable setup documentation. The planned uses for this module are:

- Audio to play when obstacle detected

- Pre-set phrases that a user can have the bot say on command

- Possibly a text box for users to send text
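The pre-set phrases and the free-text box could share one helper that builds the command string for the speech module. This sketch assumes the Emic 2’s serial protocol takes `S` followed by the text and a line terminator, with a 1023-character message limit; check that against the module’s documentation. The phrase table is hypothetical, and sending the bytes over serial is left out.

```javascript
// Hypothetical phrase table; the keys and wording are placeholders.
const PHRASES = new Map([
  ["obstacle", "Obstacle detected."],
  ["greeting", "Hello, I am Crab Bot."],
]);

const EMIC_MAX_CHARS = 1023; // assumed message limit for a single speak command

// Build a speak command: 'S' + text + newline (assumed Emic 2 format).
// Embedded line breaks are flattened so they cannot end the command early.
function emicSay(text) {
  const clean = String(text).replace(/[\r\n]+/g, " ").trim().slice(0, EMIC_MAX_CHARS);
  return "S" + clean + "\n";
}

// Look up a pre-set phrase; returns null for an unknown key.
function commandFor(key) {
  const phrase = PHRASES.get(key);
  return phrase === undefined ? null : emicSay(phrase);
}
```

Text typed by users would go through `emicSay` directly, so it gets the same length limit and line-break cleanup as the pre-set phrases.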