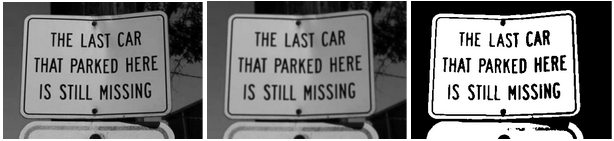

The picture above shows the first step I am taking in reading text out of images: image sanitization, so that the algorithm responsible for reading the text has the best chance of reading it. From what I have found, this is generally done the same way. The image is first converted to greyscale, then blurred, and finally thresholded. Greyscaling is probably the minimum that must be done, since it removes all of the color possibilities and limits each pixel to black, white, or some shade of grey in between. The blur smooths out small specks of noise so that they do not survive thresholding as stray black or white dots. Another very important step is thresholding, which compares each pixel against a threshold value: if the pixel value is greater, the pixel becomes pure white; otherwise it becomes pitch black. This is another good step, since the algorithm now only needs to concentrate on two colors instead of a gradient of colors.
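The three steps above can be sketched in plain NumPy to show what each one does to the pixel values. This is a minimal illustration, not the code used in this project: it assumes the image is a `uint8` array in OpenCV's BGR channel order, swaps a simple box blur in for the Gaussian blur that is common in these pipelines, and uses a hypothetical fixed threshold of 127. In OpenCV the same steps are `cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)`, `cv2.GaussianBlur(gray, (5, 5), 0)` and `cv2.threshold(blurred, 127, 255, cv2.THRESH_BINARY)`, the last of which returns a `(threshold, image)` tuple.

```python
import numpy as np


def to_grey(bgr: np.ndarray) -> np.ndarray:
    """Weighted sum of the B, G, R channels using the BT.601 luma weights."""
    b = bgr[..., 0].astype(np.float64)
    g = bgr[..., 1].astype(np.float64)
    r = bgr[..., 2].astype(np.float64)
    grey = 0.114 * b + 0.587 * g + 0.299 * r
    return np.clip(np.rint(grey), 0, 255).astype(np.uint8)


def box_blur(grey: np.ndarray, k: int = 3) -> np.ndarray:
    """Average each pixel with its k x k neighbourhood (k odd; edges replicated).

    A box blur is used here instead of a Gaussian blur to keep the sketch short.
    """
    pad = k // 2
    padded = np.pad(grey.astype(np.float64), pad, mode="edge")
    out = np.zeros(grey.shape, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + grey.shape[0], dx:dx + grey.shape[1]]
    return np.rint(out / (k * k)).astype(np.uint8)


def threshold(grey: np.ndarray, t: int = 127) -> np.ndarray:
    """Pixels greater than t become 255 (white); all others become 0 (black)."""
    return np.where(grey > t, 255, 0).astype(np.uint8)


def preprocess(bgr: np.ndarray, k: int = 3, t: int = 127) -> np.ndarray:
    """Greyscale, then blur, then threshold, in that order."""
    return threshold(box_blur(to_grey(bgr), k), t)
```

Passing `cv2.THRESH_BINARY + cv2.THRESH_OTSU` to `cv2.threshold` lets OpenCV choose the threshold value automatically instead of relying on a hand-picked constant.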

After getting the data sanitization portion of the code completed, I started looking into ways to crop the individual sign out of the image as a whole. This is currently working pretty well, but I need to remove the box that surrounds the whole image, since it does no good. I am still working on getting Tesseract installed so that I can test how well the text detection works with OpenCV, or whether it works best on its own. Once text detection is working on my computer, I will start figuring out how to make this run in an Android application.
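One way to drop the box that surrounds the whole image is to filter bounding boxes by area before cropping. The sketch below assumes boxes in the `(x, y, w, h)` layout that `cv2.boundingRect` returns (for example, one per contour from `cv2.findContours`). The 90% cutoff, the choice of the largest remaining box as the sign, and the helper names are all hypothetical and would need tuning against real images.

```python
from typing import List, Optional, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (x, y, w, h), same layout as cv2.boundingRect


def drop_frame_boxes(boxes: List[Box], img_w: int, img_h: int,
                     max_fraction: float = 0.9) -> List[Box]:
    """Discard boxes covering more than max_fraction of the image area."""
    limit = max_fraction * img_w * img_h
    return [b for b in boxes if b[2] * b[3] <= limit]


def largest_box(boxes: List[Box]) -> Optional[Box]:
    """Assume the biggest remaining box is the sign; None if nothing is left."""
    return max(boxes, key=lambda b: b[2] * b[3]) if boxes else None


def crop(image: np.ndarray, box: Box) -> np.ndarray:
    """Slice the box out of an image array (rows are y, columns are x)."""
    x, y, w, h = box
    return image[y:y + h, x:x + w]
```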

The picture above shows the current capabilities of my project. It can detect where text may be located within a picture, and it then draws a box around each region that it is at least 60 percent confident is text. The second function of my project is to output the text that it read to the console. The next step I will take is getting it to function smoothly with video, since there is a decent amount of processing to be done on each frame, and testing how well it actually functions with different test cases.
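The 60 percent cutoff can be applied to the per-word results that `pytesseract.image_to_data(img, output_type=pytesseract.Output.DICT)` returns: a dictionary of parallel lists that includes `text`, `conf`, `left`, `top`, `width` and `height`. This sketch assumes that input format and only does the filtering; the function name is hypothetical. The `conf` values are passed through `float()` because they may arrive as strings or numbers depending on the pytesseract version, and rows that are not words carry a confidence of `-1`.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (left, top, width, height)


def confident_words(data: Dict[str, Sequence],
                    min_conf: float = 60.0) -> List[Tuple[str, Box]]:
    """Keep non-empty words whose Tesseract confidence is at least min_conf."""
    results = []
    for i, text in enumerate(data["text"]):
        # conf may be a str or a number depending on the pytesseract version;
        # block/line rows use -1, so they never pass the cutoff.
        conf = float(data["conf"][i])
        if str(text).strip() and conf >= min_conf:
            box = (int(data["left"][i]), int(data["top"][i]),
                   int(data["width"][i]), int(data["height"][i]))
            results.append((str(text), box))
    return results
```

Each returned box could then be drawn with `cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 2)`, and the words joined with spaces before printing them to the console.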

This week and at the end of last week, I have been working on a user interface for opening pictures and applying the OCR algorithm to the opened picture. I used the Tkinter library in Python to create this interface, but I didn't realize that an image opened this way would be stored in a different format from the one I had previously been using. After some research, I found that I needed to convert the data into an array to apply my algorithm to it. Once my algorithm has run on the picture, the result needs to be converted back so that it can be displayed using Tkinter. Another issue I ran into was color channel order: OpenCV works in BGR, while images opened and displayed through Tkinter use RGB. This was easy to fix using a built-in function. The slider bar at the bottom lets the user adjust the confidence percentage used by the OCR algorithm. I am still trying to get it to work, but I want to check whether Tkinter has some sort of listener, similar to Android's click listeners, so that the results update automatically as the value is adjusted.
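The conversions described above can be sketched with NumPy alone. This assumes the picture was opened with Pillow, where `np.asarray(pil_image)` yields an RGB array, and `PIL.Image.fromarray(...)` followed by `PIL.ImageTk.PhotoImage(image)` turns a result back into something Tkinter can display. OpenCV's built-in equivalent of the channel swap is `cv2.cvtColor(img, cv2.COLOR_RGB2BGR)`. The helper names and the `process_bgr` callback are hypothetical.

```python
from typing import Callable

import numpy as np


def swap_red_blue(image: np.ndarray) -> np.ndarray:
    """Reverse the last (channel) axis: RGB -> BGR, or BGR -> RGB."""
    return np.ascontiguousarray(image[..., ::-1])


def run_on_rgb(rgb: np.ndarray,
               process_bgr: Callable[[np.ndarray], np.ndarray]) -> np.ndarray:
    """Convert a Pillow-style RGB array to BGR, run an OpenCV-style step,
    and convert a colour result back to RGB for display."""
    bgr = swap_red_blue(rgb)
    result = process_bgr(bgr)
    # Greyscale or thresholded results have no channel axis to swap.
    return swap_red_blue(result) if result.ndim == 3 else result
```

For the slider, Tkinter's `Scale` widget accepts a `command=` callback that is invoked with the new value whenever the slider moves, which is the closest equivalent to an Android listener.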

Since my last post, I have gotten the confidence slider to work in real time, so it adjusts the confidence threshold used by the OCR algorithm as it moves. Another improvement I needed to make was to scale the images uniformly while keeping the aspect ratio the same, which can be accomplished with some basic algebra. My next steps are to let the user choose whether to use the camera or open an image, and to look into improving the speed of my program, since it is a bit sluggish when the confidence percentage changes.
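The algebra for uniform scaling is to keep the width-to-height ratio fixed, w'/h' = w/h, so choosing the new height h' gives the new width w' = w · h' / h. A small sketch, where the function name and the example target height of 480 are hypothetical:

```python
from typing import Tuple


def scaled_size(width: int, height: int, target_height: int) -> Tuple[int, int]:
    """Return (new_width, target_height) with the original aspect ratio kept."""
    if width <= 0 or height <= 0 or target_height <= 0:
        raise ValueError("dimensions must be positive")
    # w' / h' = w / h  =>  w' = w * h' / h, rounded to a whole pixel.
    new_width = max(1, round(width * target_height / height))
    return new_width, target_height


print(scaled_size(1920, 1080, 480))  # (853, 480)
```

The resulting pair can be passed to `cv2.resize(img, (new_w, new_h))`; note that OpenCV takes the size as `(width, height)`, the reverse of NumPy's `(rows, columns)` shape order.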

The next step I made in my program was adding a text box that displays the text Tesseract found at the given confidence percentage. Another improvement I made was uniformly scaling images after importing them: I give them a set height, and the width changes depending on the aspect ratio of the image. An additional benefit I found in doing this is an increase in processing speed, since there is less data to process. The final improvement I made was adding an inversion button that inverts the colors, to test whether the results can be improved. I still need to implement a button that switches between a live webcam feed and opening images. I would also like to experiment with lowering the frame rate and decreasing the resolution of the video feed.
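Inversion and the frame-rate and resolution reductions mentioned above are each a line or two of NumPy or plain Python. This is a hedged sketch with hypothetical helper names: inversion assumes 8-bit pixels (OpenCV's `cv2.bitwise_not` does the same thing), downsampling simply keeps every `step`-th pixel rather than averaging, and frame skipping passes on only every `n`-th frame for OCR.

```python
from typing import Iterable, Iterator, TypeVar

import numpy as np

T = TypeVar("T")


def invert(image: np.ndarray) -> np.ndarray:
    """Flip 8-bit pixel values: dark text on light becomes light on dark."""
    return 255 - image


def downsample(image: np.ndarray, step: int = 2) -> np.ndarray:
    """Keep every step-th row and column (crude; cv2.INTER_AREA averages instead)."""
    return image[::step, ::step]


def every_nth(frames: Iterable[T], n: int) -> Iterator[T]:
    """Yield only every n-th frame so the OCR step runs less often."""
    for i, frame in enumerate(frames):
        if i % n == 0:
            yield frame
```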

This week I worked on integrating the camera into my project. It went more smoothly than I expected, since I found some information on Tkinter that made the process easier. I am now able to switch between the camera and opened photos on the fly just by clicking a button. The camera view also already displays what it has read, since it makes the same function call, only at a set interval of time. I also fixed the invert button so that inversion stays on until the button is pressed again, and the open image button is now disabled while the camera is in use to prevent user error. Something I am thinking of implementing next is a way to essentially take a picture or freeze the camera, since the only way to do that now is to switch back to photos and cancel opening a photo.
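Running the same OCR call at a set interval maps onto Tkinter's `widget.after(ms, callback)`, which schedules a callback once; re-arming it at the end of each tick produces a loop. The sketch below keeps the scheduling function as a parameter so it can be exercised without a window (in the app it would be `root.after`), and models the invert toggle and the disabled open button as plain state. All names here are hypothetical, not the project's actual code.

```python
from typing import Any, Callable


class ViewerState:
    """Hypothetical model of the UI state: active source and invert toggle."""

    def __init__(self) -> None:
        self.mode = "image"   # "image" or "camera"
        self.invert = False   # stays on until toggled again

    @property
    def open_button_enabled(self) -> bool:
        # Opening a file only makes sense when the camera is not in use.
        return self.mode == "image"

    def toggle_invert(self) -> bool:
        self.invert = not self.invert
        return self.invert

    def switch_mode(self) -> str:
        self.mode = "camera" if self.mode == "image" else "image"
        return self.mode


def poll(schedule: Callable[[int, Callable[[], None]], Any],
         grab_frame: Callable[[], Any],
         handle: Callable[[Any], None],
         interval_ms: int = 100) -> None:
    """Process one frame now, then ask `schedule` to repeat after interval_ms."""
    def tick() -> None:
        handle(grab_frame())
        schedule(interval_ms, tick)
    tick()
```

In Tkinter the button state could then follow the model with `open_button.config(state="normal" if state.open_button_enabled else "disabled")`.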

I implemented the ability to search the text found in the image on Google, and also made it possible to freeze a single frame in Live-Video mode so that the user does not have to stay completely still while using the program. I also added a pop-up box that appears when the mouse hovers over certain parts of the application, describing what each part is or does, and I renamed some of the buttons to make them clearer for the user. The last feature I am considering is a license plate reader that starts at the center of the image and gradually grows the search area until the result matches the format of a license plate.
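The planned license plate reader could be sketched as a loop over centered crops of growing size that stops at the first OCR result matching a plate pattern. This is an assumption-heavy sketch: `read_text` stands in for whatever OCR call is used (for example `pytesseract.image_to_string`), the regular expression encodes a hypothetical three-letters-then-four-digits format that would need to match the plates actually being read, and the starting size and number of steps are arbitrary.

```python
import re
from typing import Callable, Optional, Tuple

import numpy as np

# Hypothetical plate format: three letters, optional space or dash, four digits.
PLATE_PATTERN = re.compile(r"[A-Z]{3}[ -]?\d{4}")


def centered_box(img_w: int, img_h: int, fraction: float) -> Tuple[int, int, int, int]:
    """(x, y, w, h) of a box centered in the image, `fraction` of its size."""
    w = max(1, int(img_w * fraction))
    h = max(1, int(img_h * fraction))
    return (img_w - w) // 2, (img_h - h) // 2, w, h


def find_plate(image: np.ndarray, read_text: Callable[[np.ndarray], str],
               start: float = 0.2, steps: int = 8) -> Optional[str]:
    """Grow a centered crop from `start` to the full image in `steps` increments,
    returning the first OCR result that fully matches PLATE_PATTERN."""
    img_h, img_w = image.shape[:2]
    for i in range(steps + 1):
        fraction = start + (1.0 - start) * i / steps
        x, y, w, h = centered_box(img_w, img_h, fraction)
        text = read_text(image[y:y + h, x:x + w]).strip().upper()
        if PLATE_PATTERN.fullmatch(text):
            return text
    return None
```

The Google search feature could be built from the same OCR text with `urllib.parse.quote_plus` to encode the query and `webbrowser.open` to launch it.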