
It's been a crazy couple of weeks, guys.

So, I did my presentation about a week and a half ago now! It went pretty well, and I feel prepared for my defense on Monday. However, if you are a current senior capstone student reading this blog, I want to really, really hammer this message in... MAKE BACKUPS, AND BE PREPARED FOR TECHNOLOGY FAILURES. EVEN IN THE LAST WEEK.

The laptop that I had been doing my capstone on for the entire semester thought that a good parting present for me would be to crash its hard drive. It can no longer boot up, and it can't find the disk to reinstall the OS, so I can't do anything to save it right now. It's going to need a new part once I go home. However, I had just uploaded my source code to Dropbox and my website. All that was missing was some recent documentation that I had made which, thankfully, I had just printed. I also happen to have a second laptop (which my friends had always made fun of me for, but I turned out to be the real winner here). Unintentionally, I will be showing how nicely my application works on multiple platforms (Mac and Windows), but I will be just fine.

Just be prepared. If that's the message that I leave you with from this entire capstone experience, I think that it is a good one. You never truly know when technology is going to bite the dust, and it's good to have backups for backups. Even if you don't have a second laptop on hand like I do, be ready to have to use another one. Be familiar with how to set up your project and be confident in that process.

With that, I think I can close out this blog. I've enjoyed getting to blog for a semester, and hopefully my posts provided a bit of insight into how I proceeded through this project. Take it step by step, and you can get something accomplished!


It's here. It's hard to believe that the week that I've been waiting for and dreading since the end of my freshman year when I realized these projects exist has finally arrived. The presentation is done, and ready to go. I'm actually presenting twice this week: first on Wednesday for the capstone and again on Thursday for my team at work. I don't get terribly nervous about presenting, so that part is not a huge concern to me. The fact that my project needs to be done, though, is. Honestly, my project is done, aside from making my front page look just a little nicer. That'll just be some CSS. I got the actual UI bugs worked out and the algorithm, though simple and not as refined as I would like, is done and in place. I have plenty of things I can talk about at the presentation for how that can be further refined, and I think that my project could be a good stepping off point for someone in the future. If you're reading this for your own capstone project, trust me, I have suggestions for how to improve this.

I need to work on documenting this week, as well as possibly make a start on getting the files I need for my binder together. I will also need to acquire a flash drive (and not lose it like I do so well). Though I am in a good spot with documenting, I do wish that I had documented while I was writing the code. That is one of my weaknesses, and I always regret it at the end of a long project... don't be like me, kids.

I'm excited to show off what I have learned and accomplished this semester, as well as talk about the things that can still be done with my project. I'm also looking forward to hearing about everyone else's projects. I'm going to wrap up this blog post here because I'm about ready to crash. This week is going to be insanely busy. I am hoping to make it through roughly as sane as I am now.

It sure is panic-inducing to be a week and a half away from your presentation and realize that the algorithm you had might not work very well. After Dr. McVey mentioned to me that it was possible the factor was causing overflow in the output wav file, I began to realize that the pitch shifts the algorithm called for were too dramatic. This was causing the distortion and the "screams". So, it was back to the drawing board for something a bit more subtle.

Since I'm home for Easter, I decided to discuss my project with my dad again. He suggested another idea for an algorithm. Rather than try to match the voice to the pitch of the music file exactly, he recommended finding the average pitch of the whole music file and determining a scale of factors around that. That way, I wouldn't be pitching by extreme factors that distort the voice horribly, but the voice would still follow the flow of the music. I really like the idea, and I already have a basic proof of concept down if all else fails. I have lots of proofs of concept, with varying degrees of success. This just happens to be my favorite one.

I'm hoping to have the algorithm solid by Thursday. That's going to be my "stop coding, get cleaning and documenting" cut-off point. Some small UI fixes need to be done, but I'm not terribly concerned about those. I worked on my presentation this weekend, so that should just need some editing work in about a week. Most of my time this week will be devoted to getting this new algorithm in place, documenting code, and preparing some examples of all my tests that people can enjoy at the demonstration. This project has definitely been through a few stages. I swear not all of the wav files I demo will sound like screeching.

Hoo, boy. If you want to hear some demonic sounding .wav files that will make you question what I have been doing for the past week, I have some stuff you should listen to. I was hoping to have the first version of my pitching algorithm successfully implemented by today, but if there is one constant in computer science it is that roadblocks are everywhere. What I thought would be a simple joining of the pieces turned out to be a bit of a nightmare.

I believe what is going wrong is that I'm calculating the pitching factor incorrectly. It appears that it is not as simple as dividing the music pitch by the voice pitch and hoping that what you get will actually change the voice pitch to the music pitch. Frequencies do not work like that. I need to do a bit more research to figure out how exactly I should be calculating this factor.

It also seems that my append function is leaving out some of the wav files (about half). In the transition to getting this to work with a temporary directory and such, I'm guessing that I messed up some code somewhere in there. As with the previous issue I had with this method (boy, this method is causing me some pain), I imagine some quick debugging will give me insight into what is happening.

So, I'm definitely not quite where I wanted to be. However, when I was debugging earlier with constant factors and factors that changed (but weren't determined by an incorrect calculation), everything else seemed to be going right. I believe that these remaining two issues are the hump I need to get over before I can come up with some fun stuff to play around with the algorithm and get it ready to demo. I'm going to continue to work on this tonight and see if I can learn anything about this mystical factor. If all else fails, I do have an app that is assured to make your computer seem 100% possessed. I'll take the small victories.

It's crazy to think that in just a little over a month, classes will be done! That means that there's less than a month left for me to finish this project! I've been thankful these past couple weeks for the work that I did at the beginning of the semester. I am fairly content with where my project is at right now. The pieces are all in place for me to attempt a first shot at pitching a voice file to a wave file, though we will see if this actually works. Once I try doing it, though, I can debug. My goal is to have a successful first attempt at the algorithm done by the end of the week.

Once I have that first attempt figured out, I do want to work on a better second attempt. Having second-long .wav files versus even quarter-second-long .wav files seems really coarse, and it definitely won't sound amazing. Honestly, though, I may wait to do that until after I fix up the UI on my file loading screen (which should not take long, but I want it to be higher priority) and clean up some of the code around my first attempt (there's some messy stuff in there). I'm hoping that I'll be able to get two attempts in there so I can have a pitched voice file that sounds a bit better. I think it is feasible, I just can't let myself slide. Ideally, it would be nice to get some editor functions working just to make the editor a little more fun, but that is definitely low priority. I'm aiming for something that works, and I think I'm just about there!

This week I made some progress on the beginnings of pitching a voice file to a music file. I'm starting with a practice Java program, so it's not incorporated into the main project quite yet. However, I now have a program that is able to iterate through a directory, find all of the .wav files, and append them together. It isn't working quite right yet, as it seems to compound the .wav files (for example, it will add clip 1, then clips 1 and 2, then clips 1, 2, and 3) and ultimately I get a 40 minute long file rather than something that should be about 10 minutes. I haven't done much debugging on this yet, though, so I'm hoping it will be easy to fix once I actually look into it.
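As an illustration of the append step described above, here is a minimal sketch using only the standard `javax.sound.sampled` API. It is not the project's actual code: the class name `WavAppender` and method `appendAll` are made up for this example, and it assumes every clip is uncompressed PCM in the same format and that sorting file names gives the right clip order (for example, zero-padded names). One way to avoid the kind of compounding described above is to read each clip exactly once, write the output exactly once, and keep the output file itself out of the list of inputs.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import javax.sound.sampled.AudioFileFormat;
import javax.sound.sampled.AudioFormat;
import javax.sound.sampled.AudioInputStream;
import javax.sound.sampled.AudioSystem;
import javax.sound.sampled.UnsupportedAudioFileException;

public class WavAppender {

    /** Joins every .wav file in dir (sorted by name) into a single output file. */
    public static void appendAll(Path dir, Path output)
            throws IOException, UnsupportedAudioFileException {
        Path outAbs = output.toAbsolutePath().normalize();
        List<Path> clips;
        try (Stream<Path> files = Files.list(dir)) {
            clips = files
                    .filter(p -> p.toString().toLowerCase().endsWith(".wav"))
                    // Skip a previous output so it is never appended to itself.
                    .filter(p -> !p.toAbsolutePath().normalize().equals(outAbs))
                    .sorted()
                    .collect(Collectors.toList());
        }
        if (clips.isEmpty()) {
            throw new IllegalArgumentException("No .wav files found in " + dir);
        }

        AudioFormat format = null;
        ByteArrayOutputStream pcm = new ByteArrayOutputStream();
        for (Path clip : clips) {
            try (AudioInputStream in = AudioSystem.getAudioInputStream(clip.toFile())) {
                if (format == null) {
                    format = in.getFormat();
                } else if (!in.getFormat().matches(format)) {
                    throw new IllegalArgumentException("Format mismatch in " + clip);
                }
                // Copy this clip's raw samples once, in whole frames.
                byte[] buffer = new byte[format.getFrameSize() * 1024];
                int read;
                while ((read = in.read(buffer)) != -1) {
                    pcm.write(buffer, 0, read);
                }
            }
        }

        // Wrap the collected samples in one stream and write a single WAV file.
        byte[] data = pcm.toByteArray();
        long frames = data.length / format.getFrameSize();
        try (AudioInputStream joined =
                new AudioInputStream(new ByteArrayInputStream(data), format, frames)) {
            AudioSystem.write(joined, AudioFileFormat.Type.WAVE, output.toFile());
        }
    }
}
```

Holding all of the samples in memory keeps the sketch short and is reasonable for a file of about 10 minutes; a longer recording would call for streaming the clips to the output instead.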

I also made a method that will split a .wav file up into a bunch of second-long segments and place the files in a common directory, so I can later iterate through them and piece them back together. I will need to adjust my pitching process, as right now it samples at a different interval than 1 second, but this shouldn't be hard. I'm having a difficult time getting the Java to split the .wav file down into segments that are shorter than a second. Once I get a first attempt, I'll play around with this more... I'm honestly not terribly concerned at this point.

Overall, with a month out until presentations, I believe that I am in a good spot. At the very least, I will have an attempt at pitching a voice file to a music file and the UI fixed up. I'm hoping to have multiple attempts of pitching that will show varying degrees of success, because as of right now I have no clue what will work best. It's just cool to think that I'll have something done, whether it is pretty or not.

I didn't do a blog post last week because it was the start of spring break. Also, since I was getting ready to do a lot over spring break with my project, I figured that it would be best to wait and share a lot more updates afterwards! Thanks to some help from my dad, spring break was actually rather productive. This week I actually have wav files being uploaded with Java to a Tomcat server, and I am able to retrieve these files and pull them down into the playlist. It looks like I have done absolutely nothing. I really haven't progressed very far, have I? One can take a look at this and say, "well, Caitlin, you were already uploading files to your playlist. Why are you so excited that you're still doing that?"

Easy. Now it's in Java. What I have at this point is almost the final project, or at least as final as I want to take the project this semester. With what I have in place now in the backend, I will be able to easily implement a pitching algorithm on the voice file based on the given music file and place that into the playlist instead.

I was able to discuss with my dad some options for my pitching algorithm. I mentioned that I only know how to pitch a wav file based on a constant factor. I also told him that if I keep the sample rate the same, the time markers that are sampled in a wav file are consistent. He recommended looking into splitting a wav file into multiple wav files as a brute force approach that could later be refined. If I know the pitch I want at individual points, having a short wav file to pitch would allow me to use the constant factor. Then I can stitch these wav files back together to form an output. Obviously, this is inefficient, but if I can have this working it would be a start to finding something better.

What I have in place right now for file uploading still has plenty of bugs. You can go ahead and upload just one file or no files, which I don't want the user to do. This just involves some validation that I have yet to implement, and I plan on fixing those things up as I go. These next couple weeks will be going primarily into pitching based on the brute force concept. Hopefully, I will be able to get this to work, because as it stands the algorithm will be easy to slide in.

I made a bit of progress this week. Most of the progress was realizing more of the smaller components that need to be accomplished to tie the UI and the backend together to get the project working. I was able to create two drag and drop boxes on the main page of my application, and these boxes only allow one audio file each and are clearly labeled with which audio file the user should be placing in which box. It's not my ideal solution, but, as of right now, it is the one that was easiest to accomplish and it does the job of limiting input from the user. So, I would call this week a success from the goal standpoint.

However, I began to realize that the next step of this process of tying the Javascript and Java together was going to be a bit of a pain. One thing that browsers are unable to do because of an obvious security risk is learn the full file path to a file on a user's system. They are only able to learn a file name. Because of this, I am going to need to start integrating the CompSci02 server into my work to upload the audio files the user wants to use so I can stream them for the manipulation that has to be done. This week, I plan to arrange a meeting with Dr. Pankratz to discuss this. Next, I do want to change the download feature that is currently included in the UI so it doesn't just download a composite wav file. I think that there should be an option to do this if the user wants to save their mix, but I also want the user to be able to save their playlist. This would be the paths of the two audio files (preferably a server location and not one on the user's system), with the pitched vocal file, so the playlist can be re-uploaded later and split up into its two wav files for editing and playing back. I feel that the download itself won't be too difficult if I figure out how to save the files to the server, but the re-upload will be a bit more of a challenge. I'm definitely getting into the harder work of the project, so progress is considerably slower than it was at the beginning. However, I do feel good about the progress that I have already made, and I feel that I have identified the areas that still need work. I am continuing to look into the actual pitching of a wav file based on pitches of segments, and I really hope to get an implementation done over spring break, as well as have uploading near complete. This week, before spring break, I am going to play more with the Java and pitching and I am going to discuss communications with CompSci02 with Dr. Pankratz. I want to be in a good position to get a lot accomplished over spring break. 

Not much was accomplished this week. I continued researching possibilities in pitching segments of wav files, which will be useful when I can get that into code. As I thought about trying to implement an algorithm this weekend, I realized that not only do I still have more that I want to look into (which I expected) but I also felt that I was starting in the wrong place.

I feel that, rather than trying to go for the gusto and get pitching a whole wav file to match another file working, I should start working to get the back end Java working with the front end Javascript. Not only will this allow me to see more problems earlier in terms of my implementation in the UI, but it will also give me more of a feeling of satisfaction in terms of getting things accomplished. These past couple weeks have been rougher for me because I have not been able to get as much visually accomplished. However, I have been continually researching and coming up with ideas to tackle the big problem in my project, so it is not as if I am doing nothing. I think that beginning to tie in my UI and Java work will be good progress.

In addition, I feel that I do better when I have more concrete goals laid out for me like I did the first couple weeks. So, this week, my goal is to create a button on the interface that allows the user to upload their music track and their vocal track. Then, the tracks will be visualized in their waveforms. In the background, I'll detect the pitches of each file and save them in separate lists, so they can be changed later.

I suppose the title might be a bit too harsh on myself, but considering how much progress I made in the first few weeks, this week I felt like I did absolutely nothing. Looking back on it, I have made progress by writing simple code that stores all of the pitches read from a file and puts them in a list, changing the -1 Hz readings to the most recent reading that was not -1. This is a good placeholder to start until I come up with a better algorithm for replacing the bad readings.
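The placeholder described above, replacing each -1 Hz reading with the most recent valid reading, can be sketched in a few lines of Java. This is an illustrative version rather than the project's code: the class name `PitchCleaner` and method `fillGaps` are invented for the example, and it assumes the pitches are already stored as a `List<Float>` in Hz, with any negative value meaning that no pitch was detected.

```java
import java.util.ArrayList;
import java.util.List;

public class PitchCleaner {

    /** Marker used when no pitch could be detected for a reading. */
    public static final float NO_PITCH = -1f;

    /**
     * Returns a copy of the readings in which every missing value is replaced
     * by the most recent valid reading. Missing values at the very start stay
     * at NO_PITCH because there is nothing earlier to copy from.
     */
    public static List<Float> fillGaps(List<Float> pitches) {
        List<Float> cleaned = new ArrayList<>(pitches.size());
        float lastValid = NO_PITCH;
        for (float pitch : pitches) {
            if (pitch >= 0f) {
                lastValid = pitch; // remember the latest usable reading
            }
            cleaned.add(lastValid);
        }
        return cleaned;
    }
}
```

For example, `fillGaps(Arrays.asList(-1f, 393f, -1f, -1f, 440f, -1f))` returns `[-1.0, 393.0, 393.0, 393.0, 440.0, 440.0]`. The leading -1 is left alone on purpose, so a later step can decide how to treat audio that comes before the first detected pitch.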

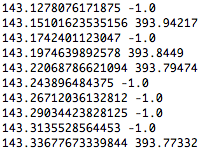

I also discussed the concept of filtering sounds with both Dr. Pankratz and Dr. McVey. They have both seen projects done before that filtered out noise to find the beat in the music. I'm doing something very similar, except I'm trying to filter out noise to possibly find the highest or loudest pitch. I'm still not quite sure how I want to go about this, but I at least have a place to start looking. Unfortunately, that's really it for my blog post this week.

For week 5, I want to look into how I can write a .wav file, manipulating the pitches of an existing .wav file with pitches that I have stored in a list. I already know that I can pitch a file and write out a new one with a constant factor, but what if the factor isn't constant? This may not be something that I can get done in a week, but I really feel that it is my next step. Adjustments to my algorithm can come later.

I was actually starting to doubt midweek that I would be able to accomplish my goal for the week, but I am excited to say that I did! This past week, I successfully detected pitch from a .wav file and wrote out pitches in Hertz. I also found sample code that allowed me to pitch an audio file, reading one .wav file and saving it to a given output .wav file. It turns out that, out of all the pitch detection methods, the McLeod Pitch Method is the best one for this project. I don't quite know why, but it has been the most consistent in getting me the most results.

However, I encountered a problem that I figured was going to happen, but I now know a bit more about what form it will take. When working with an audio file, TarsosDSP obviously works best with a file that only has one pitch occurring at a time. However, it is hard to find a lot of music that works like this. So, when detecting pitch of what I would consider to be a "standard" music file (in this case, "Roses of May" from the video game Final Fantasy IX), we end up with something that looks like what you see in the accompanying image.

The points where the program gives me a reading of 393 Hz are reasonable. That's right around G4. However, there are a lot of points where the program tells me it found a pitch of -1 Hz. This is because the algorithm used by TarsosDSP deems the track to be "too noisy" at this point to read one solid pitch. While I did expect this, what I was unsure of was how this would affect the output file if I were to attempt to pitch it. If it can't read the pitches, then what does it think is there? Turns out, it chops out the parts where the pitch was read as -1 Hz and makes a really interesting remix. This won't quite work if I am going to pitch an audio file based on these values. I may need to figure out how to pick and choose data points, and see when the pitch is actually changing.

To end, my goal for this week is to get an idea of how I am going to deal with this issue. Should I ignore it and go for really simple audio files? I have a feeling that will be difficult to do, though, as even simple recordings of solo instruments will have overtones. Or, should I work on an algorithm that will replace the segments that have -1 Hz with the pitch before, and sustain until the next detected pitch? It may not come out as a complete match to the original track, but will it be close? I will have fun toying around with this. (Also, if you want to hear the "cool" remix I made, ask me. It'll be your prize for reading all of this!)

I am happy to say that I was able to accomplish my goal for this week! I am now able to visualize waveforms for multiple audio tracks! I am almost positive that I will not always be able to accomplish my goals in weeks to come, so I'm really celebrating this one. Right now there is not a lot going on that is 100% mine, which is weird to me. I know that this won't be the case in the future, as I have yet to work on implementing the pitch detection algorithm of my choice and actually using it to manipulate audio files. In the meantime, what is going on is all from the Waveform Playlist Javascript library that can be found on GitHub. It gives me some editing functions for free and possibly a save function. I may tweak it so it saves a playlist object and, therefore, can reopen the playlist as a whole. Currently, it saves down the mixed audio track, which may be useful as well.

My goal for this coming week is to implement a pitch detection algorithm using TarsosDSP and successfully pitch an audio file. I'm not even going to try to combine it with my current project yet. I just want to create a Java project that will take a .wav file and spit out a pitched .wav file, and read back to me the pitches it finds in the original file. Things will start to get more interesting if I can do this.

I realize it's not Monday yet, but I like to write out my thoughts, so more blog posts for everyone! Yay! Anyways, I've managed to get version control set up for my project. Git is wonderful, which I never imagined myself saying when I started learning how to use the tool. I have decided to go with Eclipse for coding, because I believe I may now stray towards the route where I am most comfortable, which is a web application. I'm used to coding in Eclipse or some flavor of Eclipse, so this seems like the most natural choice for me.

Since I'm going towards a web app, this means I will be using Javascript in addition to Java. I want to use a combination of the two, most likely Javascript to give the basic waveform and editor and Java for the backend that deals with the actual .wav files and data manipulation. This feels most natural to me. I still need to discuss ideas with Dr. Pankratz to make sure that I'm still on the right course, but writing something tells me whether or not I know what I want to do.

As of right now, I'm leaning towards using an open-source Javascript library called Waveform Playlist to generate the waveforms of given audio files. I'm still debating on the backend. A couple things that I've found that seem interesting and may have what I need are TarsosDSP and SonicAPI. They have pitch shifting and utilize algorithms similar to that of a phase vocoder. I still have a lot of research left to do in this area, which is why I haven't made a decision here. Understanding the math that is behind the scenes here and what I will need to manipulate will ensure I do not pick a library that is too restrictive. Now that I've been writing for a while, I think it's time for me to go visualize some audio files. Hopefully I'll have some good news this weekend!

This past week was the week that I have simultaneously been looking forward to and been dreading for the past three and a half years of my college career: the week where I receive my final project. Truthfully, I was mostly excited, but when you don't know what you are going to get there is always that fear of the unknown. However, I feel that I got a great project that suits my interests. Turning speech to music clearly appeals to the musical side of me, but it is also a very mathematical project. This final assignment will do a good job combining both my majors and my minor. It is also a good thing that I enjoy learning (if I didn't, I wouldn't be a computer science major) because this project involves a lot of parts that are entirely new to me. I will be diving through Google and talking with professors to figure out how I will tackle this challenge.

As I write this, I am downloading NetBeans and getting my computer set up to code. I'm planning to do a comparison of NetBeans and Eclipse because, while I know that I want to write this project in Java, I want to pick the IDE that will make building a desktop application a bit easier. I've been doing quite a bit of research this past week, digging into potential pitch detecting algorithms and what libraries Java has available that may help me out. It turns out there is quite a bit that might be useful to me in the long run, so I've been bookmarking like crazy. My goal for this coming week is to be able to get a simple application running that produces the waveform of a given audio file. This will complete the first requirement specified in my project, and would be great progress!

Author

Caitlin Deuchert is a senior at St. Norbert College pursuing a Bachelor of Science in Computer Science and Mathematics.

Archives

May 2017
