
I haven't written here in quite a while!

I won't lie, it's been a real test of my patience to get everything together for the coming presentation on Saturday, whether because other classes needed my time or because I just wanted to skip ahead to May 15th. But now I am happy to say that automation is done! Anything I do after this post will be purely cleaning up what I already have, though as far as I'm concerned, I'm ready to wrap this up. There are some things that I wanted to get to (my stretch goal of the bot being able to recognize new blocks during automation was not met) and things I wanted to do better (the instruction-making process of automation seems too brute-forced for my taste, though it is legible enough for even a beginner coder to understand). Nevertheless, figuring out how to do automation in a language that I'm not exactly a fan of (I used JavaScript for the bulk of it, and I still hate the var keyword) was a win in my book. Plus, the automation isn't a "one-trick pony," i.e. it doesn't require you to reload the webpage to run it again.

Now, the biggest thing on my agenda for this course is to prepare my presentation and all of the remaining documentation for the website and code. I know that there is some pre-existing documentation of the set-up for the bot that I can use, since not a whole lot has changed since it was passed over to me. Ultimately, I need to keep telling myself "May 15th" and then I'll be home free, though I am already at home!


This week I believe I was able to complete the file formatting for my automation (i.e. Step 2). The bot uses a micro SD card underneath the Wi-Fi module for file I/O, so I need to get a reader in order to load it with my DAT file and run it. I already know how to interface with the micro SD card, so that won't be an issue. I'm also confident that my code for it is up to snuff, so I'm not worried at all about getting it to work.

It's been interesting using C for file reading, especially since I feel that C++ makes it less strenuous given that you have a string class to use, while C has only char pointers and arrays. I had to make some changes to my file formatting given my inexperience with C: originally I wanted to use ordered pairs, but instead only digits are used on each line to denote things like the starting row, starting column, ending row, ending column, etc. I know this can be fixed with a better understanding of char pointers and made simpler for any user to understand. The grid itself is easy enough: just have characters lined up and read top to bottom, left to right.

I've also begun work on the algorithm for automation. Currently, I'm trying to write out every possible case (e.g. destination is upwards, you're facing left), and then I'll merge them together to form something more concise. I'm hoping that by the time I begin to work on blocks, most of the heavy lifting will have been done, especially with the overall skeleton of the algorithm.

Now that I have something to write, I can finally update my blog.
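The case-by-case approach above (for example, the destination is upwards and the bot is facing left) can be folded into a little arithmetic. What follows is only a minimal sketch in JavaScript, not the code from this project: the heading names, the `quarterTurns` and `planMoves` helpers, and the rows-then-columns route are all assumptions made for illustration, with row 0 taken as the top of the grid.

```javascript
// Headings listed clockwise so that a turn becomes modular arithmetic.
// These names and this encoding are assumptions for illustration only.
const HEADINGS = ["up", "right", "down", "left"];

// Returns the number of 90-degree clockwise turns needed, in the range -1..2.
// -1 means one counterclockwise turn and 2 means turn around.
function quarterTurns(facing, target) {
  const from = HEADINGS.indexOf(facing);
  const to = HEADINGS.indexOf(target);
  if (from === -1 || to === -1) {
    throw new Error("unknown heading");
  }
  const diff = (to - from + 4) % 4; // 0..3 clockwise quarter turns
  return diff === 3 ? -1 : diff;
}

// Builds a list of instructions from one cell to another on an
// obstacle-free grid: cover the row difference first, then the columns.
function planMoves(start, end, facing) {
  const moves = [];
  let heading = facing;
  const legs = [
    { cells: Math.abs(end.row - start.row), dir: end.row < start.row ? "up" : "down" },
    { cells: Math.abs(end.col - start.col), dir: end.col < start.col ? "left" : "right" },
  ];
  for (const leg of legs) {
    if (leg.cells === 0) continue; // nothing to travel along this axis
    const turns = quarterTurns(heading, leg.dir);
    if (turns !== 0) moves.push({ action: "turn", quarterTurns: turns });
    moves.push({ action: "forward", cells: leg.cells });
    heading = leg.dir;
  }
  return moves;
}
```

For example, a bot facing left at row 2, column 0 that needs to reach row 0, column 3 would get: turn one quarter clockwise, go forward two cells, turn one quarter clockwise, go forward three cells.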

Last week was our semi-formal walkthrough of our projects. I kept code to a minimum and instead focused on the overall game plan that I wish to pursue. Given that I really need to be focusing on automation for this project to succeed, I devised a seven-step plan:

I've already completed the first step by learning the physical capabilities of the bot, where I need to remember the chopped-off decimal values in the distance calculation so that I can correct for them. Though this only becomes dangerous when the bot has to travel long distances, it still needs to be rectified in either step 3 or 4. Overall, this is a matter of sitting down and actually doing it, rather than only speaking about it. However, even just speaking about it, I do feel confident in my ability to complete step 6 and have something for step 7 by the time of presentations.

No major updates this week other than that COVID-19 has made it so that none of us will be returning to SNC for the rest of the semester. Though those around me have voiced concern about all of the changes that will have to be made and the like, I'm still not sure how I should be feeling given that Spring Break has only just ended, and I've yet to truly see how my education will change. Nevertheless, I will try to remain pragmatic and undeterred as I finish up this semester three hours away from the classroom.
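Coming back to the chopped-off decimal values in the distance calculation: one possible fix is to remember the truncated remainder and add it to the next leg. The sketch below is an assumption about how that could look, not the project's actual code. It uses the bot's figures of 64 encoder ticks per revolution and a 208 mm wheel circumference (3.25 mm per tick), and `makeTickCounter` is a hypothetical helper that assumes non-negative distances.

```javascript
// Figures for the bot's wheels: 64 encoder ticks per 208 mm revolution.
const TICKS_PER_REV = 64;
const WHEEL_CIRCUMFERENCE_MM = 208;
const MM_PER_TICK = WHEEL_CIRCUMFERENCE_MM / TICKS_PER_REV; // 3.25 mm

// Hypothetical helper: returns a function that converts each leg's distance
// into whole ticks while carrying the truncated remainder into the next leg.
function makeTickCounter() {
  let leftoverMm = 0; // distance dropped by earlier truncations
  return function ticksFor(distanceMm) {
    const owedMm = distanceMm + leftoverMm;
    const ticks = Math.floor(owedMm / MM_PER_TICK); // the bot can only move whole ticks
    leftoverMm = owedMm - ticks * MM_PER_TICK;      // remember what was chopped off
    return ticks;
  };
}
```

With four 100 mm legs, this gives 30, 31, 31, and 31 ticks (399.75 mm in total), whereas truncating each leg on its own would give 30 ticks every time (390 mm).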

In any case, I will have to find a time to return the bot and keys to SNC. Regardless, it will be important for me to keep at the project, even if I am doing it from the comfort of my home for the rest of the semester. After meeting with Dr. Pankratz and talking with my dad, I believe that I have a solid game plan for how I should start doing automation:

Overall though, I do feel confident knowing that I have a solid game plan ready, especially with automation.

Not much has happened since last week, but work has gone into figuring out how exactly I'll be doing automation.

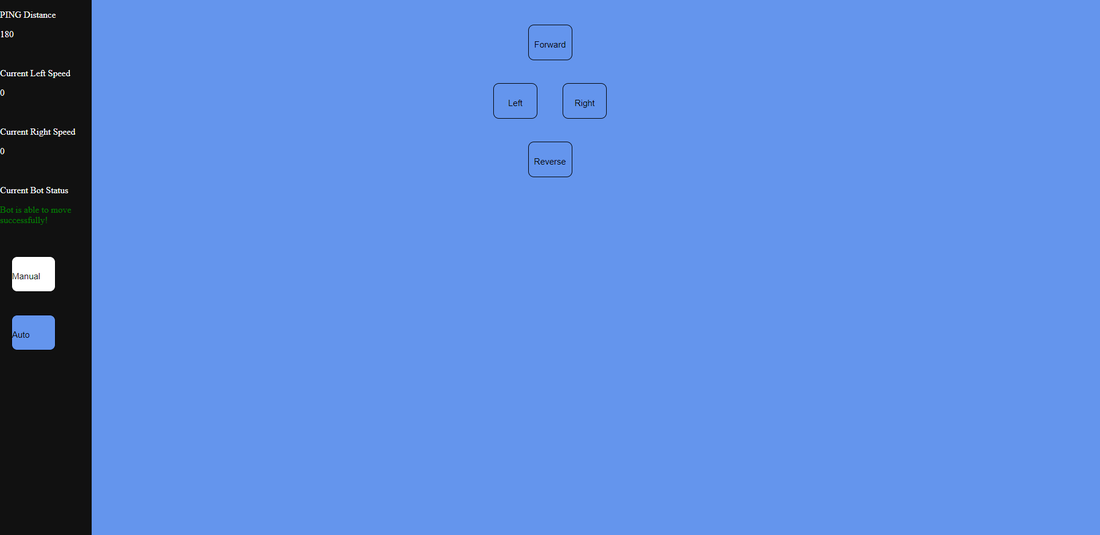

Instead of having the bot wander around the room or focus on colors, my plan is to simply specify a location on a grid (specifically the center of a cell) and have the bot move there. To measure distance, I can use the wheel encoder ticks (there are 64 ticks per revolution of the bot's wheels, which are 208 mm in circumference, so each tick is 3.25 mm). To start out, I plan to focus on movement without obstacles, and only then start worrying about such things as obstacles and alternate paths. I will be meeting with Dr. Pankratz this Thursday to talk more about the automation, and I think that I have a good proof of concept to show him. Best case scenario right now is that I have a game plan before Spring Break so that I can start working on some automation back home.

Thanks to pre-existing code, I was finally able to build the HTML controller for the bot to my preference. Unlike the previous iteration of this project, the controller works by holding down one of the directional buttons on the webpage to move the car forwards and backwards, as well as to turn left or right. Given the speed at which it turns, the car is omnidirectional: something that I really wanted. Releasing the button causes the bot to stop, and though the stop can be a bit sudden (the robot's back end lifts up slightly and then falls back down), it isn't anything major and should take little time to correct if need be. In addition, the manual drive uses the PING sensor to detect objects directly in front of the bot. If the bot is within ten centimeters of hitting an object, it will stop in its tracks and allow the user to move backwards so that it can safely avoid or move away from the object.

With the manual drive essentially done, I can really start thinking about automation. An idea I was brainstorming with my dad this weekend was using color sensors as a means to find marked waypoints on the lab floor, or to find a specific object. I will have to talk to Dr. Pankratz about doing something now rather than later. Preferably, I'd like to do something simple like finding a specifically colored object, but I know that the limitations of the bot and my knowledge of it will be crucial in what is to come.

On Sunday, I was able to establish a connection between an HTML web page and the C program on the bot using code previously written by Nathan (I will of course cite him in my code's documentation and add the pre-existing documents of his that I've used so far as resources on the aptly named Resources page). Not only that, I was able to get the bot to move with simple commands sent from HTML buttons. And although it is not a finished product, I know that my code's logic so far is sound, as well as comprehensible.

This week I also talked with Dr. Pankratz about the manual and automated driving. For manual driving, he suggested that I use Nathan's manual driving app as a means to get ahead of schedule. Though I know I'll be using a good deal of what was previously done, I know for certain that I'd like to make some modifications for a more intuitive control scheme, especially with how the bot accelerates and decelerates. For automated driving, he suggested that instead of using the tiled carpeting of the CS lab as my geometric mesh, I try using the ceiling tiles, given how easy it would be to gauge exactly where the bot is. This, however, comes with the disadvantage of needing to install a second PING sensor on the bot so that it can look upward. Thus, given my progress so far, I will continue to work on making the manual controls to my liking while keeping in mind how exactly I'll be doing the automation. Right now it's been a lack of exigency that's been my Achilles' heel, but I know that just around the corner is what will make me go into high gear.

This Thursday, all students had to present a rough draft of what we have planned for our projects and what steps we are taking to go in that direction. We would then solicit feedback, tips, and direction from each other as well as from the professors. In doing my session, I know for certain that the hardest parts of this capstone will be

Thus, for next week, I will focus on making a rudimentary HTML controller and accompanying C code for the robot. If I can make it move by the end of that week, I know for certain that I'm doing something right.

This week, I was able to get the robot used last year from Dr. Pankratz. The robot has thankfully been kept assembled since last year, so although I still need to make sure that none of the robot's hardware has been changed (Dr. Pankratz told me that two others were using it), I was able to connect to the robot via Wi-Fi and run a function to have it rotate 90° clockwise. On my agenda, the next big things are in order:

Luckily for me, there is plenty of documentation from both the Parallax website and Nathan's time on the project for me to peruse as I formulate how I want the robot to function.

Author: Alan Deuchert is a senior computer science major from St. Norbert College in De Pere, Wisconsin.

Archives

May 2020
