Weekly Project Blog

Week of January 27, 2025: The Goldilocks Game

Given the requirements for my project, I decided to pick Connect Four as the game for my AI player. It was important to choose a game with a board small enough to model yet large enough to keep things interesting. Connect Four was the Goldilocks option, with just the right amount of complexity. Throughout this week, I worked on creating a simple playable version of the game in C++. This version had a simple AI player that could recognize wins and blocks.
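As a rough illustration of what recognizing a win involves, here is a minimal sketch of a four-in-a-row check. It assumes a 6-by-7 board stored as integers (0 for empty, 1 and 2 for the players); the names Board and hasWon are placeholders for illustration, not the names used in my program.

```cpp
#include <array>

// Assumed layout: 6 rows by 7 columns, 0 = empty, 1 or 2 = a player's piece.
constexpr int kRows = 6;
constexpr int kCols = 7;
using Board = std::array<std::array<int, kCols>, kRows>;

// Returns true if `player` has four pieces in a row anywhere on the board.
bool hasWon(const Board& board, int player) {
    // Directions to scan from each cell: right, down, down-right, down-left.
    const int dr[4] = {0, 1, 1, 1};
    const int dc[4] = {1, 0, 1, -1};
    for (int r = 0; r < kRows; ++r) {
        for (int c = 0; c < kCols; ++c) {
            for (int d = 0; d < 4; ++d) {
                int count = 0;
                for (int k = 0; k < 4; ++k) {
                    int rr = r + k * dr[d];
                    int cc = c + k * dc[d];
                    // Stop at the board edge or at the first cell that is not ours.
                    if (rr < 0 || rr >= kRows || cc < 0 || cc >= kCols) break;
                    if (board[rr][cc] != player) break;
                    ++count;
                }
                if (count == 4) return true;
            }
        }
    }
    return false;
}
```

A block can be found the same way: place the opponent's piece in each open column on a copy of the board, and if hasWon reports a win for the opponent, that column is the one to block.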

Week of February 3, 2025: Off to a Good Start

This week was important in creating a worthwhile AI player. Rather than simply playing randomly while looking for wins, this new version of the AI player uses a MiniMax algorithm to "look ahead" as it plays. The MiniMax function simulates future moves up to a specified depth and assigns a score to each resulting board state. From there, the function is able to determine which move gives the AI the greatest advantage while putting the opposing player at a disadvantage.
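The look-ahead idea can be sketched as a generic depth-limited MiniMax. This is an illustrative sketch only, not my player's actual code: State, children, and evaluate are hypothetical stand-ins for a board type, a move generator, and a scoring function.

```cpp
#include <algorithm>
#include <limits>
#include <vector>

// Depth-limited minimax over an abstract game.
// `children(s)` is assumed to return the states reachable in one move
// (empty when the game is over); `evaluate(s)` scores a state from the
// maximizing player's point of view.
template <typename State, typename Children, typename Evaluate>
double minimax(const State& s, int depth, bool maximizing,
               const Children& children, const Evaluate& evaluate) {
    auto next = children(s);
    // Stop at the depth limit or when no moves remain.
    if (depth == 0 || next.empty()) return evaluate(s);

    double best = maximizing ? -std::numeric_limits<double>::infinity()
                             : std::numeric_limits<double>::infinity();
    for (const auto& child : next) {
        // The opponent moves next, so the roles flip at each level.
        double score = minimax(child, depth - 1, !maximizing, children, evaluate);
        best = maximizing ? std::max(best, score) : std::min(best, score);
    }
    return best;
}
```

The player would call this once per legal move and pick the move whose resulting state has the best score. A deeper search sees further ahead, but the number of simulated boards grows quickly with depth.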

Week of February 10, 2025: Introducing the ANN

Throughout the week, I met with McVey and Meyer to ensure a quality start to the artificial neural net game player. The ANN player will be a subclass of the MiniMax player so that it can inherit many of the same functions while using the neural net as the heuristic function. In addition, I added a softmax function to the MiniMax player, which introduces a small amount of randomness. This will help the player choose between similarly scored moves.
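To show how a softmax can add a little randomness, here is a minimal sketch. It assumes each candidate move already has a score; the temperature parameter and the names softmax and pickMove are my own illustrative choices, not necessarily what the program uses.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Turns move scores into probabilities. A lower temperature (assumed
// parameter, must be positive) makes the best move more dominant.
std::vector<double> softmax(const std::vector<double>& scores, double temperature) {
    std::vector<double> probs(scores.size());
    if (scores.empty()) return probs;
    // Subtract the maximum score so exp() cannot overflow.
    double maxScore = *std::max_element(scores.begin(), scores.end());
    double sum = 0.0;
    for (std::size_t i = 0; i < scores.size(); ++i) {
        probs[i] = std::exp((scores[i] - maxScore) / temperature);
        sum += probs[i];
    }
    for (double& p : probs) p /= sum;
    return probs;
}

// Samples a move index in proportion to its softmax probability.
int pickMove(const std::vector<double>& scores, double temperature, std::mt19937& rng) {
    std::vector<double> probs = softmax(scores, temperature);
    std::discrete_distribution<int> dist(probs.begin(), probs.end());
    return dist(rng);
}
```

With this approach, two moves with nearly equal scores are chosen about equally often, while a clearly better move is still picked most of the time.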

Week of February 17, 2025: Planning the Learning Process

At the beginning of the week, I spent some time implementing some of the important parts of the ANN in the code. At this point the ANN player works, but since all the weights in the matrices are set to one, the player is really bad. The second half of the week was spent planning with Dr. Meyer how to properly run the code and alter the weights to improve the player. This whole process is still confusing at this point, but I will take small steps towards completing it.

Week of February 24, 2025: Preparing to Learn

Throughout this week, I rewrote and cleaned up portions of my program to prepare it for the learning process ahead. For example, rather than letting the user choose the type of players, the program now immediately runs a specified number of games between two MiniMax players. Additionally, the code can now read in a board state from a file and write out the calculated winning percentage for that specific board state. Looking ahead to next week, this information will be used in the mathematical portion of the ANN learning process. Other improvements in the works include a board class for easier use of functions, adding the search depth to the MiniMax constructor for testing, and determining the proper interpretation of tied games.

Week of March 3, 2025: Figuring Out the Math

This week, I finished up some more alterations to the code so that in one run, the program creates a set of random boards from simulated games, plays a thousand games between two MiniMax players from each board to get an estimated winning percentage, and then compares that percentage to the output of the ANN, which we will use in the learning process. Outside of the code, I have been working through a small example of a neural network to ensure that I understand the calculus required to set up the learning process. This will help to minimize the chance of errors when writing the program next week.

Week of March 10, 2025: Completed Model

Throughout this week, I worked on completing a small model of the neural net that explains how forward and backward propagation work. I thought about displaying this picture here, but I think it makes more sense to eventually put it under the Project section of this website. This will help viewers get a better idea of the scope of this project, since much of the work is done behind the scenes and is not easily seen in an interface. Also, as a note on the work done over spring break, I updated parts of the code so that all the weights and node values in the neural net can now be written to and read from a binary file. In addition, I set up a function that will eventually become the back propagation function to update and save these weights.
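Saving and loading weights in binary can be sketched as follows. The file layout here (a 64-bit count followed by the raw doubles) and the function names are assumptions for illustration; my program's actual format may differ.

```cpp
#include <cstdint>
#include <fstream>
#include <ios>
#include <string>
#include <utility>
#include <vector>

// Writes the weights as: [64-bit count][count raw doubles]. Assumed format.
bool saveWeights(const std::string& path, const std::vector<double>& weights) {
    std::ofstream out(path, std::ios::binary);
    if (!out) return false;
    std::uint64_t count = weights.size();
    out.write(reinterpret_cast<const char*>(&count), sizeof(count));
    out.write(reinterpret_cast<const char*>(weights.data()),
              static_cast<std::streamsize>(count * sizeof(double)));
    out.close();
    return !out.fail();
}

// Reads weights written by saveWeights. Leaves `weights` untouched on failure.
bool loadWeights(const std::string& path, std::vector<double>& weights) {
    std::ifstream in(path, std::ios::binary);
    if (!in) return false;
    std::uint64_t count = 0;
    if (!in.read(reinterpret_cast<char*>(&count), sizeof(count))) return false;
    std::vector<double> loaded(count);
    if (!in.read(reinterpret_cast<char*>(loaded.data()),
                 static_cast<std::streamsize>(count * sizeof(double)))) {
        return false;
    }
    weights = std::move(loaded);
    return true;
}
```

A raw binary dump like this is only portable between machines with the same byte order and double format, which is fine when the same computer both trains and plays.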

Week of March 24, 2025: Ready to Train

The long process of trying to understand and implement the training process now has a light at the end of the tunnel. Prior to the meeting this week, I implemented the learning process for the first two layers of the neural net. These two layers are a little more specific, but any layer after that can be generalized since it uses the layer prior. In our meeting this week, we went through the general case on the board so that I now understand how it will work in my program. Additionally, I have changed the program so that it uses a learning set of ten boards rather than updating the weights after each individual board.

Week of March 31, 2025: Cleanup Work

In its current state, the back propagation function "does a thing," but it is hard to know whether or not it is working properly. I am having issues with the array changing too drastically, which actually increases the amount of error in the neural net rather than decreasing it. This leads me to believe that something is not working properly and that the function will have to be tested and fine-tuned. I plan on working on this throughout the rest of the week, and hopefully it will be working properly by the weekend. The rest of the program is set up to allow for a specified number of boards in a learning set: each board adds to an array of updates, and the weights are updated from that array at the end of the learning set.

Week of April 7, 2025: Not Converging

It feels like we are getting closer to having a working back propagation function, but I am still running into issues. Besides a small portion of the back propagation function, the rest of the program is completely set up and able to run. In order to test it this week, we tried running the program using the same test board over and over. Ideally, we would see the result of the ANN function converge to the expected value for the board. Instead, the error value initially seemed to behave correctly, but over longer runs the result of the ANN would eventually converge to zero or diverge to infinity.

Week of April 14, 2025: Working on Limited Boards

As mentioned in the previous blog post, to test the back propagation function, the program was set up to repeatedly train on the same board. We did this in hopes of seeing the ANN "master" that board, with the value of the error going to zero. This week, I was able to get this working, so I set it up to do the same process with a set of five boards. Fortunately, the ANN was able to learn all of these boards at the same time. From here, we should be ready to set up the program to run on a much larger learning set.
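The single-board test can be sketched with a tiny network. This is not my actual ANN: it assumes one hidden layer, sigmoid activations, a squared-error measure, and plain gradient descent, and the TinyNet name and all sizes are placeholders for illustration.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// A tiny one-hidden-layer network with a single output, for illustration only.
struct TinyNet {
    std::vector<std::vector<double>> w1;  // w1[h][i]: input i -> hidden node h
    std::vector<double> w2;               // w2[h]: hidden node h -> output

    static double sigmoid(double x) { return 1.0 / (1.0 + std::exp(-x)); }

    // Forward propagation; also returns the hidden activations for training.
    double forward(const std::vector<double>& in, std::vector<double>& hidden) const {
        hidden.assign(w1.size(), 0.0);
        double z = 0.0;
        for (std::size_t h = 0; h < w1.size(); ++h) {
            double sum = 0.0;
            for (std::size_t i = 0; i < in.size(); ++i) sum += w1[h][i] * in[i];
            hidden[h] = sigmoid(sum);
            z += w2[h] * hidden[h];
        }
        return sigmoid(z);
    }

    // One back propagation step on the error 0.5 * (out - target)^2.
    // Returns the error measured before the weights were changed.
    double trainStep(const std::vector<double>& in, double target, double rate) {
        std::vector<double> hidden;
        double out = forward(in, hidden);
        // Chain rule through the output sigmoid.
        double deltaOut = (out - target) * out * (1.0 - out);
        for (std::size_t h = 0; h < w1.size(); ++h) {
            // Compute the hidden delta with the old w2[h] before updating it.
            double deltaHidden = deltaOut * w2[h] * hidden[h] * (1.0 - hidden[h]);
            w2[h] -= rate * deltaOut * hidden[h];
            for (std::size_t i = 0; i < in.size(); ++i) {
                w1[h][i] -= rate * deltaHidden * in[i];
            }
        }
        return 0.5 * (out - target) * (out - target);
    }
};
```

Calling trainStep over and over with the same input and target should drive the returned error toward zero. If the error grows instead, the usual suspects are a sign mistake in a delta or a learning rate that is too large.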

Week of April 21, 2025: Set up to Run

Since the ANN has proven to work on a limited set of boards, the program has now been set up to run indefinitely with random board inputs. Since there are about two weeks remaining before the final presentations of the project, the program will use most of this time to run. It will be interesting to see how quickly the error starts to decrease and how strong the ANN becomes at playing Connect Four.

Week of April 28, 2025: Running

The program has been running continuously for about a week at this point, and there have been some small improvements in the play of the neural net. I have also seen the error decrease over iterations, which will be interesting to show in my presentation. I have spent time this week talking to Dr. Meyer about how to properly present my project while also keeping it understandable to the average audience member.

Week of May 5, 2025: Presentation Complete!

For the most part, I am happy with the way that demo day went and think that my final project turned out well. This week, I am working on cleaning up and commenting on my code before the final version is uploaded to my website. This has also been good preparation for the defense coming up to ensure that I understand all parts of my program.

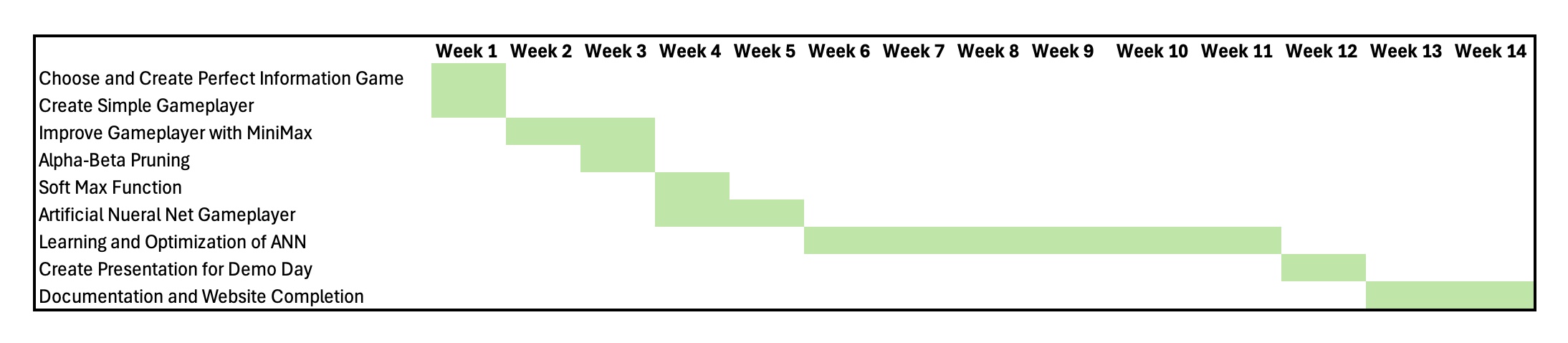

Project Schedule