Date: 4/26/2012

Current

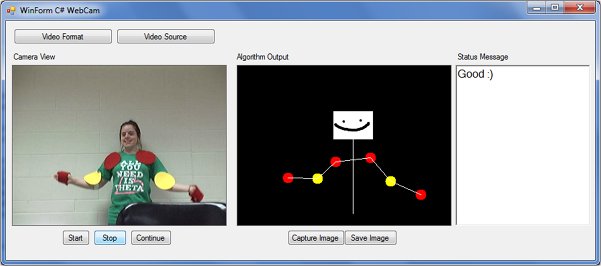

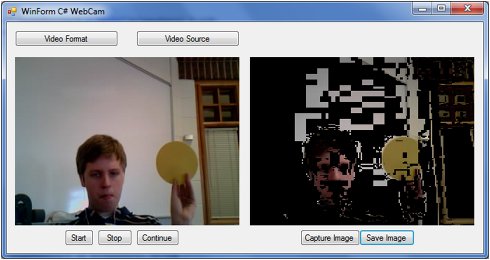

In the last stretch of the project I have finalized my project code. I have improved my red pixel check so that it now handles more lighting situations. I have also built in and corrected all the error messages I use to alert the user. These try to tell the user how to correct their situation so my program can draw as expected. I have commented the final product pretty thoroughly in hopes that the person after me will have an easy time picking it up and adapting it. One of the final touches that was added is a face and a neck for my stick man. This was an added touch by Sam Bonin, an art major who was a great help with my program. She was my guinea pig who did all the action in front of the camera while I did my coding and adjusting.

Product demo for my final presentation.

Presentation.pptx that I presented on Monday 4/23/2012.

Other last-minute adjustments: the timer now only kicks off the background worker when the worker is not busy, so a new pass does not start until the previous one is done. Also, the tick of the timer, which was set to one second, is now reduced to a quarter of a second. Because the program is checking more often, the process feels very real time with minimal delay.
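The "only start the worker when it is not busy" rule can be sketched as follows. This is a minimal Python illustration, not my actual C# code (where the check would presumably be something like `BackgroundWorker.IsBusy`); the class name `BusyGuardedWorker` and the `skipped` counter are hypothetical names made up for the example.

```python
import threading


class BusyGuardedWorker:
    """Runs a job on a background thread, but never two passes at once."""

    def __init__(self, job):
        self._job = job                 # the image-processing pass (assumed callable)
        self._busy = threading.Lock()   # held while a pass is running
        self.skipped = 0                # ticks ignored because a pass was running

    def on_tick(self):
        """Call on every timer tick. Returns the started thread, or None if busy."""
        if not self._busy.acquire(blocking=False):
            self.skipped += 1
            return None
        thread = threading.Thread(target=self._run)
        thread.start()
        return thread

    def _run(self):
        try:
            self._job()
        finally:
            self._busy.release()        # mark the worker as free again
```

With a quarter-second timer, a tick that arrives while the previous pass is still running is simply dropped, so slow frames cannot pile up behind each other.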

Moving forward

Now time to finalize the website, clean up all my stuff for my project, and get my binder together for submitting. I am looking forward to senior defense.

Date: 4/16/2012

Current

Reflecting on the process I have come to use, I would like to go into further detail about what has happened in the last week or so. I was originally having issues with my arrays and how to use them in my process. When the AForge function call to find all blobs returned the blobs to my array, I split the blobs into two sub-arrays: one for the limb end point blobs and the other for the bend point blobs. At the time, the end point blobs were my orange dots; the bend point blobs were, and still are, my yellow dots. I have since switched to red dots instead of orange dots. This allowed me to stop picking up the head, because the color range I was trying to detect happened to be detecting my skin color as well.

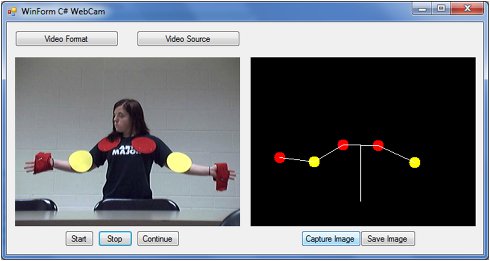

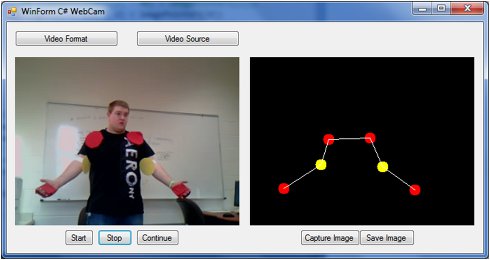

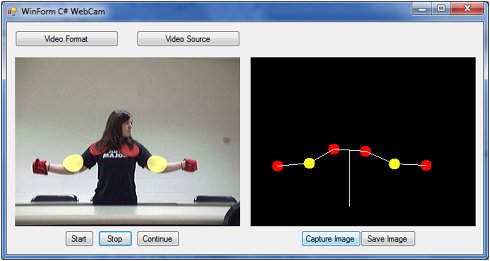

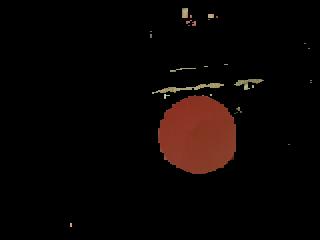

As you can see below, I am constantly fighting lighting issues in order to get the objects to show up. I have a solid range for my red, but the more I open up what is acceptable, the more noise I let in.

After refining it and using it in different environments, you can also see that my algorithm is fairly successful at capturing the dots.

Once I was fairly certain I could capture the dots better and plot the points the way I wanted to, I started to add other parts of the stick person to my display.

So here you can see that I have the body being shown, and I would also like to create a little head to complete my stick figure if possible. Although I would like to incorporate more 3D motion and track more sophisticated motions, I have not figured out how to graphically represent that and must steer away from implementing that concept so late in the semester.

Moving forward

The goal, as stated before, is to build a user feedback function to enforce proper connecting of the dots. By telling the user what issue my program thinks is occurring, I am hoping to create a much friendlier product.

Date: 4/15/2012

Current

It has been some time since my last blog. Since then, I have decided to no longer use my own approach for blob processing and have implemented what is available through the AForge.NET libraries. These libraries find my blobs, store their average color, and give me the key points I need to plot the center of the dots. They also provide a way to determine blob size through the area property, which lets me filter out noise, and a nice erosion function to help reduce noise as well. I am a little bummed I did not realize this existed sooner, but I am grateful to have explored it on my own first because I believe I learned much more. I have also replaced the orange dots with red dots. I have changed the dots for the hands to be bandana-like to allow a less restrictive range of motion. I am still trying to find something for the yellow that I have. I have also started to draw lines connecting the dots and am trying to enforce rules for how to connect them and how to flag the user to adjust themselves so my program can continue to be successful.

Moving forward

I need to finalize the line connecting functionality and the messages telling the user that something needs to be adjusted. I am also finding that capturing 3D motion may not be attainable in the time left, as I am struggling a little with how to alert the user of issues. I would also like to update my sources page to account for all the snippets I have referenced. I will also be posting more media showing the work between the last blog and now.

Date: 4/3/2012

Current

Over the last few days I have been playing around with this recursive concept of trying to find the key points of a blob, that is, of the objects I am trying to detect. I had gotten the recursive function written up, and just as I was about to test it, I decided that I would need a blob class to store the left most, right most, top most, and bottom most points and the color of the blob that I found. While starting to implement this class I stumbled upon the fact that the AForge library has a class and functions that do exactly what I was trying to do. Thus I decided to not reinvent the wheel and to use what they had. I started playing with some of the functions and found that I could get the blobs extracted with a simple function call, and from there I could access data about them, like the rectangle that surrounds each one, giving me all the key points that I was looking for. I found other functions that I was not certain about, like the center of gravity of a blob, which I interpreted to be about the center of the blob because it returned a point (x, y coordinates). When I tried plotting what it returned on my image by drawing circles, I got an unexpected output. It was not the center of the blob but something else. I am not really sure what it is because I did not dig much into it. Further digging did reveal that I can get the average color of the blob and its rectangle. Thus I have all that I need to plot the points in the center of the dot objects and know their color upon asking.

While testing, though, I realized that inconsistent lighting environments make it tough to accurately capture the blobs. When parts of an object are missed because the color of the pixels is not in my expected range, eroding occasionally creates additional blobs, or I even start with blobs that are really part of the same dot but are separate for some reason.

I am learning quickly that my orange range is capturing my face. I think the sun that I have gotten in the past few weeks has me showing up in my search algorithm. I have resorted to using a ski mask to try to eliminate that issue.

Moving forward with testing, I am now omitting blobs/noise that do not have an area of at least 100. The area of the blob is a property of the blob class. I have also decided to no longer keep drawing the exact output that is left behind when eliminating the colors to get the blobs. When I chart the blob centers that are present, I chart them on a new bitmap, and only put them where I see a blob big enough to matter to me. I have also added a simple check to see if the average color of my blob, another piece of data captured by the blob class, is yellow; if not, the program assumes it to be orange. Once I know the color, I draw a small ellipse on the output bitmap in the corresponding color so I can see what the program is calling the recognized object.
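The filtering and charting steps above can be sketched roughly like this. It is a Python illustration under stated assumptions, not my AForge code: the `Blob` class here is a hypothetical stand-in for the library's blob data (rectangle, area, average color), and the yellow test with its `tolerance` value is an assumed placeholder for whatever check is actually used.

```python
from dataclasses import dataclass

MIN_AREA = 100  # blobs smaller than this are treated as noise


@dataclass
class Blob:
    """Hypothetical stand-in for the data the blob class exposes."""
    left: int
    top: int
    width: int
    height: int
    area: int
    avg_color: tuple  # (r, g, b)


def looks_yellow(rgb, tolerance=40):
    """Assumed check: red and green close together, both above blue."""
    r, g, b = rgb
    return abs(r - g) <= tolerance and g > b


def plot_points(blobs):
    """Return (x, y, label) for the center of every blob big enough to keep."""
    points = []
    for blob in blobs:
        if blob.area < MIN_AREA:
            continue  # skip noise
        cx = blob.left + blob.width // 2   # center of the surrounding rectangle
        cy = blob.top + blob.height // 2
        label = "yellow" if looks_yellow(blob.avg_color) else "orange"
        points.append((cx, cy, label))
    return points
```

Using the center of the surrounding rectangle, rather than the center of gravity value that confused me, keeps the plotted point where I expect it on the dot.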

Moving forward

Looking to connect the points in the desired manner so that a stick person can be drawn properly. Also work on the error handling for when the person cannot be drawn.

Date: 4/1/2012

Current

Last week was hectic, but I was able to start working on my blob processing as well as meet with Dr. Pankratz. We discussed not necessarily integrating the KTX humanoid, but instead solidifying what I have. This entails capturing and charting all the key points of my body and then creating an animated stick man that does on the screen what I am doing. This will serve as a proof of concept. The only part that would then appear to be missing to get him to mimic me would be the recognition of the poses that it finds and the sending of the command to execute them. Dr. Pankratz and I also discussed trying to get more of a 3D plane, and a calibration phase where the program will try to learn about the individual that it is looking at and adjust.

Moving forward

This week is focused on making sure my recursion is working properly, working on the 3D plane concept, and addressing that initial calibration phase. I think I will focus on two of these, my recursion and the 3D plane, and on figuring out how to adjust my setup to account for the different types of movement and the displaying of that movement.

Date: 3/26/2012

Current

Finishing up last week, I was able to meet with Derek one more time to discuss ideas and direction for going forward. I have decided to use erosion heavily to reduce noise and leave only the colored objects I am looking for. This does two things for me: it gets rid of noise that may confuse my program, and it reduces the size of each blob. This is important because it will allow me to maintain my speed. I don’t need the shape of the object; I merely need to know its location and pinpoint the middle of it for charting the limbs out.
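To show what erosion does to a small speck versus a larger dot, here is a minimal sketch in Python. It assumes the image has already been reduced to a binary mask (1 where the pixel color matched, 0 elsewhere) and uses a plain 3x3 neighborhood; the function name `erode` is just for illustration and this is not the routine I will actually use.

```python
def erode(mask):
    """One pass of 3x3 erosion on a binary mask (list of rows of 0/1).

    A pixel survives only if it and all 8 of its neighbors are set.
    Border pixels are always cleared because their neighborhood is incomplete.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    out = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            if all(mask[y + dy][x + dx]
                   for dy in (-1, 0, 1)
                   for dx in (-1, 0, 1)):
                out[y][x] = 1
    return out
```

A single stray pixel disappears after one pass, while a 5x5 dot shrinks to 3x3 but keeps the same middle, which is all I need for charting.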

Moving forward

Once I have the heavy erosion working, I will use recursion to find the blobs that remain and get their extreme points (left most, top most, bottom most, and right most) and use them to calculate the middle of the object. Then, using the fact that the end points of a limb are color coded orange and the bend point is colored yellow, I will draw lines to show the pose for the limbs. Also, today is the day I get the robot and get to start working with him. I am rather excited.
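The blob search and extreme-point idea can be sketched as below. This is a Python illustration on a binary mask, with one deliberate swap: it uses an explicit stack instead of true recursion (same flood-fill idea, but it avoids hitting a recursion depth limit on large blobs). The function name and the dictionary layout are hypothetical.

```python
def find_blobs(mask):
    """Find 4-connected blobs in a binary mask and report their extreme points."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y0 in range(height):
        for x0 in range(width):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Start a new blob and grow it outward from this pixel.
            left = right = x0
            top = bottom = y0
            stack = [(x0, y0)]
            seen[y0][x0] = True
            while stack:
                x, y = stack.pop()
                left, right = min(left, x), max(right, x)
                top, bottom = min(top, y), max(bottom, y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            blobs.append({
                "left": left, "right": right, "top": top, "bottom": bottom,
                # Middle of the object, taken from the extreme points.
                "center": ((left + right) // 2, (top + bottom) // 2),
            })
    return blobs
```

Each blob's center comes straight from its four extreme points, so the shape of the object never has to be stored.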

Date: 3/(20&21)/2012

Current

It seems that my preliminary test of the HSV format is not as simple as I thought it would be.

I have chosen a range of hues that I would consider to be yellow, and as you see I have lots of noise thus far when trying to detect something as simple as yellow. I have also found that lighting is still a huge factor, as it affects the hue. Bright lighting can make the hue easily recognizable as yellow, but darker lighting gives me hues that are more commonly associated with brown and other colors, so I am becoming more concerned about this very simple approach. I am hoping that I can look into other formats such as YCrCb and see what’s happening there. I am hoping Derek can lend a hand. The other danger with this approach is that the more I widen my hue range to handle different brightness, the more noise I pick up.

After meeting with Derek and discussing my project and ideas, I was able to discover the relation between the RGB values when the color is yellow or orange. So I abandoned the hue idea and decided that simple may be the better approach.

The most extreme yellow can be found by making red 255 and green 255, with a blue value that is significantly less. What I found was that as long as red and green were close in value and red was greater than or equal to green, I got some shade of yellow. I also noticed that green had to be greater than blue for me to eliminate unnecessary noise and other colors. Below you can see the improvement on the yellow.

As for the orange object, I was not sure how to start my analysis of the RGB relation in order to generate a set of constraints that would show only that object with minimal noise. With the help of MS Paint and a few pictures taken of my orange shape in different lighting, I was able to draw up the constraints for the orange shape. First, red must be greater than green, and green must be greater than blue. Red must also be much greater than green. After playing around with it, I chose that red must be about 60 bigger than green for the orange object to be the only thing highlighted by these constraints.
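Written out as code, the two sets of RGB constraints look roughly like this. It is a Python sketch, not my actual C# check. The orange margin of 60 comes from my testing above, while the `closeness` value of 40 for "red and green close in value" is an assumed number picked only for the example.

```python
def is_yellow(r, g, b, closeness=40):
    """Red >= green, red and green close in value, and green above blue."""
    return r >= g and (r - g) <= closeness and g > b


def is_orange(r, g, b, red_margin=60):
    """Red at least `red_margin` above green, and green above blue."""
    return (r - g) >= red_margin and g > b
```

For example, `is_yellow(255, 255, 0)` and `is_orange(255, 140, 0)` both hold, while a gray pixel such as `(128, 128, 128)` fails both checks because green is not greater than blue. Keeping `closeness` below `red_margin` means no pixel can count as both colors.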

Moving forward

Now that I can trace the objects, I will filter out the noise, probably with some variation of erosion, so that only the objects I am interested in remain. I will then undo the erosion on the objects that remain so I can properly plot the centers of my objects for connecting them.

Date: 3/18/2012

Current

I have done research on detecting objects within an image and have come across a color space called HSV, where H is the hue, S is the saturation, and V is the value or intensity of the color. What this allows me to do is select the hue my object fits and from there establish whether the object is present, not by looking for an exact color but by looking for shapes that fall into that hue within a range of value or intensity. The intensity will change due to more or less light, but the hue will remain the same. This will hopefully allow for an easy identification of the object. From there I will be able to figure out, based on the hue of the object captured, which part of the body or limb I am looking at. As stated earlier in my blog, the start and end of my limbs to be tracked will be one color, while the bend point will be another. This approach should allow me to find the objects quickly, plot a point in the middle of each object, identify it, and connect them accordingly, creating a pose for that limb.
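Here is a small sketch of the hue idea using Python's standard `colorsys` module (not the C# code for the project). The function names, the hue range, and the `min_s` / `min_v` cutoffs are assumptions chosen for illustration. The sample colors are idealized values; real camera pixels may not behave this cleanly.

```python
import colorsys


def hue_degrees(r, g, b):
    """Hue of an 8-bit RGB color, in degrees from 0 to 360."""
    h, _s, _v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h * 360


def in_hue_range(r, g, b, lo, hi, min_s=0.4, min_v=0.2):
    """True if the color's hue is in [lo, hi] and it is not too gray or too dark."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return lo <= h * 360 <= hi and s >= min_s and v >= min_v
```

A bright yellow `(255, 255, 0)` and a darker yellow `(128, 128, 0)` both come out at a hue of about 60 degrees, so `in_hue_range(r, g, b, 45, 75)` accepts both even though their intensity differs.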

Moving forward

Implement what I have found and, after playing with it, possibly meet with Derek to gather further insight. Also get the robot this week so that when I figure out the pose I can implement it.

Date: 3/14/2012

Current

Today I did my walkthrough and presented what I had thus far and where I was planning to go. My presentation can be found by clicking the link below.

Some suggestions I received about how to go forward with my project were:

1. Use the color format YCrCb to help avoid problems with brightness.

2. Possibly introduce depth perception to my project by using objects. I would know whether or not to move forward or backward if the object grew in size or became smaller. This would allow for a wider range of motion.

3. After I am able to map the blobs and chart lines showing where my limbs may be, I could use those lines to recognize poses. So instead of mimicking the exact motion, look for poses and do the poses instead.

4. Use flag objects and distinct colors to help my program recognize key points on my body that could track motion/poses. Maybe motion is not the approach, but rather pose identification with the help of flags to show key points.

Moving forward

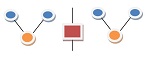

I have decided to implement the last suggestion presented to me, which is to use flags or some sort of color scheme to identify key points on my body. This would allow me to build the shape of the limb a lot more easily, as well as identify poses a lot more easily. As you can see below, we may have some sort of object to distinguish what the middle is, and then on either side you will notice that there are 2 blue dots and an orange dot.

The blue dots represent the start and end of the limb and the orange will represent the bend. This will allow me to know where the arm starts and ends if it stays on its side, as well as build the shape of the arm a lot faster because I know that all blue dots connect to an orange dot. So going forward, I am going to try to start implementing this concept and try to get a prototype to detect the color blobs as well as draw the lines to connect/map the arms.
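One way the "every blue dot connects to an orange dot" rule could be turned into lines is sketched below in Python. The pairing rule used here, connecting each end point to its nearest bend point, is purely an assumption for illustration, since I have not settled on how the dots will be matched; the function name is hypothetical too.

```python
import math


def connect_limbs(end_points, bend_points):
    """Pair each end point (blue) with its nearest bend point (orange).

    Points are (x, y) tuples. Returns a list of (end, bend) line segments.
    """
    lines = []
    if not bend_points:
        return lines  # nothing to connect to
    for end in end_points:
        nearest = min(bend_points, key=lambda bend: math.dist(end, bend))
        lines.append((end, nearest))
    return lines
```

With two end points on each side of the body and one bend point per arm, each arm ends up as two segments meeting at its orange dot.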

Date: 3/5/2012

Current

Today, McVey and I went through my code, and she probed me with the right questions to get me past my issue. We resolved why my program had started to become choppy. The picture resolution was pretty high, so it took the program longer to process the events associated with the image. By reducing the quality of the image I was able to regain the speed of the application.

I was also having an issue implementing my motion detection algorithm because it was not coloring my bitmap properly where motion was detected. After some thorough investigation, it turned out that I needed four bitmaps: my two grayscale images, processed and compared in read-only mode; a copy of the original color image to fill in the pixels that had no change; and an output bitmap that is built from the color image where no motion was detected and colored red where motion was detected. With these four bitmaps, I was able to compare and say: if the difference between the grayscale images is large enough, color that pixel red; otherwise keep the original one.
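The comparison can be sketched in a few lines of Python. This is a simplified illustration, not my C# bitmap code: images are plain lists of rows of `(r, g, b)` tuples, the gray value is computed on the fly by averaging the channels instead of keeping separate grayscale bitmaps, and the `threshold` of 30 is an assumed value.

```python
def to_gray(pixel):
    """Gray level of an (r, g, b) pixel, by averaging the three channels."""
    r, g, b = pixel
    return (r + g + b) // 3


def mark_motion(previous, current, threshold=30, mark=(255, 0, 0)):
    """Build the output image: red where the gray level changed enough,
    the current frame's original color everywhere else."""
    output = []
    for prev_row, cur_row in zip(previous, current):
        output.append([
            mark if abs(to_gray(p) - to_gray(c)) > threshold else c
            for p, c in zip(prev_row, cur_row)
        ])
    return output
```

The inputs are only read and a fresh output is built, which mirrors keeping the compared images read-only and writing the result to its own bitmap.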

Moving forward

The current issue is to handle motion detection as well as reduce the amount of noise that is generated from time to time due to lighting changes. I would like to start associating events with where motion is located, along with its direction, so that when the robot is integrated all I will need to do is cause the motors to act within those events.

Date: 3/4/2012

Current

This past week has not really been a setback, but I am stuck due to some minor thing I am missing within my code. Over the course of the last week I have tried implementing my variation of motion detection as well as the example that I had found in my prior project. For some reason I am not getting either to cooperate with me. I have gotten the image gathering and processing into a separate thread fairly easily. I have also ensured that the main thread is the only thread updating the UI by creating an event that is raised when I am done comparing the old image with the new image. I need a fresh pair of eyes to share some insight into my code, for I have spent far too many hours looking and not really moving forward.

Moving forward

Get a fresh set of eyes on this.

Date: 2/27/2012

Current

To summarize my project thus far, I started with an example of motion detection using video. What I found out was how hard it was to strip out what I didn’t want from a packaged example and add the additional functionality I did want. The issue was getting a hold of the frames so that I could do what I wanted and raise the events I wanted. Since meeting with Dr. Pankratz, I have ventured down a different path. My current path is a simple program that captures images and sets them to a picture box. I have implemented a timer that captures an image every time the timer ticks. The interval is about 1 second. That was initially okay, but as I started to implement a motion detection algorithm, the timer event slowed the UI. I suppose that is not an issue, because when I integrate the robot I may not have the UI. To make sure this will not be an issue down the road, though, I am going to implement my image processing in a thread so it does not tie down the main thread. My current task is to get motion detection into my current project. What I am running into is that the code I have adapted from my first try is not behaving as I had anticipated. I am looking into what I may be missing or doing wrong.

Moving forward

Keep working on the motion detection algorithm and see if I cannot just use what I already have from the previous program. Also try doing the heavy lifting in a separate thread so that the main thread is not bogged down.

Date: 2/22/2012

Current

Thus far this week I have decided to build my own motion detection and am working to grayscale the picture. I have found a snippet of code that uses pointers to traverse the bitmap and average the pixels.

Moving forward

Keep working on detecting motion by comparing the pixels. Simple motion detection is really what I am going for, and then raising events based on the place of motion. Also, I was informed there were image processing books in the library. Off to get those before work tomorrow.

Date: 2/19/2012

Current

After meeting with Dr. Pankratz, we were able to determine that the example I am using for my project base is much too packaged. I need to seek out something simpler. Based on previous work, all I really need is the ability to capture a photo and have a handle on that photo to do what I want with it. I found a nice clean example on Thursday and have been exploring it on and off. I have been able to easily implement a timer to capture pictures periodically and am able to grab a hold of the picture and use it for what I want. This is a big step, as I can now look to start implementing a motion detection algorithm. I may try to use what I had in the previous example as a basis, but I am considering building my own. All I really need to do is remove the color from the images before comparing them, then compare the pixels and ask if there is a big enough change, that is, a change bigger than my threshold. Once I have done so, I should be able to identify a region and raise an event associated with that region.

Moving forward

Plans for this week will be to work in the motion detection I had and get handlers that will assess change in my regions that I will designate to a body part.

Date: 2/15/2012

Current

Still having trouble making the example I have found my own. I have figured out how to draw a horizontal and a vertical line on the image so I can see my regions clearly. While trying to build events for when motion occurs in these regions, though, I have hit yet another wall. The code that looks at the picture and marks it up is a class that inherits from IMotionDetector, so when I add an event handler and try to set it, I am not able to. Also, I have the concept of how to detect motion and direction, but I am not sure where to even begin. I am eager to get something rolling. I have a meeting tomorrow with McVey.

Moving forward

Assess how to get proper events set up and keep at detecting motion within the sections, raising appropriate events. My goal is to start associating direction so I can assess how to move my robot.

Date: 2/13/2012 Evening

Current

Today has been very fruitless, as I am not sure where to start. I am fully dissecting the example I found online. I am not taking anything out, but I am trying to start adding some pieces in. What I would like to add to the project somehow is a divider drawn between my regions. This will allow me to see my regions. Next I will try to look at just one region and display an alert every time movement occurs in there. I am just hitting a wall tonight.

Moving forward

Keep working on breaking up the image into sections and detecting motion within those sections, raising appropriate events.

Date: 2/13/2012 Morning

Current

Late last week I stumbled upon some nice simple projects with motion detection examples. I have documented my understanding of motion detection as I tried to interpret their code for myself. I believe I have a pretty good foundation to start breaking the image down into regions and associating movement in those regions with a body part.

Moving forward

This week’s plan: break down my image into four regions and have the interface tell me when a region has changed, or when multiple regions have changed, and identify the limb associated with that region. Once that is in place, refine it, make events happen, and try to get it to better depict what is happening with that limb, such as whether the limb has moved up or down.
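The four-region idea can be sketched as follows, in Python rather than in the project's own language. It assumes some earlier step has already produced a list of `(x, y)` pixels where motion was detected; the region names, the function name, and the `min_pixels` cutoff are all hypothetical choices for the example.

```python
def changed_regions(changed_pixels, width, height, min_pixels=50):
    """Split the image into four quadrants and report which ones changed.

    A region counts as changed when at least `min_pixels` of the changed
    pixels fall inside it, so a few noisy pixels do not trigger it.
    """
    counts = {"top-left": 0, "top-right": 0, "bottom-left": 0, "bottom-right": 0}
    for x, y in changed_pixels:
        vertical = "top" if y < height // 2 else "bottom"
        horizontal = "left" if x < width // 2 else "right"
        counts[f"{vertical}-{horizontal}"] += 1
    return [name for name, count in counts.items() if count >= min_pixels]
```

Each returned region name could then be mapped to the limb that normally sits in that part of the picture, and an event raised for it.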

Date: 2/7/2012

Current

I have reworked the website to get rid of minor things that I was not liking. I changed the content size as well as have started to implement my timeline. I have also got a hold of McVey and will be emailing her the issue that I am experiencing.

Moving forward

Continue on yesterday's plan and move forward into testing of that algorithm once I have resolved my issue.

Date: 2/6/2012

Current

I was able to find a piece of code that worked with the camera and was able to take a picture. I have attempted to incorporate my initial motion detection algorithm, but I believe syntax, or something I am not aware of, is holding me back. I plan to seek out some help from Dr. McVey. I tried getting my project to take the class, but for some reason it does not like the syntax of the file. I have the utility library that it came from, and maybe I can just reference it from there somehow.

Moving forward

My next goal will be to test the algorithm that I found for doing motion detection after getting past this minor issue. I am really trying to get a proof of concept. Once I am able to merge the code, I am looking to switch the capture from button driven to timer driven, and to no longer store the picture but instead use an old image/new image system to determine whether or not a change has occurred. Once I have an algorithm that recognizes change, I will try to manipulate the concept to see if I can start splitting my image into planes and associating planes with body parts. This is all conceptual right now, but this is my plan of action.

Date: 2/1/2012

Current

Launched and tested website. Success!!!

Looking at Eric's work, and reworking my resume. I am still working to find some open source motion detection algorithms that will be helpful to the project. I believe C# will be my language of choice. This is because the concept itself is very event driven, and the language has vast libraries that will be useful for this. Have a lead @ http://www.gutgames.com/post/Motion-Detection-in-C.aspx

Moving forward

Dig deeper into motion detection algorithm and look much closer at what Eric Vanderwal had done last year.

Date: 1/31/2012

Current

Website has been worked on and progressed smoothly. The initial reaching out has been completed. I have already re-worked my resume and am reviewing it with Dr. McVey tomorrow (2/1/2012). I have also reached out to Dr. Torimoto and asked if she would be willing to work with me in understanding the KXT's documentation, which is in Japanese. Lastly, I have met with Pankratz briefly to discuss the motors that need to be replaced. They have been ordered and will be here soon.

Moving forward

Dig deeper into motion detection algorithm and look much closer at what Eric Vanderwal had done last year.