|

Well just like that. This morning I presented my capstone in front of my classmates and some faculty. I felt like the presentation went pretty well. I almost forgot to present my application of convex hulls, namely the disease containment area but Logan saved my bacon. It is a strange feeling that after all the work that I have put into the project, it is all over. Next Monday I have capstone defense and shortly after that I turn in all my files in a binder and then my journey as a CS major at SNC is complete. What a journey and the only thing I have left to say is thank you to Dr. Pankratz and Dr. McVey for all that they have done over the last three years to help me in computer science. Thank you also to all my classmates for making the past three years enjoyable.

Signing off for the last time, -01001101 01101001 01101011 01100101

0 Comments

This past week has mostly been focused on getting ready for the research forum poster presentations. I am pleased to say that the poster presentation went very well. I have not made any additions to the application. If you want to see my poster for the poster presentation, I put the pdf version of it in the project files section.

This next week will have me beginning to put my capstone presentation together. This should be rather easy since I have had to explain my project and discuss how I implemented it several times now. This is good because I have a linear algebra poster project (how Google works) that I should get going on this week. -01001101 01101001 01101011 01100101 As the weather continues to become increasingly nice outside, it is a reminder that the semester is coming to an end. In terms of projects, that means that the program's i's should be dotted and its t's crossed. That is for the most part what I have been doing this week in terms of the app itself. The app is at a point where it presentable.

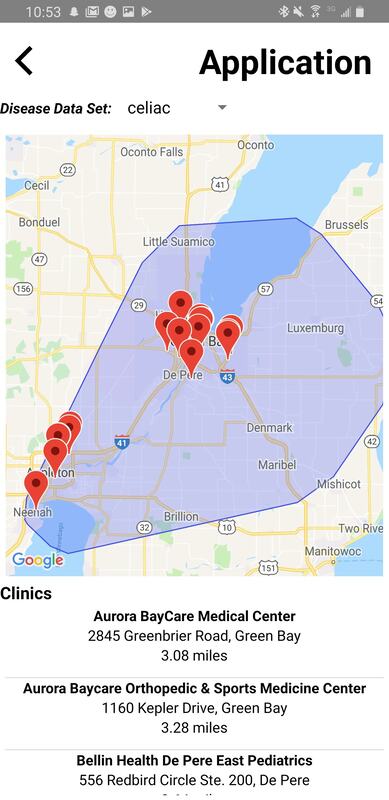

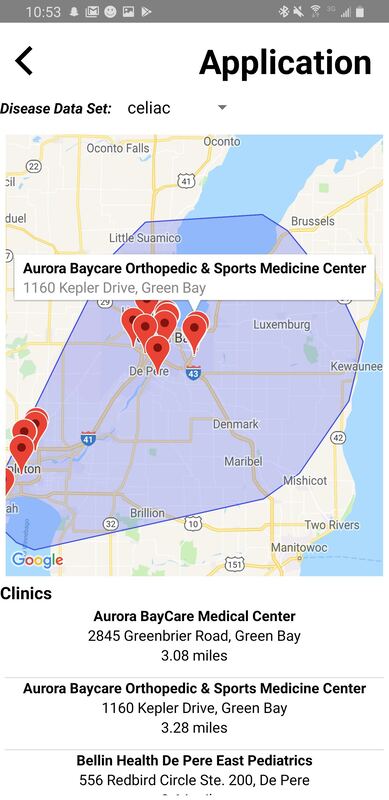

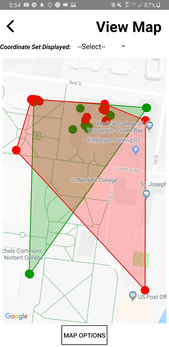

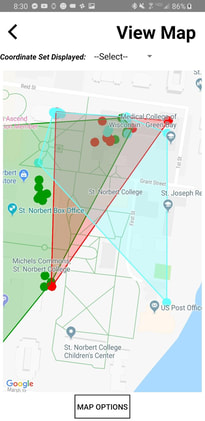

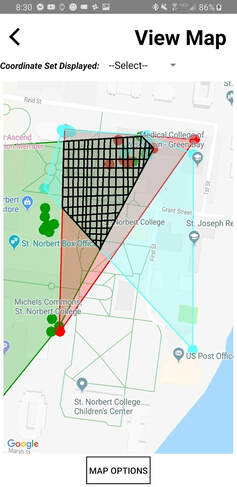

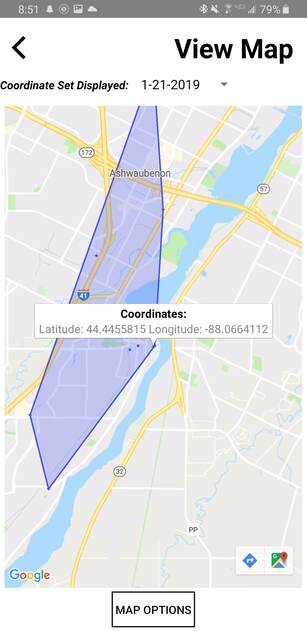

Most of my effort last week was on designing a poster for the Undergraduate Research Forum coming up on April 12. Having run the poster be Dr. Pankratz and Dr. McVey as well as a few friends, all of whom gave some useful feedback, I submitted my poster for printing. The poster has been printed and it looks clean and everything is visible rather easily. There is nothing that really needs to be done on my app. I will continue to try to work out bugs but functionally, everything is where it needs to be. -01001101 01101001 01101011 01100101 With the Research Forum coming up next Friday and the need to get a poster put together I decided to hunker down and get my application completed. My application does everything that was discussed in previous blog post in a fairly clean intuitive UI. The first step of implementing the application was creating a new activity inside of the Convex Hull app where the application would be housed. This activity is very similar to the view map activity in that it has a map fragment and a combo box to select a data set: I then generated a rather large (150+ coordinate) latitude and longitude file with randomly generated latitude and longitude coordinates representing random addresses for infected persons. I did intentionally weight the Green Bay area more heavily so I could show how the weighted center would work. Once I have the data file it is a matter of displaying the convex hull with the hospitals inside of the convex hull: So the convex hull is the usual convex color. I notice that some of the randomly generated latitude and longitude points end up in a lake or other body of water, but I am not going to worry about this because it doesn't affect what the application is trying to do but if I had actual addresses, the convex hull would not have vertices in the water. Inside of the convex hull there are several red markers. Each of these markers represents a hospital. The hospitals are obtained by querying Google Places for hospitals within a certain radius of specific point. I use the max distance from the center of the convex hull (non-weighted center) to the vertex. When you query this way, you are going to get results outside of the convex hull. I do not want this so I simply filter them by checking if the latitude and longitude coordinate for a specific hospital lies within the convex hull. If it doesn't I simply discard the result.' For every marker on the map, I create an entry in a list view underneath the map view. Each entry in the list view has three pieces of information for the hospital (name, address, and distance from the weighted center). The distance from the weighted center is the most important piece of information ranks the hospital in terms of the likelihood of having to care for disease victims. I order my list from the closest to the furthest from the weighted center. If any item is selected from the list I select the marker on the map fragment which will show where it is on the map: With this application now complete, I will go ahead and create the poster for the Research Forum. I will continue to debug and tweak the app but the majority of the work has been done.

-01001101 01101001 01101011 01100101 This week was a bit of rollercoaster. It began with meeting with Dr. McVey to discuss the abstract for the research forum. The research form is coming along. This week I am planning on getting the poster done and sent to Dr. McVey and Dr. Pankratz for some feedback before sending it to get printed for the research forum on April 12.

Walkthroughs were this week as well. On Thursday I walked through what I have so far. When I was putting together the slides for this week I began to think about how the poster is going to be laid out for the research forum. The walkthrough went decent and I feel like going back through and explaining my app I will be in a good place. One piece of feedback that I intend on looking into is the "the disappearing" locations as the map zooms out. I know why it happening because I am using a circle with a radius of 10 meters (to the scale of the map). If the map is zoomed out to show a convex hull with sides that span miles, 10 meters is not going to appear. However, often these convex hulls are smaller so 10 meters might be alright. One possible solution might be scaling the circles based on the area of the convex hull. This week I also decided on an application. For the application I was thinking of creating a hypothetical outbreak of a disease. This outbreak will span several miles and have different density of persons with disease throughout the map. This is how the application will work:

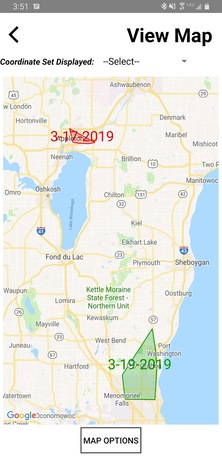

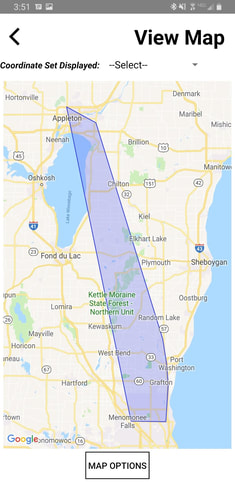

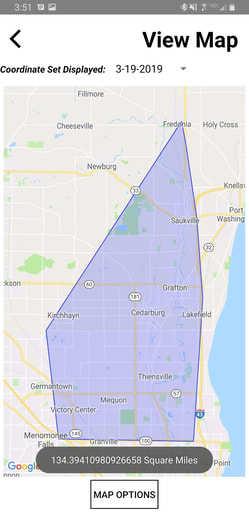

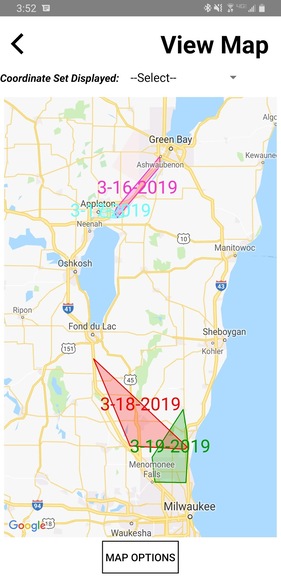

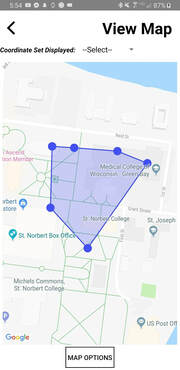

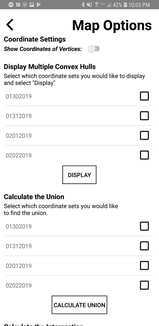

To find the hospitals I will be using Google Places. Google places is an API that works in conjunction with Google Maps to find places of a particular category (fast food, grocery store, church, hospital, etc..) given a particular location. I have the Google Places portion working as I am able to pull in all the places of a given type (i.e. hospital) within a given location. I am currently using my current location to pull in all the hospitals within 10 kilometers (I will eventually use the longest distance from the center of the convex hull to a vertex). I will still need to make sure the found hospitals are within the convex hull but that should not be too difficult. -01001101 01101001 01101011 01100101 I am writing this towards the end of spring break. Last weekend and most of this week was a chance for me to finally catch my breath and to relax for a bit. I got back to working on my app yesterday and have made major strides. The first thing that I implemented was the union of N convex hulls. The union of two convex hulls is not guaranteed to be convex so it is necessary to recompute the convex hull once the points from the two sets has been combined. In comparison to intersection, finding the union of convex hulls is rather easy. Lets go to pen and paper and see what the Union of two convex hulls should look like. Let's say we have these convex hulls (notice the labels placed over them...something I added this time around): Now if we go to pen and paper, the black crossed section represents what the union should be: When the app calculates the union, it gets this: That looks accurate. The second feature I implemented was the area feature. One of the project requirements was to be able to calculate the area of the convex hull. I made it so when the user taps the polygon anywhere besides a point it will display the area of the convex hull. The convex hull's area appears in a Toast message at the bottom of the screen. Here is a screenshot of what the area looks like: Another of the project requirements was to be able to display multiple convex hulls each with a different color. This was working fine last week but it was bugging me that there was no way to tell which convex hull was which besides for the difference and color. Even then, the color had nothing to do with the name of the convex hull. For this reason I decided to label the convex hulls. I implemented a labelling feature for the convex hull when there is more than one. The labels are the same color as the convex hull which it is labeling and will grow and shrink as the map is zoomed in and zoomed out. I use the center of the convex hull that I calculate when I am attempting to center the convex hull when the user selects it to place the label on the map. It often goes outside of the convex hull. This is because the center of the convex hull might not appear to be the center of the convex hull due to the irregular shapes.

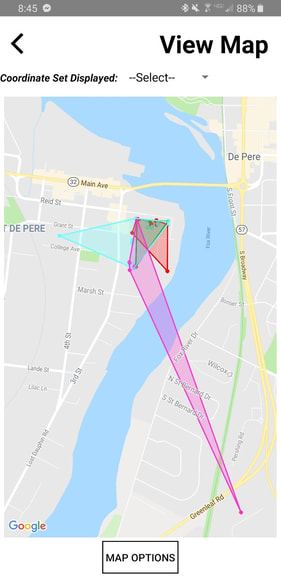

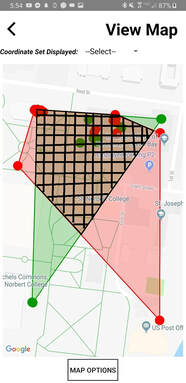

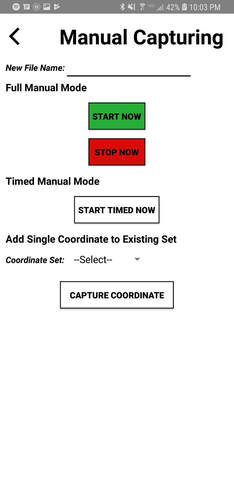

The final thing I accomplished this week was finishing implementing the time system. I had implemented it so that timers would automatically start and end but I also had decided to make it so that the user would be able to start an indefinite recording (it records at a specified interval until they manually stop it), a timed interval that can be started at any time, and a feature where the user could add a single point to a given convex hull. The app is pretty much wrapped up besides for the application. I need to come up with one in the near future so I can have it ready to go for the research forum coming up on April 12. I will need to run some ideas by Dr. Pankratz and Dr. McVey before I start implementing any applications. Overall, I am very happy with where my app stands as of now. It is cleaner than I thought it was going to be at the start and it is snappy. It still has a few bugs to work out but with a bit of time until the Capstone presentations, I have plenty of time to try to resolve these bugs. Now it is time to enjoy some of the warm weather we have been blessed with this week! -01001101 01101001 01101011 01100101 Even though I implemented the algorithm to display several convex hulls at once last week, there was one obvious issue: lack of color. All the convex hulls would be displaying in the same, boring blue. Not only does this make the app seem dry it also makes it hard to see what is with each convex hull. I personally was getting irritated by this and knew that the app would be more user friendly with different colors for each hull. Displaying multiple convex hulls with a different color for each hull was also a project requirement. The implementation of different colors was actually quite simple. When I build my convex hull class I made sure to include the possibility for a convex hull to store which color it was. The only modification to the code that needed to be made was the inclusion of a color file which holds the possible colors for the convex hull. This color file is an xml file and it currently holds nine colors. Thanks to Dr. McVey, the reading in of the colors and storing of them into an array was rather straightforward. Now when I generate the convex hulls to display, I simply assign each one a different color. The array that I place the colors in also holds a boolean which marks whether the color has been used to ensure no color is reused. The colors work very well. Here is a screenshot of when I displayed four different convex hulls. Every convex hull as its own color. The next implementation for the week was a bit more difficult, the intersection. The intersection is difficult simply because we need to find the overlap between the two convex hulls. This is computationally heavy if it isn't done right. Implementing this from scratch would have taken several weeks. Thankfully I found an open source library to help. The JTS Topology suite is a top notch open source external library for Java (and so for Android as well) which enables points to be passed to what it calls a Geometry (think polygon). If there is more than one geometry it is possible to find the intersection between these geometries. This intersection returns the geometry of the actual intersection of the two convex hulls. Alright let's talk about the problems encountered. I store my Latitude-Longitude coordinates in a custom coordinate class that I created. JTS wants to use their coordinate class and so it is necessary to switch my coordinates to JTS coordinates. This isn't bad but it is still a bit of computation time. Once these points are in JTS it is necessary for the polygon to be created. However, it is important to remember that this polygon is a convex hull otherwise the intersection found will not be correct. Thankfully JTS has a function that will turn one of its polygons into a convex hull. Next problem is that JTS will create a in house polygon representing the intersection. This is not a problem until one considers how we will get this polygon from a JTS polygon to our convex hull's polygon so that it can be displayed on the map overlay. I was able to find a way to extract the vertices and so it is possible to construct the intersection and display it on the map. Let's see a few examples of how I am confident that I have successfully implemented the intersection calculation. Let's take these two convex hulls (beautifully distinguishable thanks to the added bit of color): Now let's find the intersection on pen and paper. The region with the black cross pattern indicates what the intersection should be. This is what the app got using JTS's algorithm: Looks accurate to me. I was originally concerned about the lack of coordinates inside of the hull. But upon closer examination of the original display of two convex hulls show that no red points are directly on top of green points. This means that this point should not be in the intersection. Let's do an example with more than two. I implemented it so that my app has the ability to compute the intersection of N convex hulls. Let's take these three convex hulls: Let's go back to pen and paper. The black crossed region again indicates the intersection that we should be expecting. The app computes the following intersection: That is the correct intersection.

This means that I have successfully implemented the intersection requirement for the application. Looking at the Gannt chart would indicate that this should have been done by the end of week 9. This means that I am two weeks ahead of schedule. However, a closer look means that I should also have the union algorithm completed. This should be easy compared to intersections. I decided to do intersection first and plan on getting unions done in this next week. As to area I also believe that this will be easy as the Google Maps API provides a function to calculate the area of a polygon. I also intend to implement this later this week. I am very happy with where my app stands and look forward to attempting to come up with some application that utilizes this apps functionality. -01001101 01101001 01101011 01100101 Well this is interesting. Yesterday I believed that my fix was due to giving the map time to finish loading. Nothing could have been further from the truth. My solution had used one of the most powerful tools in the Android developer toolbox and I had brushed it off and downgraded it's power to a simple wait.

Let's back up for a second. Yesterday's post had me confused as to the real reason why convex hull was not being plotted on the map. It had nothing to do with the map being a 100% loaded. The problem was rather the fact the onMapReady callback function was not being executed on the main thread. The main thread is critical because it is the only thread that can make UI changes. Something like drawing a polygon will only take effect if it was drawn on the UI thread. So the new solution? There is really nothing different from my last solution besides I took away the delay. A handler is a powerful android tool. It is an object that forces the task inside of it's run function to be executed on the main thread. This is crucial anytime UI changes are being done, including tasks such as drawing a polygon on the map. While I thought my initial solution had the answer because of a wait, my initial solution actually had the answer because it was forcing itself to be on the main thread. The only change I made was to remove the delay parameter from the function call. Now the processConvexHull function call is executed on the main thread as soon as the message queue of the main thread receives the message. This is why it is always necessary to understand the implications of the code that you are writing. In other words, read the documentation closely. Had I done this originally I would have known why exactly my solution was working -01001101 01101001 01101011 01100101 I did not get as much work done this weekend as I had hoped. I mostly worked on getting multiple convex hulls to display. I managed to get multiple convex hulls to display. However, I was encountering a weird bug where the camera would zoom to where the convex hull should be but the polygon that represents the convex hull was not being drawn. I spent most of this week attempting to figure this out. I finally figured it out. The problem was the map was "loaded" but not completely loaded. This means that actions such as zoom is permitted but not actions such as placing a convex hull. Once I added a half a second delay to let the map finish loading the convex hull appeared magically.

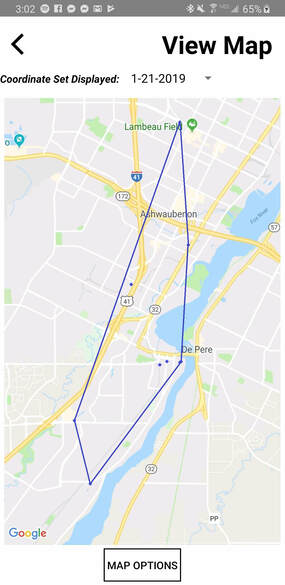

I find that bug fascinated because I was handling everything from within the onMapReady callback function. I really feel like the onMapReady callback should not occur until the map is fully ready...including for placing polygons. I am currently multiple convex hull colors working. This way, when multiple convex hulls are displayed, it is clear as to which convex hull a location belongs too. -01001101 01101001 01101011 01100101 This week was a monster week in terms of moving the app forward. At the start of the week the app was able to pretty much capture locations in the background and write them to the file. The only other thing the app could do was show the current location on the map. Having a bit of free time after my three exams this week allowed me to buckle down and focus on my app. This past week saw a convex hull algorithm researched and implemented, a means of displaying the convex hull on the map, building of a convex hull class and the ability to view the coordinates of any point within the convex hull. Each of these aspects has quite a few moving parts inside of it. Convex Hull AlgorithmOne of my initial concerns at the start of this project was the actual construction of the convex hull. Capturing locations and all the settings in the world would mean nothing without the ability to construct the convex hull out of these points. I needed an algorithm that could take a sorted collection of points that have been read in from a file and then turn this into a convex hull. Early on I decided that I would store the vertices in their own collection to distinguish them from the non-vertice points. After a few hours of googling I fell upon what is known as Andrew's Monotone Chain Convex Hull Algorithm. Don't let the long name intimidate you as this is a pretty simple algorithm. The algorithm constructs the convex hull in three parts. The first part of the construction is sorting the points being considered. The points will be sorted in ascending order. This means that the point with the smallest longitude should be first in the list. If there is a tie on two value's longitude value, the latitude is used. Recall that the latitude-longitude system is nothing more than an XY plane. The longitude is the x value and the latitude is the y value. In more explicit terms, as (x,y) represents a point on an XY plane, (longitude, latitude) represents a point in the latitude-longitude system. For sorting, many options were considered including a heap. However, the most effective method proved to be Java's sort function for an array list. The sort function calls a "compareTo()" function which enables the arraylist to be sorted in whichever way necessary. The second part of the construction is the building of the upper hull. Since the list of points that was sorted has the smallest longitude at the front and the rest of the list in ascending order, the convex hull's construction starts on the far left, with the smallest longitude value. A sub list is created where the vertices for the upper hull will be placed. If there are at least two points in the sublist, the last two points are checked along with the proposed addition to ensure that the three points produce only clockwise turns. If this does not happen, last point of the sublist must be removed and the process must be repeated. This process is repeated until the list of points has been run through until the end. This has only constructed the top half of the hull. The third part does much the same thing as above as it attempts to build the lower hull. The difference is that this part begins at the end of the list of points and works backwards doing the same checks to ensure that only clockwise rotations are being done. Once it gets to the front of the list, the bottom part of the hull has been constructed. Adding the upper and lower hull coordinates will produce all the vertices for the convex hull. The code that was used as a basis for the application can be found here. Here is an visual that I found that helps describe how the algorithm works: Convex Hull ClassThe convex hull class essentially contains two array lists. The first array list holds all the vertices. The points in the list are held in another class that was created which I called coordinates. Coordinates simply contain two doubles, one for latitude and one for longitude. The second array list holds all the points in the convex hull as well as the vertices. The convex hull class has the algorithm for constructing the convex hull Displaying the Convex HullDisplaying the convex hull is where the rubber meets the road. The process begins by building the convex hull using the algorithm described above. Now say that we have a set of captured points that looks like this on the map overlay. The little blue points represent the locations that should be used to construct the convex hull. Now let's go to pencil and paper. If we were to construct the convex hull manually, the points should look like this: Now what did the app do? This is what the app did: The visual is created in three layers. The first layer is what is known as a polygon. It takes n vertices as input and constructs a polygon out of it. For this I passed the vertices calculated internally in the convex hull class. Notice that there are "dots" still on the map. Every coordinate, vertice or otherwise, retains its dot and is added as the second layer. The coordinates are the second layer to enable onclick handling. I enhanced the experience by enabling the user to select any coordinate from the convex hull's set, vertice or otherwise, and the app will display it's longitude and latitude: Overall, this was huge week for capstone. If one refers to my Gantt chart, I was not expecting to be at this stage until the end of week 8. This is good because it gives me time to refine the process and add more features.

-01001101 01101001 01101011 01100101 Well this post is not what I had hoped to be posting about. Due to an exam last week and 3 exams to start this coming week, I have not been able to put much work into the project. On Monday I talked to Dr. McVey about a recurring issue where the app would crash sometimes while attempting to pull in the most recent location. This was due to the accuracy of the location request not being able to be within the defined accuracy. The app would crash consistently if the location was requested while driving on the freeway. For this reason, the location is now captured using what is known as a LocationRequest. Unlike the old approach which would freeze the UI and request locations on a loop until it was within the defined accuracy, the LocationRequest asks for the location and the request is performed on a different thread. Once the location has been pulled in it will call a callback function. The app has yet to crash and the location is usually decently accurate except for on the freeway where it has a tendency to be off to the side of the highway, oftentimes at a place of business typically found on Google Maps. After Tuesday I hope that I can button down and crank out the building of the convex hulls from the location data. -01001101 01101001 01101011 01100101 Perhaps a bit overdue, but the first thing that was done this week was the saving of setting choices to a shared preference. This means that when a user selects the interval in which an app will capture information, it will be saved to what android calls a "SharedPreference." A shared preference is essentially a small file that can be used to save small amounts of data such as user preferences.

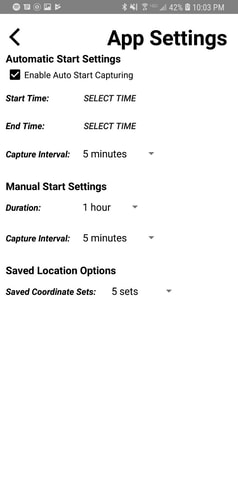

The second feature I implemented is a timer system. The timer system is currently only implemented for the automatic start location capture. This means that the app will start to record locations at the specified time and continue to record locations at the given interval until the specified end time has elapsed. The feature works great and has always gone off at the correct time. In conjunction with the timer system, I made it so the timer system captures the location at the end of an interval and places the latitude and longitude of the location into a text file. Looking at the file after a period of recording showed that the latitude and longitude have been written correctly. This effectively means that the coordinate sets are capable of being recorded. This is the first major milestone. All this progress was not without some growing pains. I was originally using Android's recommended FusedLocationProviderClient interface. This was working great initially because it appeared to capture the correct coordinates. However, I then discovered a problem where if the user had not turned on the phone for the entire length of the interval, no apps had updated the location. The problem with FusedLocationProviderClient is that it does not update the location itself but requests the last recorded position. This is usually fine for applications that are not attempting to run in the background because information such as location are updated fairly frequently when the screen is on. But to get the location in the background was a bit of an adventure. I ended up deciding to access the network and GPS components of the device manually and requesting an update to the coordinates. This requires using what is known as a LocationManager object. Something I learned the hard way is that when a device first requests a location, it needs to triangulate. This means the LocationManager object needs some time to get the location accurate. Android conveniently allows the app to check the accuracy of the location and wait for the location to get more accurate. I wait for the location to be within 25 meters of accuracy. That does it for progress this week. I am still on pace to make the milestones for Coordinate Sets and Capture option implementation. -01001101 01101001 01101011 01100101 This is not really a blog but more of a side note. As of today I have added the Gantt and PERT charts to the Project Description page. Check it out to see my plan of attack for getting this app ready for presentation day.

-01001101 01101001 01101011 01100101 It has only been a few days since my last post but I figured that I would begin the habit of posting every Sunday night. No major changes have occurred since my last post. I implemented a rough draft of location capture. This required requesting permission from the user to capture the location via the GPS sensor in the phone. I decided to go against the Google Map API's default marker as this does not scale based on the zoom. This would make it hard to see the convex hull as the map markers would cluster together and distort the shape of the polygon. Instead I have decided to use what is known as a GroundOverlay. Part of Google Map's default API, GroundOverlay is better suited for my app as it supports auto zoom. Google's official documentation can be found here. The GroundOverlay allows the app to use whichever image to represent the overlay, as of now I am using a default location marker although this will likely change to a standard dot, much like Google Maps uses in its Android application: In terms of what my application looks like currently, this is what it looks like when I open up the Map activity: It first finds the current location and then marks this on the map using a GroundOverlay object. The big thing is when the user zooms in on the application, the GroundOverlay "marker" will adjust based on the zoom: If the user zooms in on the same location above, the marker will also "zoom" in: The other thing that I have done in the past couple days is look into algorithms for creating a convex hull out of points. I still need to do more research on this before I can describe a few of these. My next step will be to save the settings to a file and to set up the auto capture of the coordinates.

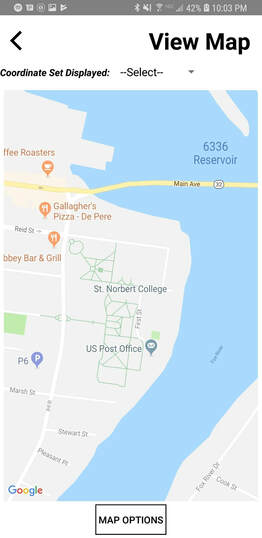

-01001101 01101001 01101011 01100101 As the temperature continued to fall outside, I began to put together the finishing touches to my UI. Building the UI before implementing logic helps me to sort out what I need to accomplish and how I plan on accomplishing this. No part of the UI I just "completed" is set in stone and it is likely that this will change as the logic behind the scenes is implemented. First what exactly is the UI meant to do? The UI is meant to enable users to capture GPS coordinates, store them, and then view the convex hull of these data sets on a map overlay. Additionally the app should be able to calculate the intersection and union of selected sets and calculate the area of the convex hull. Think of a convex hull as a collection of points that is within a polygon so that a line can be drawn between any two points without any part of that line leaving the polygon. Imagine these points as tacks in a bulletin board. Now a convex hull would be the same as taking a rubber band and placing it around the outer tacks. That is all a convex hull is. Here is an image to help clarify: Now that math class is over for the day let's get into what I have accomplished so far. I have decided to build the app for Android. Based on this decision, Android Studio has been updated and a project has been created in it. I have also decided to create an online GitHub repository to serve as version control and a backup in case anything happens to my computer. The repository can be found here. (NOTE: This will remain private until after presentations. I am more than happy to show people how I did something but I do not want someone to copy parts of my app.) Following are some screenshots of where my application is at in terms of UI. This is the main menu. Nothing special as it just serves as a means to get to other parts of the application. It sums up the major capabilities of the app: capturing locations (including an automatic start option), viewing the locations as a convex hull on a map, and a few utilities. If the user selects Manual Location Capture it will bring them to this page: The manual capturing screen essentially has 3 functions. The first function is to enable the user to start a location capture period at any time and to end it whenever they desire. This is the Full Manual Mode. The interval of location capture for this mode is defined in settings. Having the ability to start a location capture period and have it end after a specified amount of time is the second option which is labeled Timed Manual Mode. This means the user can start to capture locations now and the app will stop after the specified amount of time. Both the duration of this capture period and the frequency of location captures are defined in the settings screen. The last manual mode on this page is the Add Single Coordinate to Existing Set option which enables the user to capture a single location and add it to an existing coordinate set. The settings page allows a few odds and ends to be set by the user. Automatic Start Settings is the most important section on this page as it allows users to define a start time and an end time for the app to start capturing locations on a daily basis. This means the user doesn't ever have to remember to start recording locations. The user can also set the interval in which these locations will capture. Manual Start Settings is where the user will be able to set how long the "timed" captures will last for as well as the location capture interval for both the fully manual captures as well as the timed manual captures. Finally, Saved Location Options will enabled the user to determine how many coordinate sets should be kept on their device. I have given them a range from 5 to 100. The most visually exciting page, this page enables the user to view a convex hull on a map overlay. I have decided to implement a Google Map "fragment" into the UI so that users have the ability to zoom and scroll to view the points however they like. How easy it will be to build convex hulls in google maps is yet to be determined. Right above the map the user has the ability to select which convex hull they wish to view on the map. The user can also view a few more map options by selecting the "Map Options" button on the bottom of the screen. It will bring them to this page: This page is a scrolling page so not everything fit on this single screenshot. This page gives the user ability to select several coordinate sets and then display these as convex hulls on the map. Calculating the union or intersection of the coordinate sets the user selects can also be accomplished on this page. The option to calculate the area of a convex hull is also on this page. Finally for a bit of aesthetic appeal I decided to develop a custom logo for the application. This does not really affect anything but I think it looks cool on the screen.

There was a lot information packed into the post but several initial decisions have been made. Keep in mind, although the UI is fully functional in terms of navigating through pages and setting options that is all the app does now. My next step is to implement the location capturing. If you have any questions please feel free to comment. I plan on periodically checking the comments on this page -01001101 01101001 01101011 01100101 |

|