Blog Posts

4/16/2024

When I first made this program I relied only on x coordinates. As I continued to build it I added some y coordinate checks, but these are still minimal compared to the large number of computations and checks based on x coordinates. I expected this to cause trouble in videos with more vertical movement, but surprisingly it didn’t. I did have to add some y filtering to my BestFitPlayer function, but only a small amount. I asked Dr. McVey whether I should spend the days required to implement additional y coordinate checking and cleaning, but since the program runs so well on the right kind of videos, we decided not to. More y checks would greatly increase the complexity of BestFitPlayer, and there is no guarantee they would help at all given that things already work well.
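A minimal sketch (not the actual BestFitPlayer code) of the kind of x-based direction check with a small y filter described here, assuming each player’s history is a list of (x, y) centers. The names `movement_direction`, `candidate_players`, and `max_y_gap`, the 40-pixel y tolerance, and the choice to let static players accept coordinates on either side are illustrative assumptions.

```python
from typing import Dict, List, Tuple

Point = Tuple[int, int]  # (x, y) center of a player's bounding box


def movement_direction(history: List[Point], window: int = 10) -> int:
    """Hypothetical helper: 1 = moving right, -1 = moving left, 0 = static.

    Only frames where the position actually changed are considered, so
    repeated coordinates (dropped detections) don't make a moving player
    look static.
    """
    moving: List[Point] = history[:1]
    for point in history[1:]:
        if point != moving[-1]:
            moving.append(point)
    recent = moving[-(window + 1):]  # positions spanning the last `window` moves
    if len(recent) < 2:
        return 0
    dx = recent[-1][0] - recent[0][0]
    return (dx > 0) - (dx < 0)


def candidate_players(players: Dict[int, List[Point]], coord: Point,
                      max_y_gap: int = 40) -> List[int]:
    """Hypothetical helper: ids of players whose path could contain `coord`."""
    x, y = coord
    candidates = []
    for pid, history in players.items():
        last_x, last_y = history[-1]
        if abs(y - last_y) > max_y_gap:  # the small y filter
            continue
        direction = movement_direction(history)
        # "In the path": the new x lies on the side the player is heading.
        # Static players (direction 0) accept either side (an assumption).
        if direction == 0 or (x - last_x) * direction >= 0:
            candidates.append(pid)
    return candidates
```

If exactly one id comes back, the coordinates go to that player; two or more ids is the ambiguous case mentioned in the 3/19 post.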

While testing various videos I have discovered that my code doesn’t work perfectly “right out of the box.” Some trial and error is needed to configure the frame resize, MAX_DISTANCE (the maximum distance, in frame coordinates, a player is allowed to move per frame), and possibly a few other small settings. This is expected, since no two videos are the same. I will document these findings for future users of this code. To the best of my knowledge, my code works effectively once these settings are tuned and the video meets the right parameters.
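A minimal sketch of how MAX_DISTANCE can gate on-the-fly id assignment with a player dictionary of lists (the approach described in the 4/13 post). The value 30, the function name `assign_frame`, and the greedy nearest-player matching are assumptions for illustration. Because MAX_DISTANCE is measured in the coordinates of the resized frame, changing the frame resize also changes what a sensible MAX_DISTANCE is, which is one reason the two have to be tuned together.

```python
import math
from typing import Dict, List, Set, Tuple

Point = Tuple[float, float]

# Hypothetical value: max distance (in resized-frame pixels) a player may
# move between frames. Tune per video along with the frame resize.
MAX_DISTANCE = 30


def assign_frame(players: Dict[int, List[Point]], centers: List[Point],
                 max_distance: float = MAX_DISTANCE) -> Dict[int, List[Point]]:
    """Append each detected center to the nearest player's list, or create a new id.

    Matching is greedy: centers are handled in order and each player can
    receive at most one center per frame.
    """
    used: Set[int] = set()
    for center in centers:
        best_id, best_dist = None, max_distance
        for pid, path in players.items():
            if pid in used:
                continue
            dist = math.dist(center, path[-1])
            if dist <= best_dist:
                best_id, best_dist = pid, dist
        if best_id is None:  # nobody close enough: treat it as a new player
            best_id = max(players, default=0) + 1
            players[best_id] = []
        players[best_id].append(center)
        used.add(best_id)
    return players
```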

Now I am cleaning up my code, updating my website, finding more videos that work well, testing the code and UI overall, and planning my presentation.

4/13/2024

Stopped using the EDT:

I have stopped using the Euclidean Distance Tracker (EDT) to assign coordinates. The EDT wasn’t working well: it assigned too many ids and didn’t maintain them throughout the video. In a 29-second video it assigned close to 70 ids to the 5 players, which made it hard to know whether my data-cleaning code was doing an accurate job. Instead of feeding the center coordinates from OpenCV to the EDT, I now send them to my own id-assignment code, which is almost exactly the same as my clean_datafile.py code. It uses a player dictionary of lists and assigns ids on the fly, based on which player’s list the coordinates are added to. This works well and doesn’t slow things down too much. Even if it did, it wouldn’t matter much, because the coordinates with the updated ids still need to be cleaned afterwards (this is not the final product). Since the coordinates will still be sent to a file for cleaning, I don’t need perfection; I just need to limit the number of useless ids. Now in the 29-second video only 7 ids are assigned to the 5 players (I’m very happy about that).

Updated plan for cleaning datafile code:

The cleaning code will take the coordinates with the updated ids and make sure every coordinate is assigned to the correct player. I can trust the ids more now since they are much more accurate, which gives me hope that I can assign coordinates to the correct player more accurately.

Path in GUI:

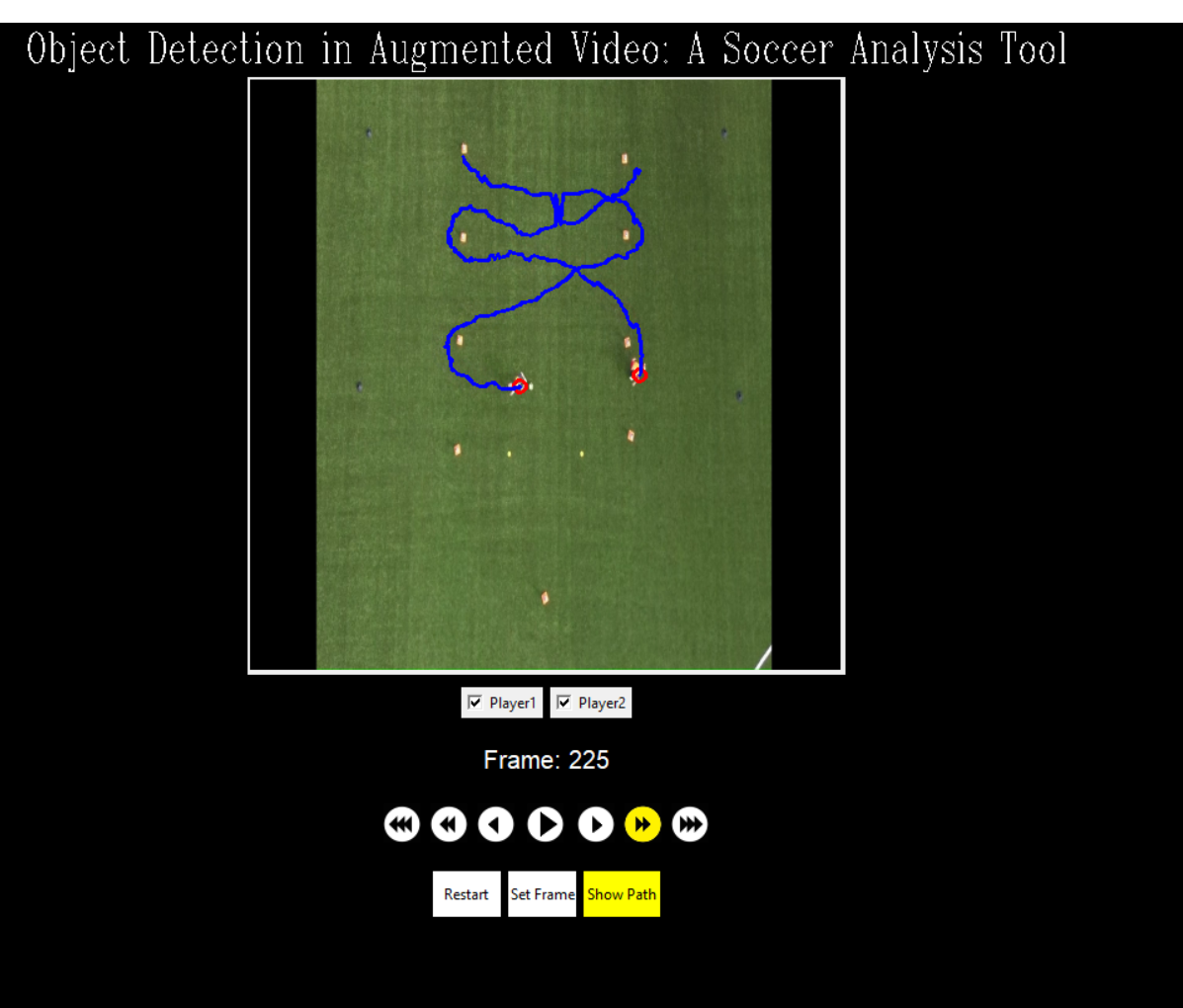

I have implemented a way to show the path a player moves along in my GUI. I also added a button that lets users toggle the path of the tracked player(s) on and off.

4/3/2024

- Shows the video in a centered box
- Fast forward, normal speed, slow-mo in forward and reverse
- Checkboxes for all the players from datafile
- Counter showing the current frame number
- Restart video button
- Set frame button that only allows valid input
- The beginnings of video tracking and augmentation

This GUI works really well because I found a way to put all of the video frames in a list. This lets me loop forward and backward and jump to any frame I want. I also have the player_dict, which stores every player’s coordinates at every frame. I have added a BackFill function to clean_datafile.py that fills in coordinates for every player at every frame, even when they aren’t moving. This means the player lists in player_dict are exactly the same size as the list of video frames. I use a frame_count that increments every frame, so frame_count works both as an index into the frame list and as an index into each player list, giving the coordinates at that exact frame.
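A small sketch of the indexing idea: once every player list has one entry per frame, the same frame_count indexes both the frame list and each player list. `back_fill` is a hypothetical stand-in for BackFill that assumes the missing entries are the frames before a player was first discovered, so it pads the front of the list with the first known position. Plain strings stand in for video frames in the example.

```python
from typing import Dict, Iterable, List, Tuple

Point = Tuple[int, int]


def back_fill(player_dict: Dict[int, List[Point]], num_frames: int) -> None:
    """Hypothetical BackFill: pad each list at the front with its first known
    position so every player has exactly one entry per video frame."""
    for pid, coords in player_dict.items():
        missing = num_frames - len(coords)
        if coords and missing > 0:
            player_dict[pid] = [coords[0]] * missing + coords


def coords_at(player_dict: Dict[int, List[Point]], frame_count: int,
              selected: Iterable[int]) -> Dict[int, Point]:
    """Coordinates of the selected players at one frame."""
    return {pid: player_dict[pid][frame_count] for pid in selected}
```

In a GUI loop, `frames[frame_count]` would be the image to display and `coords_at(player_dict, frame_count, checked_ids)` the positions to draw, where `checked_ids` (a hypothetical name) holds whatever the player checkboxes select.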

Things to add to GUI:

I want to add a help button that explains how to use the GUI and any other important information the user may need to know. I am also considering adding a “show id” button that shows all player ids when clicked.

3/26/2024

In addition to those problems, I am looking into a way to create the augmentation: bounding boxes around the players being tracked and a line that follows their path. Dr. McVey and I think this will be a layer on top of the video that uses the datafile’s cleaned coordinates to draw them. I don’t know yet how this will work, but I do know that I will have a datafile with the players’ coordinates, so this layer will need to read from that file and augment the video accordingly. I also don’t know whether this layer will be able to provide the UI or whether I will need something else for it.
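One way such a layer could turn cleaned center coordinates into boxes: look up each tracked player’s center at the current frame and build a fixed-size rectangle around it. The box size, the function name `overlay_boxes`, and the assumption that the datafile has already been loaded into a dictionary with one (x, y) entry per frame for each player are all hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

Point = Tuple[int, int]
Rect = Tuple[Point, Point]  # (top-left, bottom-right) corners


def overlay_boxes(player_dict: Dict[int, List[Point]], frame_count: int,
                  tracked_ids: Iterable[int], box_w: int = 30,
                  box_h: int = 60) -> Dict[int, Rect]:
    """Fixed-size box centered on each tracked player's position at frame_count."""
    boxes = {}
    for pid in tracked_ids:
        cx, cy = player_dict[pid][frame_count]
        top_left = (int(cx - box_w / 2), int(cy - box_h / 2))
        bottom_right = (int(cx + box_w / 2), int(cy + box_h / 2))
        boxes[pid] = (top_left, bottom_right)
    return boxes
```

Each pair of corners matches the `pt1`/`pt2` arguments of `cv2.rectangle(img, pt1, pt2, color, thickness)`, so the drawing step could be a loop over the returned dictionary.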

3/23/2024

Player is moving right to left (assigned a direction of -1):

Player stops moving and changes his direction (now direction should be 1):

Player begins to move and starts to be tracked again:

The player’s change of direction isn’t picked up, so these coordinates won’t be added to the player:

3/23/2024

Note:

Even though I am not using the ids to help assign coordinates, I am still using them for debugging purposes. They make it easier for me to see what is happening on the backend of the program.

3/19/2024

Updating frames for all players:

I have fixed the player dictionary so that every player already found is updated each frame, regardless of whether new coordinates were assigned to them. This means all players are up to date with the most recent frame number at all times. It does not mean that all player lists are the same size, because I don’t fill in a player’s coordinates before they are first discovered (there’s no reason to, since they weren’t discovered yet). I accomplished this with an InitializePlayer function that adds a new entry to each player list, copying that player’s previous entry. Then, when DeterminePlayer decides who the coordinates belong to, it replaces the last entry in that player’s list with the updated coordinates (if the player doesn’t exist yet, it starts a new list).

Interpolation addition:

I have completed an interpolation function that fills in coordinates when there is a gap in the tracking of a player. A gap shows up as a run of identical entries; the function replaces them with the player’s estimated movement so the coordinates don’t have a “jumping” / “teleporting” effect. This function works very well.

BestFitPlayer update:

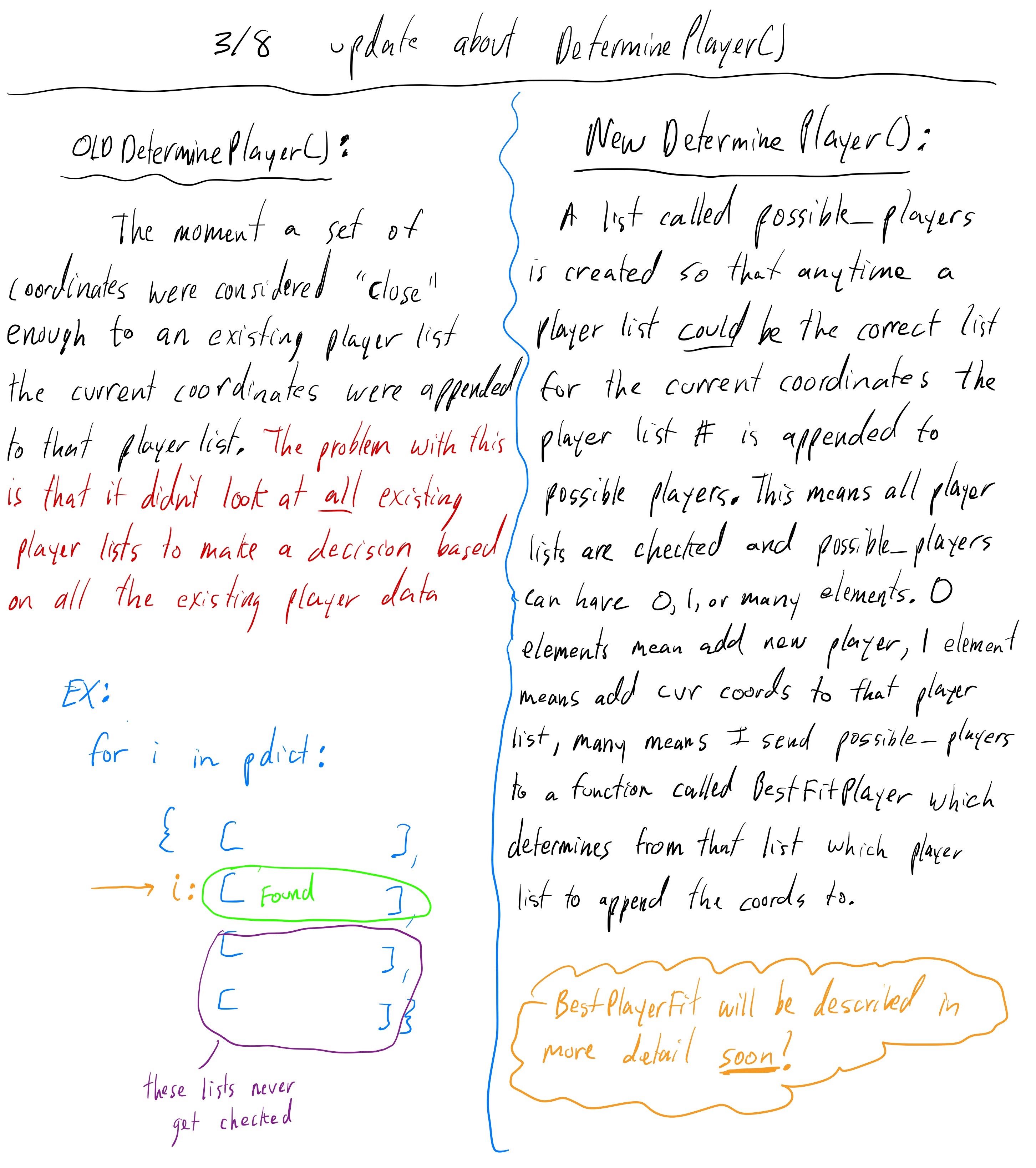

The BestFitPlayer function previously used slope to determine the correct player. This wasn’t effective because of OpenCV’s inaccuracy: a player’s slope was usually wrong because the bounding boxes OpenCV produces bounce around randomly. In short, slope was too precise a measure for imprecise tracking. Now I instead determine the direction a player is moving from the last time they were actually moving. This distinction matters: I don’t want the last 10 frames of a player, I want the last 10 frames in which the player was moving, which tells me much more about how they are moving. Sometimes the OpenCV coordinates cut out and make a player look static when they are actually moving; using the last time the player moved avoids this error. Each player is given a 1, -1, or 0, meaning moving right, moving left, or static, respectively. If a player is moving in a specific direction and the coordinates lie in the path of that direction, I consider that player a possible candidate for the coordinates. If only one player is possible, I know it is the correct player and append the coordinates to it. As of right now I don’t have a good way to decide where the coordinates belong when two or more players are possible. More to come on that.

3/8/2024

An initial concern I had with this idea was: what if the player moves irregularly, I make an incorrect guess, and the bounding boxes end up way off the player? DCP assured me this won’t be a big problem, because even a 20-40 frame gap in the tracker is only about half a second in real time. A player physically can’t move very far in that time, so errors in my guesses at their location during the gap may be almost imperceptible.
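A minimal sketch of filling such a gap by linear interpolation, in the spirit of the interpolation function described in the 3/19 post. It assumes a gap appears as a run of repeated (x, y) entries in a player’s list and spreads the movement evenly between the last position before the run and the next new position. It cannot tell a dropped detection from a player who is genuinely standing still; a fuller version might only interpolate when the jump after the run is large. The function name is hypothetical.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def interpolate_gaps(coords: List[Point]) -> List[Point]:
    """Replace runs of repeated coordinates with evenly spaced points that lead
    to the next new position (linear interpolation)."""
    out = list(coords)
    n = len(out)
    i = 0
    while i < n - 1:
        # Find the end j of the run of entries equal to out[i].
        j = i
        while j + 1 < n and out[j + 1] == out[i]:
            j += 1
        if j > i and j + 1 < n:  # a run followed by a new position
            (x0, y0), (x1, y1) = out[i], out[j + 1]
            steps = j + 1 - i
            for k in range(1, steps):
                t = k / steps
                out[i + k] = (round(x0 + (x1 - x0) * t),
                              round(y0 + (y1 - y0) * t))
        i = j + 1
    return out
```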

3/1/2024

2/29/2024

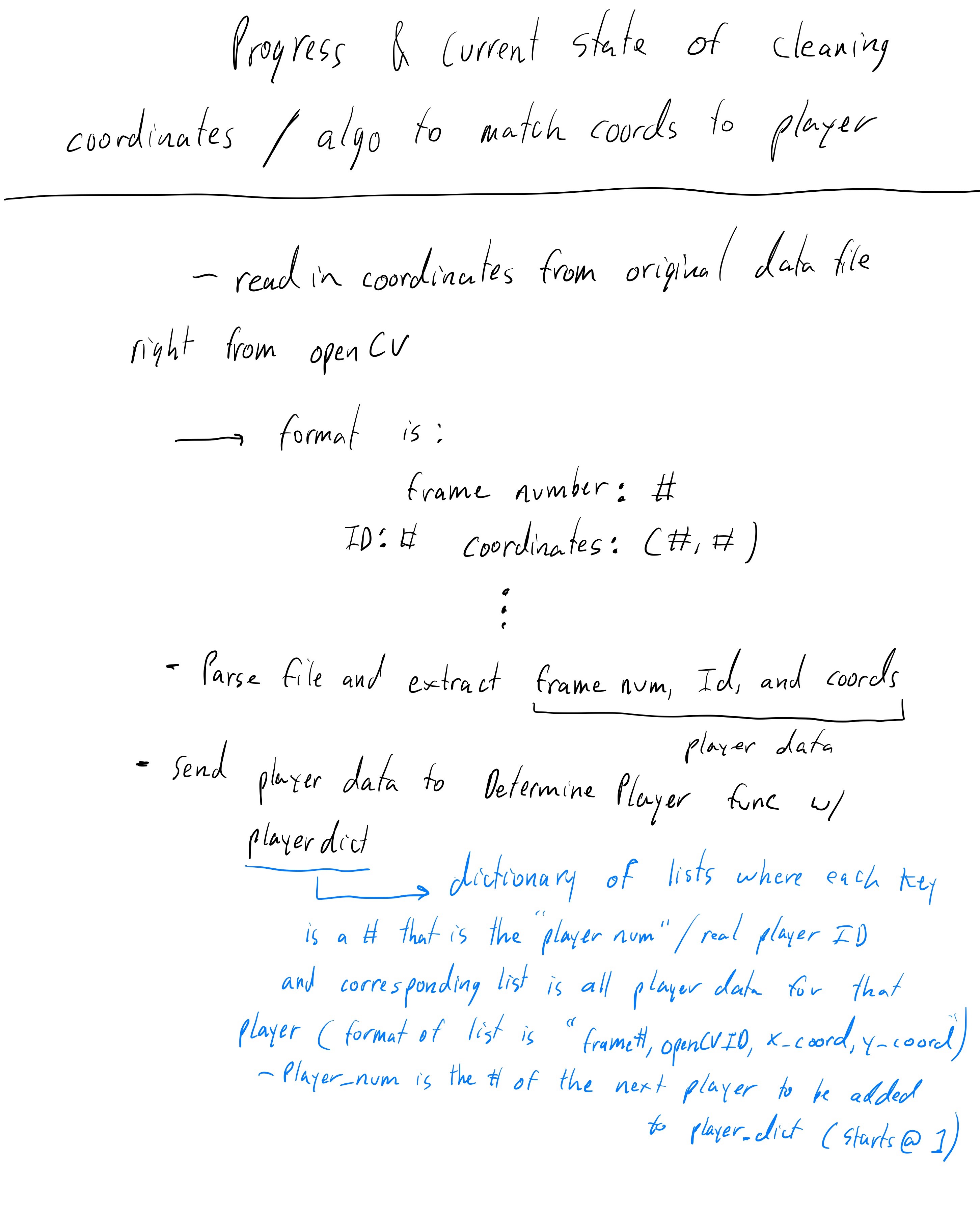

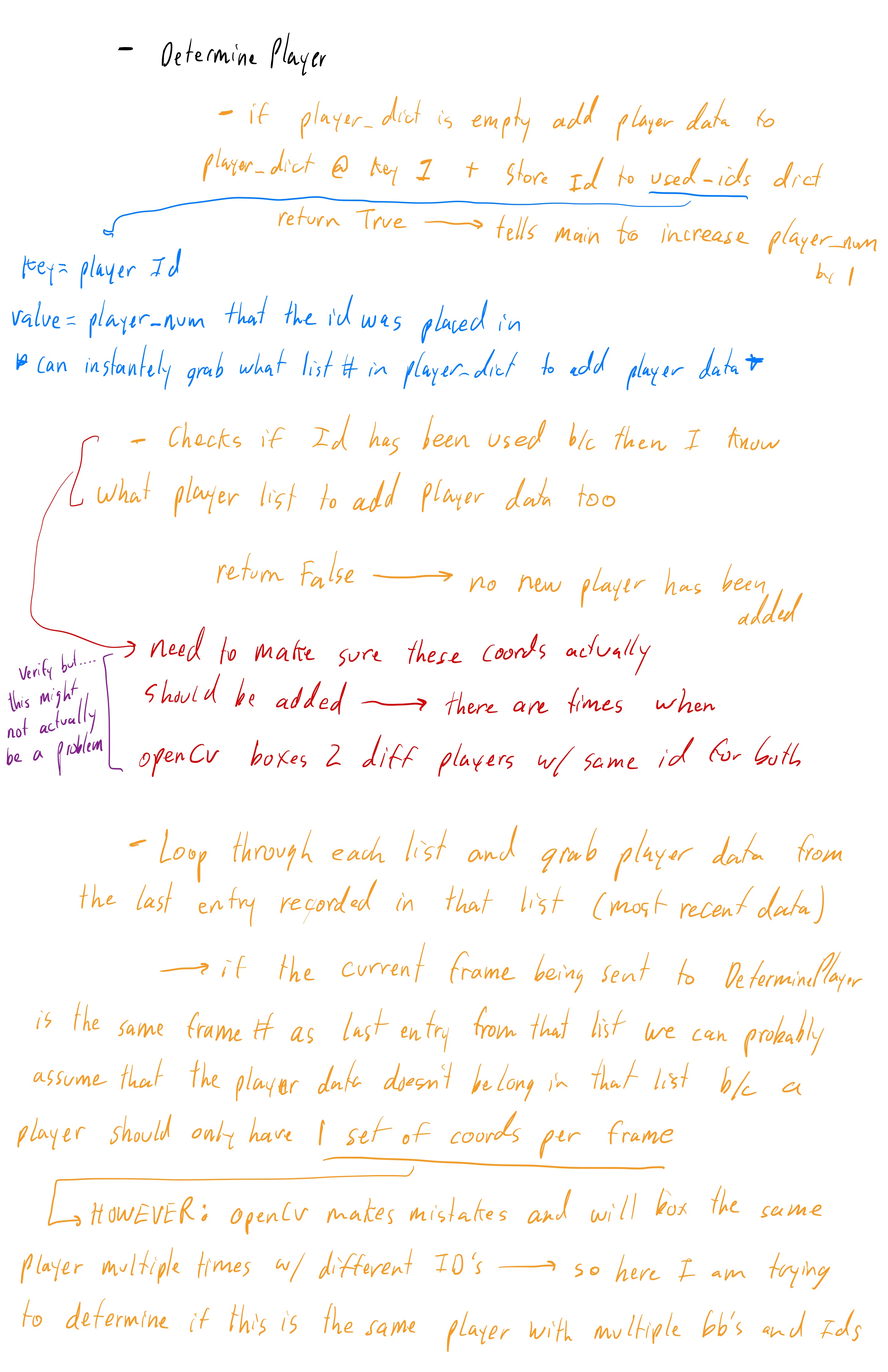

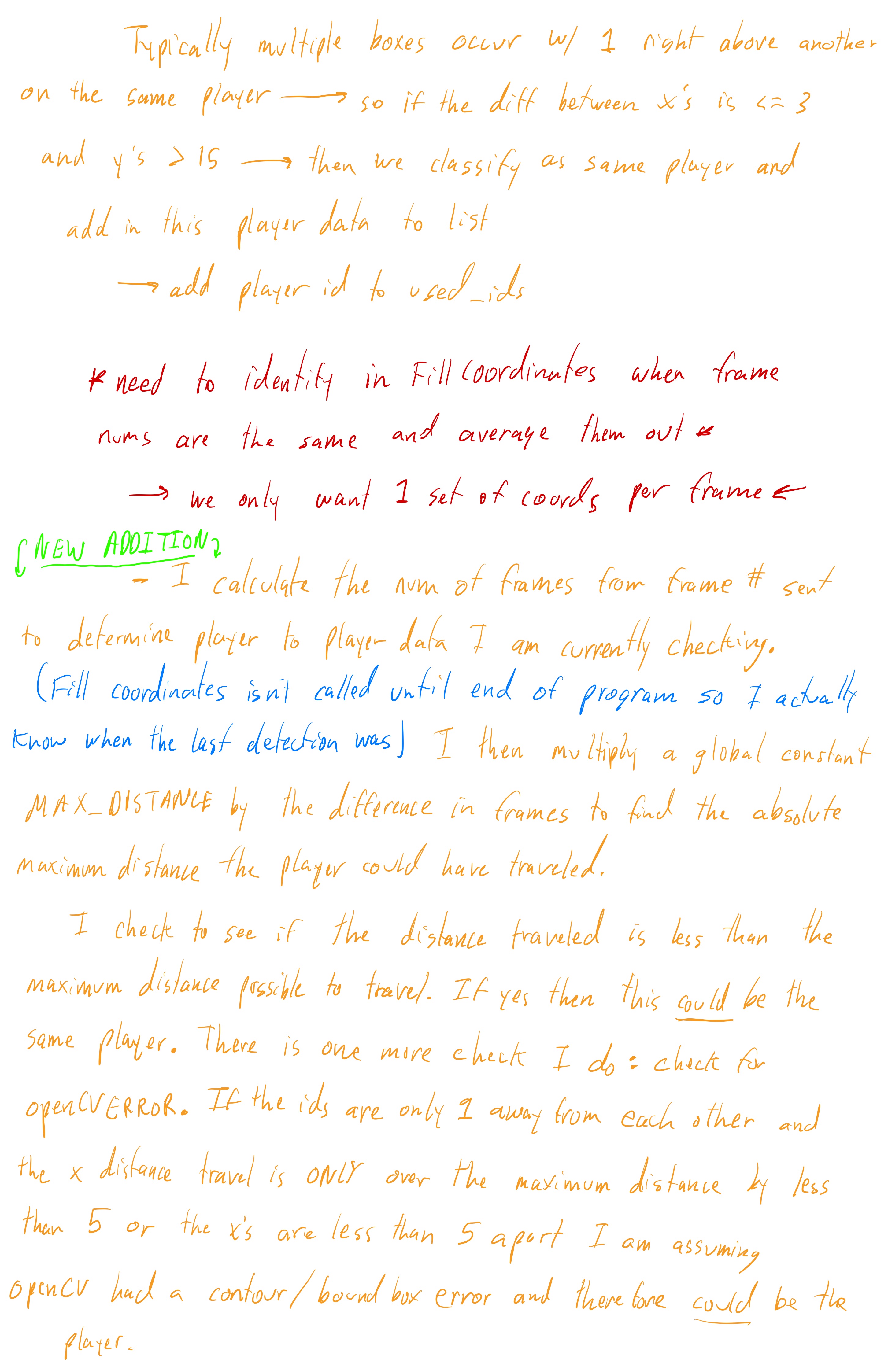

Documentation of clean_datafile.py

2/19/2024

2/14/2024

Possible solution to too many contours (over-identifying one object):

I found some code online that uses an agglomerative clustering algorithm to determine whether the contours’ bounding boxes are too close to each other and, if so, merges them. This should combine all of the contour bounding boxes on each player into one box per player. The problem is that it is currently very slow and crashes the program. I will investigate this algorithm further because I think it could be a fantastic solution to my problem.

See bounding boxes before and after the agglomerative clustering algorithm’s implementation:

Before

After
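The box-merging idea from this post can be sketched as single-linkage agglomerative clustering with a distance cutoff: two boxes join the same cluster if the gap between their edges is at most `max_gap`, and each cluster becomes one box covering all of its members. This is not the code found online; the gap metric, the `max_gap` value, and the names are assumptions. It uses a pairwise loop with union-find, which should be manageable for a few dozen contours per frame, although its speed on real footage is untested.

```python
import math
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h), e.g. from cv2.boundingRect


def merge_close_boxes(boxes: List[Box], max_gap: float) -> List[Box]:
    """Merge boxes whose edges are within max_gap of each other (single linkage)."""
    parent = list(range(len(boxes)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    def gap(a: Box, b: Box) -> float:
        # Euclidean distance between box edges; 0 if the boxes overlap.
        ax, ay, aw, ah = a
        bx, by, bw, bh = b
        dx = max(bx - (ax + aw), ax - (bx + bw), 0)
        dy = max(by - (ay + ah), ay - (by + bh), 0)
        return math.hypot(dx, dy)

    for i in range(len(boxes)):
        for j in range(i + 1, len(boxes)):
            if gap(boxes[i], boxes[j]) <= max_gap:
                parent[find(i)] = find(j)

    clusters: Dict[int, List[Box]] = {}
    for i, box in enumerate(boxes):
        clusters.setdefault(find(i), []).append(box)

    merged = []
    for members in clusters.values():
        x0 = min(x for x, _, _, _ in members)
        y0 = min(y for _, y, _, _ in members)
        x1 = max(x + w for x, _, w, _ in members)
        y1 = max(y + h for _, y, _, h in members)
        merged.append((x0, y0, x1 - x0, y1 - y0))
    return merged
```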

2/14/2024

Route 1:

Play the video with a player selected to track. If the tracker starts to track the wrong player, freeze the video and reselect the correct player. This prevents the datafile from receiving incorrect player coordinates. This is clearly a preprocessing step that requires human interaction and correction (I don’t love this option because of that).

Route 2:

I prefer this option because it involves no human interaction or correction. The idea is to track all players’ coordinates and store them in a datafile. The user then selects a player to track, and while the tracker follows that player, the program monitors the selected player’s coordinates to make sure they are consistent with what’s already stored in the datafile. Since we have data on all of the players’ movements, I will be able to identify when the tracker starts tracking the wrong player and make the necessary corrections. This would be awesome!

2/10/2024

At the moment I have decided to go with cv2.TrackerCSRT_create(), since it tracks a player pretty well and lets the user pick which player to track (only one at the moment). I noticed that when players overlap, the tracker sometimes picks up the wrong player and begins to track them instead. I would like to eliminate this problem as best I can. Right now I believe the best way to solve it is to calculate the players’ coordinates on the screen at each frame and store them in a datafile. That way I can clean the data and eventually make judgments that help the tracker make accurate decisions. Note that doing this means the project requires a preprocessing step (not on the fly!).
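A minimal sketch of the Route 2 consistency check, assuming the datafile has been loaded into a dictionary of each player’s stored (x, y) position at the current frame. The tracker’s box, in the (x, y, w, h) form OpenCV trackers report from `update()`, is reduced to its center, and the check flags a problem when that center is closer to another player’s stored position than to the selected player’s. The function names are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x, y, w, h)


def box_center(box: Box) -> Point:
    x, y, w, h = box
    return (x + w / 2, y + h / 2)


def check_tracker(tracker_box: Box, stored: Dict[int, Point],
                  selected_id: int) -> Tuple[bool, Optional[int]]:
    """Return (ok, nearest_id) for one frame.

    ok is False when the tracker's center is nearest to a different player's
    stored position, i.e. the tracker has probably switched players.
    """
    if not stored:
        return True, None
    center = box_center(tracker_box)
    nearest = min(stored, key=lambda pid: math.dist(center, stored[pid]))
    return nearest == selected_id, nearest
```

When `ok` is False, one possible correction is to re-initialize the tracker around the selected player’s stored position.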

Start augmenting video:

Dr. McVey suggested that I start experimenting with the video augmentation part of the project. Eventually I want the program to track the path of the players. I found an OpenCV method that draws a line between coordinates, and I can now draw a line from a player’s starting position to their current position. This isn’t exactly following a player’s path, but it’s a starting point. Next I will need to analyze the coordinates in the datafile and draw a player’s path from them.

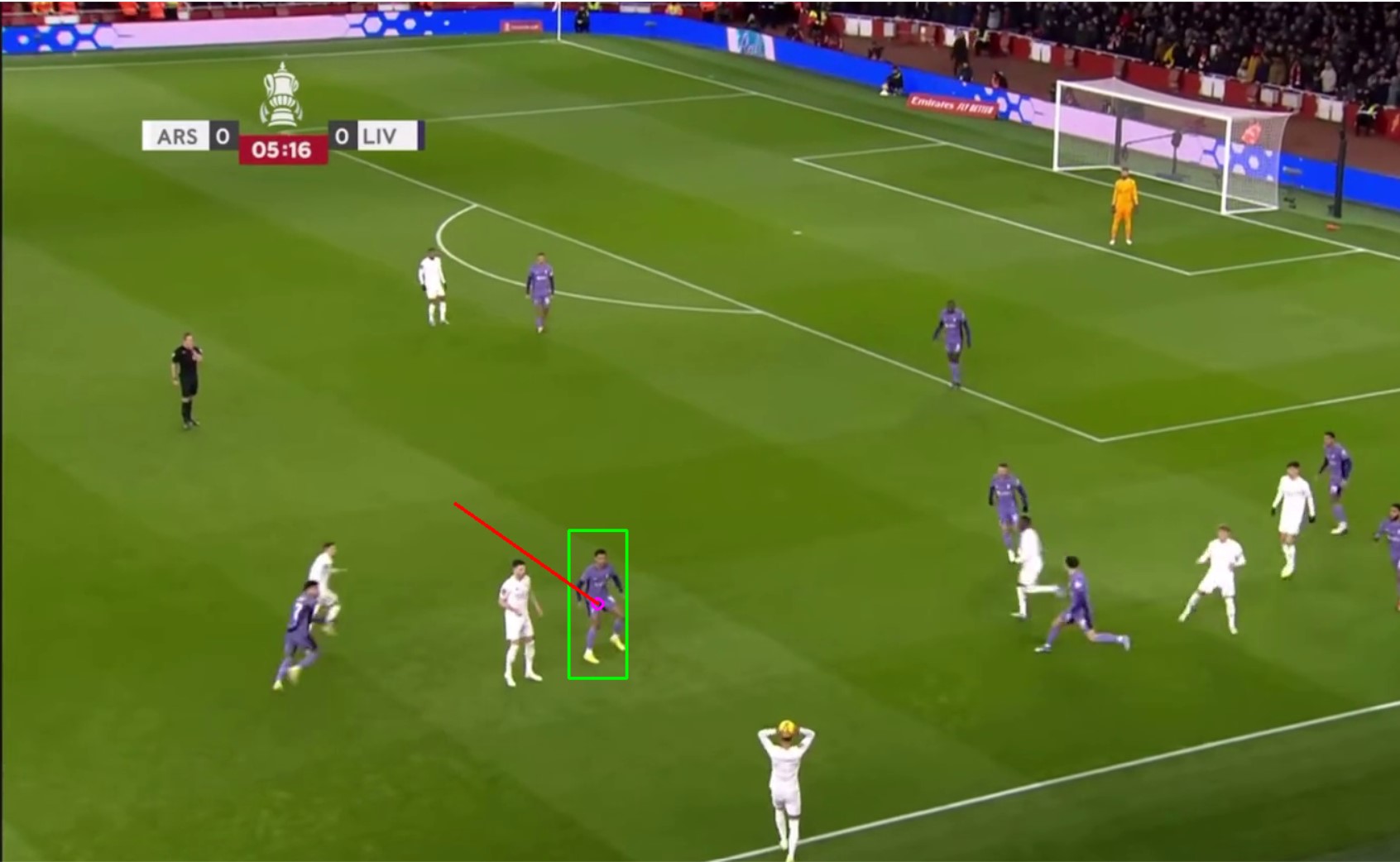

Static Camera reminder:

This is a perfect example of why a moving camera causes problems. A player’s coordinates are based on their exact position in the frame; if the camera moves, the same coordinates now point to a different location on the field. A static camera seems necessary.
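A sketch of turning a player’s list of coordinates into a drawable path up to the current frame, rather than a single line from the starting position to the current one. It assumes one (x, y) entry per frame and collapses consecutive repeats. As the static-camera note points out, these are frame coordinates, so the path is only meaningful when the camera doesn’t move. The function name is hypothetical.

```python
from typing import List, Tuple

Point = Tuple[int, int]


def path_until(coords: List[Point], frame_count: int) -> List[Point]:
    """Distinct consecutive positions a player occupied up to frame_count (inclusive)."""
    path: List[Point] = []
    for point in coords[: frame_count + 1]:
        if not path or point != path[-1]:
            path.append(point)
    return path
```

To draw it, the points could be passed to `cv2.polylines(frame, [np.array(path, np.int32).reshape(-1, 1, 2)], False, color, 2)`, or consecutive pairs could be joined with `cv2.line`.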

2/9/2024

2/7/2024

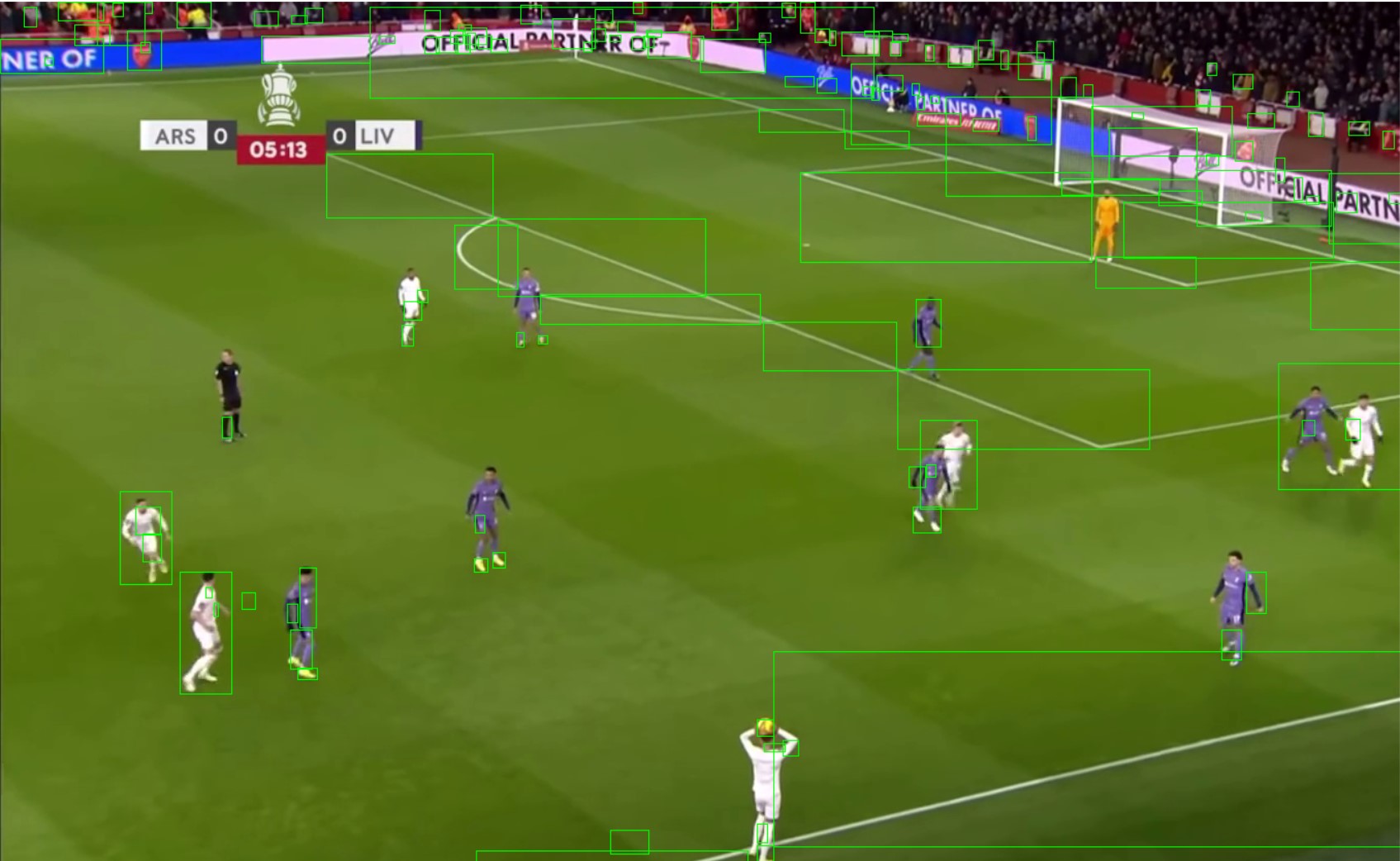

cv2.createBackgroundSubtractorMOG2():

Background subtraction is an image processing technique used for object detection and tracking. It separates the moving foreground of a video from the background so that objects in the frame can be identified. The resulting mask colors foreground objects white and sets the background to black. Using this method, I grab the contours from the mask (the black-and-white frame created by cv2.createBackgroundSubtractorMOG2()) and draw boxes around them to show the objects in the frame. The big problem with background subtraction here is over-detection: many objects I do not want to track get boxes drawn around them. The main reason there are huge contours on the field with no players in them is that, as the camera moves and zooms, OpenCV picks up the lines on the field and treats them as moving objects.
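One common way to reduce over-detection is to filter the contour bounding boxes by size and shape before drawing them. This sketch keeps boxes whose area and height-to-width ratio fall within a range a standing player might occupy; the thresholds are placeholder assumptions that would need tuning per video, and the function name is hypothetical. It does not fix camera motion itself, which is what makes whole field lines register as foreground.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h), e.g. from cv2.boundingRect(contour)


def filter_player_boxes(boxes: List[Box], min_area: int = 150,
                        max_area: int = 5000, min_aspect: float = 1.0,
                        max_aspect: float = 4.0) -> List[Box]:
    """Keep boxes sized and shaped roughly like a player (taller than wide)."""
    kept = []
    for x, y, w, h in boxes:
        if w <= 0 or h <= 0:
            continue
        area = w * h
        aspect = h / w  # height-to-width ratio
        if min_area <= area <= max_area and min_aspect <= aspect <= max_aspect:
            kept.append((x, y, w, h))
    return kept
```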

cv2.HOGDescriptor():

HOG (histogram of oriented gradients) is a feature descriptor that, combined with OpenCV’s built-in people detector, can detect humans in an image or video. This method works really well at identifying the players on the field, but it runs extremely slowly. I am not yet sure whether I can improve the performance with a different language (C++) or some other way.

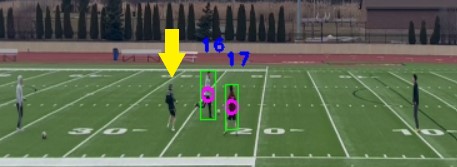

YOLOv8:

YOLOv8 is an object detection and tracking model that I came across while researching. I actually want to avoid using it because I fear it will overcomplicate the process and be overkill for this specific task. I have a feeling that a pre-trained model like YOLO will run very slowly and not be worth it for what I am trying to do. The only reason this “route” is being considered is that I’m desperately searching for a way to detect both the players on the field and the ball. I think YOLO can do this accurately, but at a high cost. If I were to use it, I would detect the objects on the field and then filter out everything other than the players and the ball.
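The filtering step described here is simple once detections are in hand. This sketch uses a hypothetical `Detection` tuple rather than any particular library’s result object; “person” and “sports ball” are the COCO class names that COCO-pretrained detectors such as YOLOv8 report, and the confidence cutoff is an assumption.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    """Hypothetical detection record: class label, confidence, (x1, y1, x2, y2) box."""
    label: str
    confidence: float
    box: Tuple[float, float, float, float]


# COCO class names for people and balls.
KEEP_LABELS = {"person", "sports ball"}


def keep_players_and_ball(detections: List[Detection],
                          min_confidence: float = 0.4) -> List[Detection]:
    """Drop every detection that is not a person or a ball, or is low-confidence."""
    return [d for d in detections
            if d.label in KEEP_LABELS and d.confidence >= min_confidence]
```

Referees and spectators also come back as “person”, so this filter alone would not isolate the players.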

cv2.TrackerCSRT_create():

This tracker works from a bounding box that identifies a region of interest (ROI). This is a good thing, because part of my project requirements is for the user to choose which players to track. It would kill two birds with one stone (track players, and build in a GUI for drawing a box around the desired player). This method may seem like a no-brainer; however, I worry about what exactly is taking place behind the scenes. The OpenCV documentation is vague at best, which worries me, and I want to know what is happening on the backend to avoid future trouble. At the end of the day, though, this tracker works very well.