5/11/2021

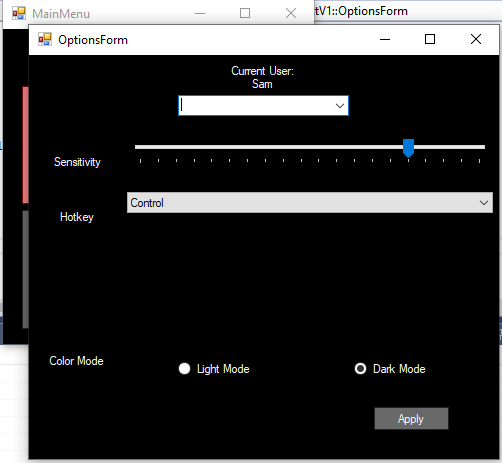

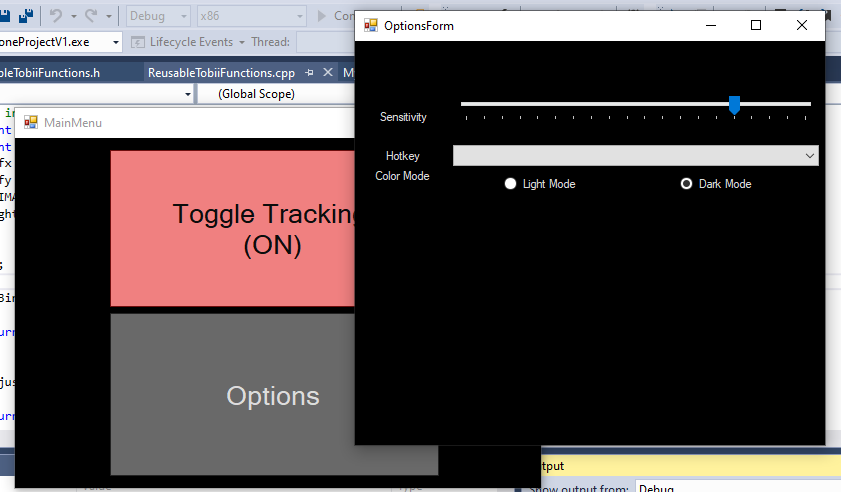

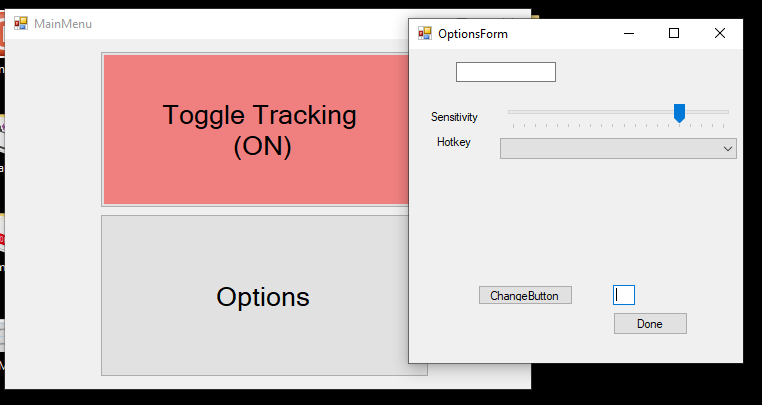

Well, this is it. This is the last blog post. A lot has happened since my previous one. For one, I have completed my project. All of the functionality that I wanted in it is implemented. There is of course still more that I could do if I were to continue working on this project, but that will always be the case. Secondly, I gave my actual presentation of the project, and I thought that it went very well! My classmates and the faculty were very supportive, and I got a lot of good questions and kind words of encouragement from everyone.

I don’t have much more to say. This capstone class has probably been my favorite course I have ever taken. The opportunity to build an application (and an interesting one at that) from the ground up by myself was awesome. The support that I had, not only from my peers but also from the incredible faculty, was amazing. Special shoutout to Dr. Pankratz for his incredible Mona Lisa and his interest in my progress, to Dr. Diederich for being the one who suggested I take on this project and for helping me throughout, and of course to Dr. McVey for being an unending well of support and knowledge. Thanks for everything. I can’t wait to see what comes next.