This past weekend my classmates and I presented our capstone projects. I really enjoyed seeing all of the unique projects in their final form. I presented my project Saturday at noon. It went extremely well! I felt calm and relaxed while presenting, and during my live demo of the game I had lots of fun. I’m both glad and sad that it is over. Speaking of being over, this will be my last blog post. If you wish to download my project and/or slide deck for my presentation, I will have downloadable links available on the project files page of this website. In terms of my future, I plan on entering the workforce immediately after graduation. I wish to obtain a job in software development in the Stevens Point, WI area.

In the past few days I have been working on two things: preparing my presentation for tomorrow and beautifying my game. Since my last blog post I have done numerous things to improve the visual aspect of my game. This includes:

• Creating a boxing ring for the user to stand on

• Applying brick wall textures

• Applying my logo as a texture for the ceiling

• Importing low-polygon punching bags from the Unity Asset Store (thanks to my classmate Myles for reminding me that the asset store exists!)

The video below showcases all of these changes I have made. I made sure to hide the front ropes of the boxing ring and the front punching bags whenever a menu is present. This is to ensure an unobstructed UI experience. Another cool feature is that whenever a cube is hit with the correct arm a small amount of force is applied to every punching bag. You’ll see in the video how much they move when I start hitting the blocks. This wasn’t difficult to implement, and I am incredibly satisfied with the result. Today’s song choice is Stayin’ Alive by the Bee Gees. Enjoy!

Much like Another One Bites The Dust, Stayin’ Alive has a prominent beat (CPR training, anyone?), which allows my beat detection algorithm to work well on it. Similarly, the rhythm (vocals and guitar) is often detected as well. I’m not going to lie, I’m having a blast playing these songs! The room I will be presenting in tomorrow is large and spacious, and with the room’s sound system I am going to have so much fun presenting. I am a little nervous, but I am definitely ready and eager to show off my project!

Last week I met with Dr. Pankratz to discuss beat detection again. He looked at some of the code I had and what my beat detection algorithm did. When running the code in game, it spit out way too many beats, so we inferred that my beat thresholding (what constitutes a beat) must have been the main issue. Turns out we were right. After adjusting this, along with some other minor things, my beat detection algorithm now works (and surprisingly well, I might add)! While I was adjusting the algorithm, I also implemented .wav, .ogg, and .aiff song support since Unity supports these audio formats. Anyway, here’s a demonstration of Another One Bites The Dust by Queen (at the beginning of the video I was switching between BPM Mode and Beat Detection to show that the settings for BPM Mode are preserved when switching).
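For anyone curious what "beat thresholding" means in practice, here is a minimal sketch in Python. It is an illustration only, not the C# code that runs in the game: the function name `detect_beats`, the `window` and `sensitivity` parameters, and the sample numbers are all assumptions made up for this example. The idea is that a frame counts as a beat only when its energy is noticeably higher than the average of the frames just before it, and a threshold that is set too low flags far too many frames.

```python
def detect_beats(energies, window=43, sensitivity=1.5):
    """Return the indices of frames whose energy exceeds
    sensitivity times the average of the preceding frames.

    energies    -- one energy value per audio frame (hypothetical input)
    window      -- how many previous frames form the local average
    sensitivity -- the threshold multiplier; lower values flag more beats
    """
    beats = []
    for i, energy in enumerate(energies):
        history = energies[max(0, i - window):i]
        if not history:
            continue  # nothing to compare the first frame against
        average = sum(history) / len(history)
        if energy > sensitivity * average:
            beats.append(i)
    return beats


# Made-up frame energies: quiet background with a loud hit every fifth frame.
frames = [1.0, 1.2, 1.0, 1.2, 5.0, 1.0, 1.2, 1.0, 1.2, 5.0]

too_sensitive = detect_beats(frames, window=3, sensitivity=1.05)
well_tuned = detect_beats(frames, window=3, sensitivity=2.0)
```

With the low multiplier, small wobbles in the background are reported as beats alongside the two loud hits; with the higher multiplier only the loud hits remain.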

As you can see, it works very well! It detects the beat with ease and also detects prominent rhythms. I am extremely happy with the result, and a huge weight has been lifted off of my shoulders. Now all I need to do is prepare my presentation for my time slot on Saturday.

Every year St. Norbert College hosts the Undergraduate Research Forum, an event where students can show off undergraduate research, scholarships, and creative activities. For this event, I created a presentation and gave a demonstration of my project so far (down below). I am glad I was able to participate this year, as I think my project is cool to show off. Also, this was good practice for my final capstone presentation next weekend.

Capstone walkthroughs were a couple of weeks ago, and I thought mine went pretty well. I obtained valuable feedback and have made lots of changes since my last blog post! In the past two weeks I have made my game less basic-looking by adding light emission to the cubes, as well as particle effects and controller vibration when they are destroyed (visual and haptic feedback). I also used free concrete and neon textures for the floor and front wall respectively. Additionally, each arm is now color-coded (left arm is red and right arm is blue) and is responsible for punching cubes of the corresponding color. If a mismatch occurs, an error sound is played (I promise it is a lot quieter than what the video below outputs). Originally I planned on incorporating beat detection into the game by now. Since Easter break was right after walkthroughs and I felt like I needed a break, I essentially swapped the time slot I allocated for beat detection with the time slot for additional features. Now that my game looks prettier, I will try and incorporate beat detection.

Over the past week I’ve spent time completing the game loop. After the main menu was completed (as of my last blog post), I started by creating an end of song menu, which displays the score the player got and a button to go back to the main menu. I used a quick, ten-second audio track as my selected song to make programming go by much quicker (what a pain if I waited three or more minutes for a regular song!).

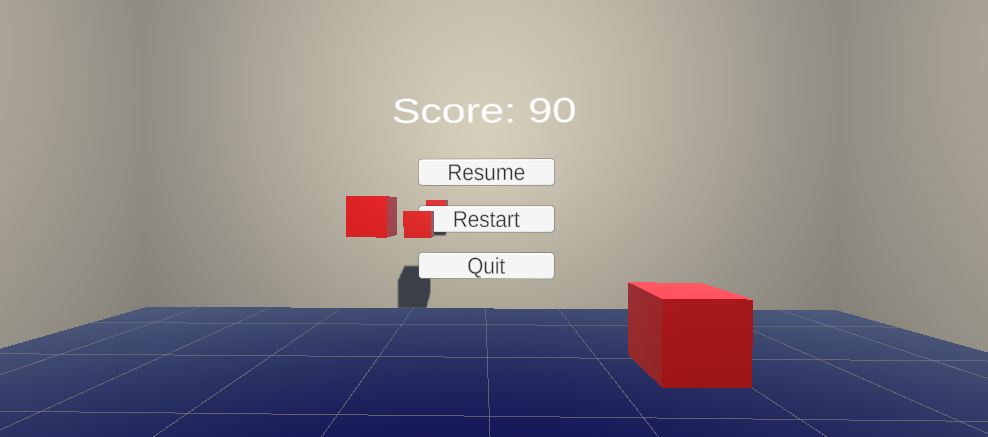

As you can see, I also have a “Play Again” button. This was added after I created the pause menu, which I will show next. The pause menu is brought up when the player hits the primary button on either of their VR controllers (on my Oculus Touch controllers this is the ‘X’ button on the left controller and the ‘A’ button on the right controller). It features a resume button, which unpauses the game, a restart button, which restarts the song, and a quit button, which brings the player back to the main menu. I have yet to decide if I want to hide the cubes when the game is paused. Moving on, after creating the on-click function for the restart button, I was able to create the “Play Again” button on the end of song menu and reuse this function, only having to modify it slightly. Lastly, a textbox displays the score at all times during the game, whether or not the game is paused.

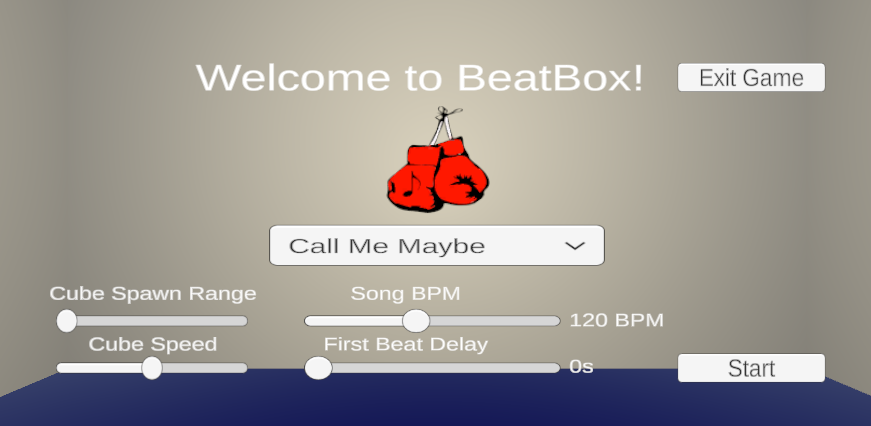

Finally, what about the main menu? The main menu screen hasn’t changed much since the last blog post, but there are noteworthy changes. The biggest change you may have noticed is my new, beautiful logo for the game (also on the end of song screen)! I’ve also created an “Exit Game” button. Clicking this kills the game application.

Next on my plate is incorporating beat detection! I will be trudging through arguably too much math to try and make an accurate beat detection algorithm. It’s not going to be easy.

Since my last update two weeks ago, I have learned a lot about how UI is done in VR applications within Unity. I was able to create the entire main menu, including difficulty adjustments and song selection capabilities. I have yet to create a pause menu, in-game score display, and end of song menu, which will be my next goal. I have fallen a little behind on schedule since midterms were last week. Because of this, I wasn’t able to work on the project as much as I would have liked. On the bright side, my schedule is now two classes lighter, which gives me plenty of time to catch up. Below is a video displaying the in-game song selection and using the main menu to tailor the game to the song.

On another note, I met with Dr. Pankratz on Thursday, March 11th to discuss beat detection. Everywhere you look for beat detection online you’re sure to encounter the fast Fourier transform (FFT). We discussed this and the concept of breaking up audio into different frequency bands, and using these bands to determine what constitutes a beat. I told Dr. Pankratz about the beat detection algorithm I found and incorporated into my project. I expressed that I wasn’t super pleased with how it worked, but I was happy to have something. After this, we talked about having two separate modes: one for beat detection, which may or may not work well for certain songs, and one for beats per minute, which will spawn cubes at a constant rate for the entire song. Once I finish the rest of the UI and start working on beat detection more, this would be as easy as throwing two radio buttons on the main menu screen and polling a game mode flag when the game runs.
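To make the frequency band idea concrete, here is a small Python sketch. It is not the project's code, and for readability it uses a plain (slow) discrete Fourier transform rather than a real FFT; an FFT gives the same numbers much faster. The function name `band_energies`, the band edges, and the test tone are all assumptions chosen for the example.

```python
import cmath
import math


def band_energies(samples, sample_rate, bands):
    """Sum the spectral power of `samples` inside each (low_hz, high_hz) band.

    Uses a naive O(n^2) discrete Fourier transform, so it only suits
    short chunks of audio. A real implementation would use an FFT.
    """
    n = len(samples)
    energies = [0.0] * len(bands)
    for k in range(n // 2):  # only frequencies below half the sample rate
        freq = k * sample_rate / n
        coeff = sum(
            samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        power = abs(coeff) ** 2
        for b, (low, high) in enumerate(bands):
            if low <= freq < high:
                energies[b] += power
    return energies


# Hypothetical input: one second of a pure 4 Hz tone sampled at 64 Hz.
rate = 64
tone = [math.sin(2 * math.pi * 4 * t / rate) for t in range(rate)]

# Two made-up bands: "low" (0 to 8 Hz) and "high" (8 to 32 Hz).
low_energy, high_energy = band_energies(tone, rate, [(0, 8), (8, 32)])
```

Nearly all of the energy of the 4 Hz tone lands in the low band. A beat detector built on this idea would track each band's energy over time and look for sudden jumps, for example in the low band where kick drums tend to sit.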

Since I figured out hand collision a bit sooner than I thought I would, I decided to start looking into beat detection. I met with Dr. Meyer, a math professor at St. Norbert, on Tuesday, 3/2 to discuss audio compression (codecs, compression, decompression). We then discussed the options I have for incorporating beat detection into my game. Essentially I can import a .dll (dynamic-link library) into my project and call functions that other people have written already, or I can make my own functions. Since I want to incorporate beat detection into my game in a few weeks, I decided to experiment this week and see if I could research beat detection and make my own functions within my game project. There are many resources out on the web, but many of these resources are part of forum posts that are years old. Multiple websites have referenced an article, hosted on a different website, that has long since been deleted (darn!). I have found a couple of recent posts on beat detection and have tried to adjust code that other people have written for my specific use case. I have been successful with one snippet of code I have found, but it doesn’t work nearly as well as I’d like it to. Then, I spent several hours trying to write my own beat detection algorithm from scratch, but it doesn’t seem to work quite yet. I started to stress out about this since I don’t have much to show for the last week, but kept reminding myself that I have plenty of time still, and that at least the snippet of code that I implemented in my project worked somewhat. I can always fall back on that if I can’t figure out my own algorithm.

After exploring beat detection this past week, I looked up a couple of videos on creating a UI within a VR environment in Unity. So far I’ve made a UI canvas in my project and added a single toggle button to it. This shows up in game, but now I need to find out how to interact with it with the controller. This is my next goal, and once I do this, I will be able to add more UI elements to the canvas and write functions that are called when these elements are interacted with.

Over the weekend I worked quite a lot on the project. I created a blocky arm model that maps to the VR controllers. Because of this, I was able to attach a collider to the “hand” and successfully test for collisions with the moving cubes. As of now, I have a variable for score that goes up by one for each block hit (as seen in the console window within Unity in the attached video). If blocks are not hit, they despawn shortly after they pass the player. In the future I will make scoring more interesting. For now, I have hardcoded Carly Rae Jepsen’s Call Me Maybe as the song that plays after a two second delay. Within the game object that controls the cubes spawning I have hardcoded the spawn time variable to be 0.5 since Call Me Maybe is 120 beats per minute (one cube every half second). I chose Call Me Maybe to test because it has a constant beat (except right at the end), 0.5 is a nice, round number for spawning the cubes, and because the song slaps. Once I learn more about beat detection, I plan on storing the milliseconds between each beat of the song in an array, and this spawn time variable will use these millisecond values for each succeeding beat. This will account for songs that speed up or slow down. To make sure the song is synced with when players hit the cubes, all I have to do is wait to start playing the music until the first cube is about an arm’s length away. For my next step, I plan on creating the user interface. While I am doing this I also plan on looking into beat detection, as that is the next step after creating the UI. Here is a live demo of the project so far! Enjoy.
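The timing arithmetic above can be written out as a short Python sketch. This is an illustration rather than the game's actual code: the function names, the beat timestamps, and the distance and speed values are all made-up assumptions.

```python
def spawn_interval_seconds(bpm):
    """Seconds between cubes when one cube is spawned per beat."""
    return 60.0 / bpm


def intervals_ms(beat_times_ms):
    """Milliseconds between each pair of succeeding beats.

    beat_times_ms is a hypothetical list of beat timestamps, such as a
    beat detector might produce for a song that changes tempo.
    """
    return [later - earlier
            for earlier, later in zip(beat_times_ms, beat_times_ms[1:])]


def music_start_delay(spawn_distance, arm_length, cube_speed):
    """Seconds to wait before starting the music so that the first cube
    is about an arm's length from the player on the first beat."""
    return (spawn_distance - arm_length) / cube_speed


# 120 beats per minute works out to one cube every half second.
call_me_maybe_interval = spawn_interval_seconds(120)

# A made-up song that slows down slightly on its last beat.
gaps = intervals_ms([0, 500, 1000, 1600])

# Made-up numbers: cubes spawn 8 units away, arms reach 0.5 units,
# and cubes travel at 5 units per second.
delay = music_start_delay(8.0, 0.5, 5.0)
```

Here `call_me_maybe_interval` comes out to 0.5 seconds, `gaps` is `[500, 500, 600]`, and `delay` is 1.5 seconds. Storing the gaps rather than a single interval is what lets the spawner follow a song that speeds up or slows down.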

On a side note, I also migrated my project from an older version of Unity. This enabled me to install the OpenVR plugin in my project, which lets the game run through SteamVR. This allows a wide range of headsets (other than my Oculus Rift) to play the game. One side effect of doing this, however, was that my controller model that is mapped to the VR controllers was rotated 90 degrees. So, when the game started, the blocky controller models would be pointing straight up instead of pointing away from me. To fix this, I had to adjust the positioning and rotation of the two blocks within the model asset itself. All of this took a fair amount of time, but now my game can be played on every mainstream VR headset. Woohoo!

In the past week I have watched several Unity tutorials on different concepts that I will need to understand as I make my game. I have also discussed my project and certain aspects of it with several of my classmates and even with my roommates. I spoke with Sam Spika, a fellow classmate, about the basics of Unity, as he has told me that he has a little experience with the engine. He was able to help me understand the concept of a game controller object, as well as scripting within the game engine in general. This helped my confidence a lot! Lastly, I haven’t looked into finding an algorithm for determining the beat of an audio file, but I do have an idea in my mind of how I want the data to look for each audio file saved in the game folder once I get there.

Today, Thursday, 2/18/2021, we had multiple group sessions in which we all gave each other feedback. I thoroughly enjoyed both giving feedback to those I reviewed as well as receiving feedback from those who reviewed me. There were plenty of good suggestions that I hadn’t thought about for my project, and I hope I can implement some of them if I have the time to do so. My goal for this next week is to solidify my plan for the rest of the semester, with milestones every week or two. Another goal of mine is to set up the game so that the objects that the users will “hit” in-game (these will most likely be cubes) continuously spawn and move towards the user, while de-spawning once they pass the user.